Maybe not much. Except that it's coming sooner than before.

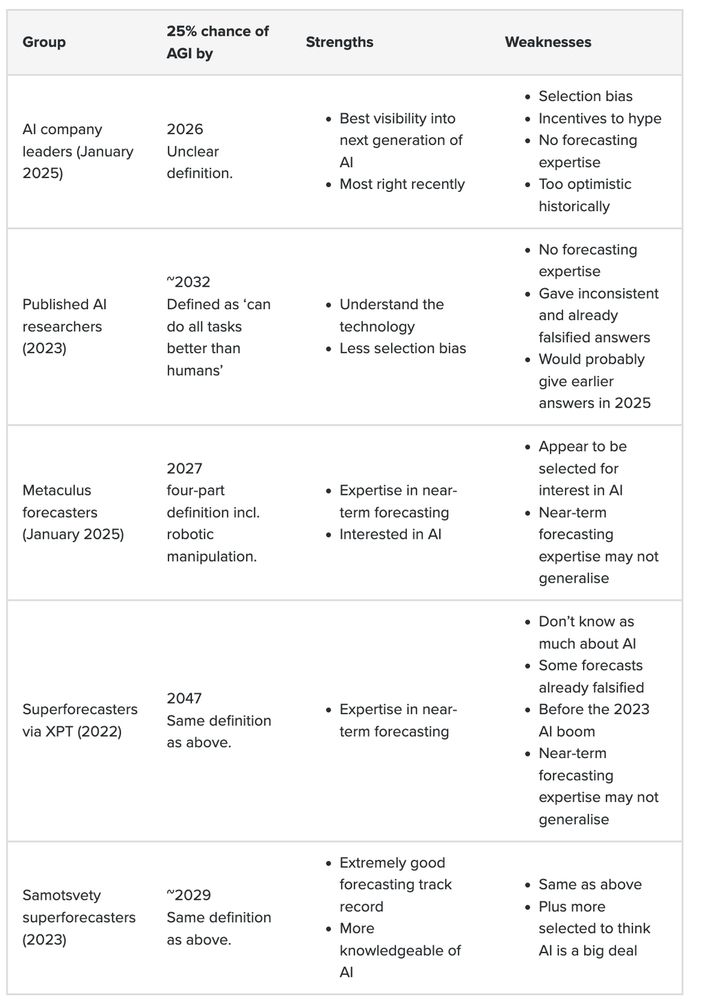

I did a review of the 5 most relevant expert groups and what we can learn from them.

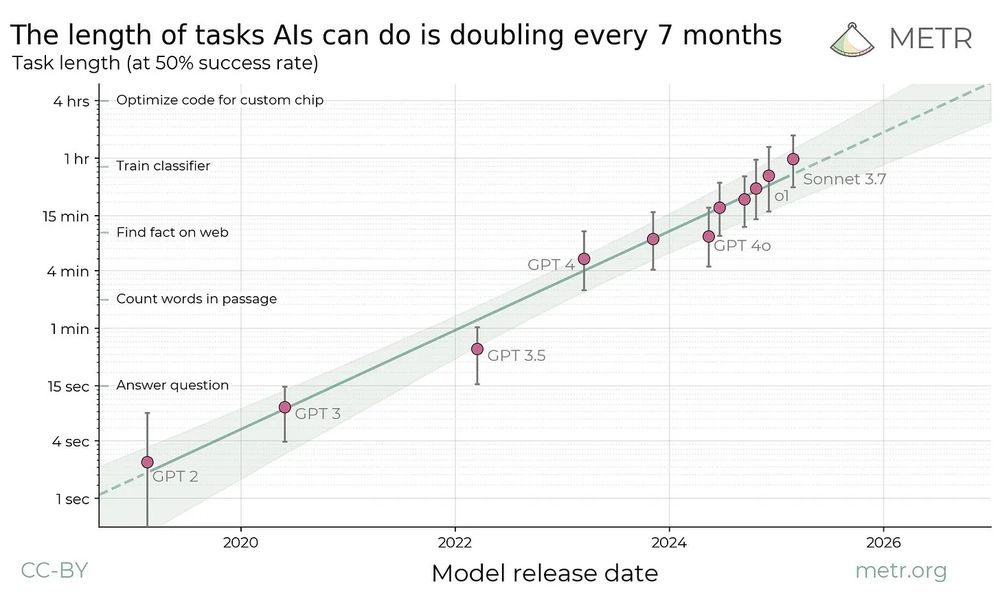

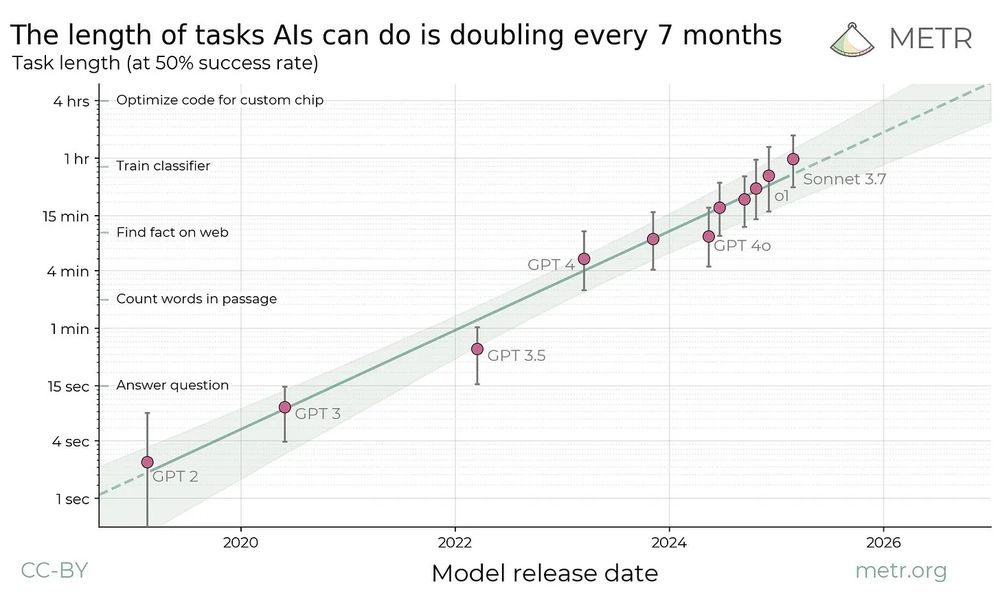

Models today can do tasks of up to 1h.

But real jobs mainly consist of tasks taking days or weeks.

So AI can answer questions but can't do real jobs.

But that's about to change...

Is this just hype, or could they be right?

I spent weeks writing this new in-depth primer on the best arguments for and against.

Starting with the case for...🧵

It’s perhaps the most important single piece of evidence for short timelines we have right now.

Cost to replicate the model drops 10x per year. In a couple of years, it's cheap enough that hundreds of companies can do it...

But when I imagine the future, I see a gradual erosion of human influence in an economy of trillions of AIs.

So I'm glad to see a new paper about those risks🧵

I would also add that if you are not familiar with o1 pro, your observations about the shortcomings of AI models should be discounted rather severely. And o3 pro is due soon; presumably it will be better still.

Most severe 10-year risk is extreme weather events??

They've totally missed the new paradigm: teaching AI to reason using reinforcement learning.

Here's an attempt at an explainer of why it's the biggest thing going on right now.