a new GDM paper shows that embeddings can’t represent combinations of concepts well

e.g. Dave likes blue trucks AND Ford trucks

even k=2 sub-predicates make SOTA embedding models fall apart

www.alphaxiv.org/pdf/2508.21038
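
a toy sketch for intuition, not the paper's experiment: assume dot-product retrieval with hypothetical 1-dimensional embeddings for three documents, then check which top-2 result sets any query can produce. the doc vectors, the query sweep, and the helper name `reachable_top_k` are all made up for illustration.

```python
from itertools import combinations

def reachable_top_k(docs, queries, k=2):
    """Collect every top-k set of document indices that some query retrieves."""
    reachable = set()
    for q in queries:
        # Dot-product score of the query against each document embedding.
        scores = [sum(qi * di for qi, di in zip(q, d)) for d in docs]
        ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
        reachable.add(frozenset(ranked[:k]))
    return reachable

# Hypothetical 1-D embeddings (assumption, chosen only for illustration).
docs = [(-1.0,), (0.2,), (1.0,)]

# Sweep nonzero 1-D queries; zero is skipped because it ties every score.
queries = [(x / 10.0,) for x in range(-20, 21) if x != 0]

all_pairs = {frozenset(p) for p in combinations(range(len(docs)), 2)}
reachable = reachable_top_k(docs, queries)
missing = all_pairs - reachable

print("reachable top-2 sets:", sorted(sorted(s) for s in reachable))
print("unreachable top-2 sets:", sorted(sorted(s) for s in missing))
```

in one dimension a query can only sort the documents ascending or descending, so the pair made of the two extreme documents never comes back together. this only shows that the embedding dimension limits which combinations are retrievable; it says nothing about how real models behave.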

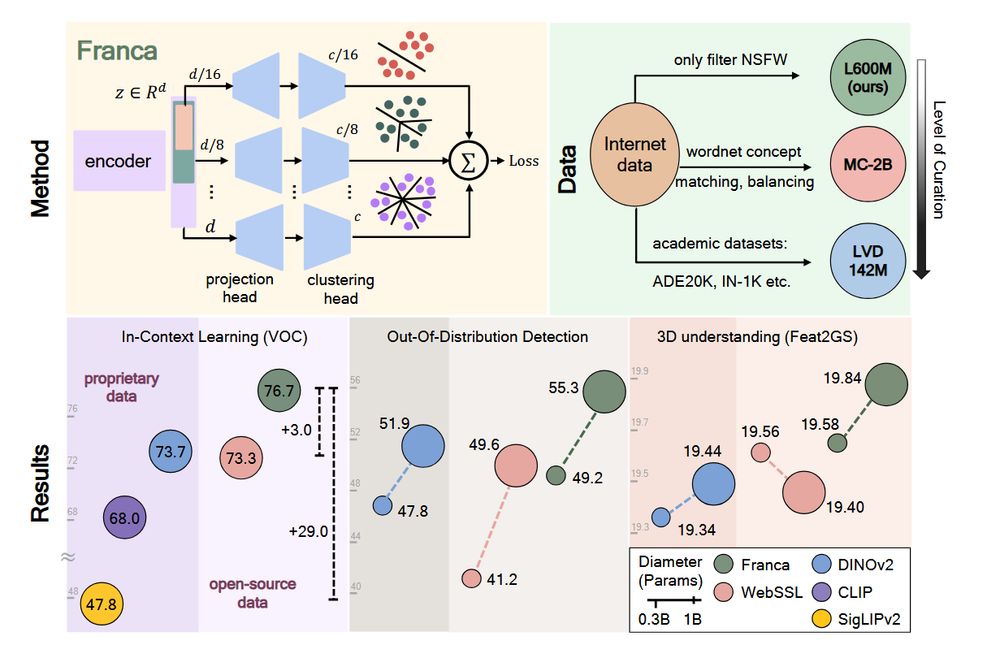

uh, holy shit this one is intriguing. bare minimum they compare themselves to all the (actual) top models and do okay

but inside.. damn this one has some cool ideas

huggingface.co/meituan-long...

Now it seems natural to model the noise, find new clean data it can destroy, and then train a model to reverse the process.

Machine learning makes you a sicko.
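
a minimal sketch of that recipe, under loud assumptions: the noise is additive Gaussian, the "clean data" is synthetic, and the "model" is a one-parameter-pair linear denoiser fit by least squares. none of these choices come from the post; they are just the smallest runnable instance of corrupt-then-learn-to-reverse.

```python
import random

random.seed(0)

def corrupt(x, sigma=1.0):
    """Assumed noise model: additive Gaussian noise with standard deviation sigma."""
    return x + random.gauss(0.0, sigma)

def fit_linear_denoiser(noisy, clean):
    """Least-squares fit of clean ~ a * noisy + b (closed form, one feature)."""
    n = len(noisy)
    mean_y = sum(noisy) / n
    mean_x = sum(clean) / n
    cov = sum((y - mean_y) * (x - mean_x) for y, x in zip(noisy, clean))
    var = sum((y - mean_y) ** 2 for y in noisy)
    a = cov / var
    b = mean_x - a * mean_y
    return a, b

def mse(pred, target):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)

# 1) Take clean data (synthetic here) and destroy it with the noise model.
train_clean = [random.gauss(0.0, 1.0) for _ in range(2000)]
train_noisy = [corrupt(x) for x in train_clean]

# 2) Train a model to reverse the corruption.
a, b = fit_linear_denoiser(train_noisy, train_clean)

# 3) Check on fresh pairs that denoising beats doing nothing.
test_clean = [random.gauss(0.0, 1.0) for _ in range(2000)]
test_noisy = [corrupt(x) for x in test_clean]
denoised = [a * y + b for y in test_noisy]

mse_noisy = mse(test_noisy, test_clean)
mse_denoised = mse(denoised, test_clean)
print(f"a={a:.3f} b={b:.3f} noisy MSE={mse_noisy:.3f} denoised MSE={mse_denoised:.3f}")
```

a real pipeline would swap in an estimated noise model and a neural denoiser, but the shape of the loop stays the same.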

1) AI has obvious utility to many; this is already a tremendous amount of use

2) There is room for multiple frontier model providers, at least for now

3) Any losses from subsidizing the cost of AI use (and it is not clear this is happening) are now relatively small

aside from the absolute piles of cash, Sama is very SV-minded and can’t imagine building apart from a product

a lot of accelerationists see things differently, more broadly, and it's dissatisfying to be forced into a product box

OpenAI self-admits that they optimize their models for ChatGPT, o3 was made for DeepResearch

Moonshot was dissatisfied with that

- H Nets arxiv.org/abs/2507.07955

- Energy Based Transformers arxiv.org/abs/2507.02092

Boyuan Sun, Modi Jin, Bowen Yin, Qibin Hou

arxiv.org/abs/2507.01634

Trending on www.scholar-inbox.com

notable:

- high accuracy

- actually streaming (can use streaming text input)

- serves 32 simultaneous users on a single GPU

- voice cloning

- supports all 24 official EU languages

kyutai.org/next/tts

github.com/mirage-proje...

Accompanying blog post and mini thread

github.com/photoroom/da...

www.photoroom.com/inside-photo...

1/N

Made me realize that there is zero moat in this field, at least for Copilot.

- Slack locked down its messages data.

- X locked down its post data.

- Anthropic cut off OpenAI's Windsurf.

- Google will stop using Scale.

The dream of unfettered MCP interconnects is a mirage.

www.dbreunig.com/2025/06/16/d...

arxiv.org/pdf/2506.10943

morethanmoore.substack.com/p/amds-ai-fu...

example code here github.com/Photoroom/da...

arxiv.org/abs/2505.11594

Last year, we showed how to turn Stable Diffusion 2 into a SOTA depth estimator with a few synthetic samples and 2–3 days on just 1 GPU.

Today's release features:

🏎️ 1-step inference

🔢 New modalities

🫣 High resolution

🧨 Diffusers support

🕹️ New demos

🧶👇
