Thinking about language models

Past: PhD at NYU, fellow at Harvard’s Kempner Institute

With @hlzhang109.bsky.social @schwarzjn.bsky.social @shamkakade.bsky.social


#ML #AI


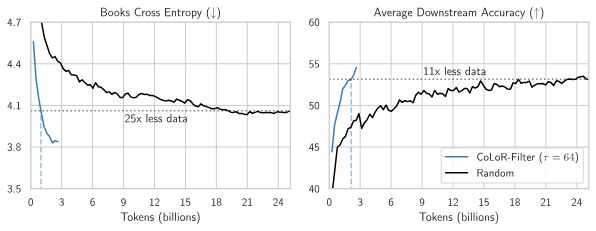

We propose a methodology to approach these questions by showing that we can predict the performance across datasets and losses with simple shifted power law fits.
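As a rough illustration of what a shifted power law fit can look like, here is a minimal sketch. It assumes the form y ≈ K * (x - x0)^kappa + y0; the post does not spell out this form, so it is an assumption. The function name, the synthetic data, and the fitting procedure (a grid search over the two shifts with a log-log least-squares fit for K and kappa) are likewise illustrative choices, not the authors' method.

```python
import math

def fit_shifted_power_law(xs, ys, x_shifts, y_shifts):
    """Fit y ≈ K * (x - x0)**kappa + y0 (assumed form).

    Grid-searches the shifts (x0, y0); for each pair, K and kappa come
    from ordinary least squares on log(y - y0) versus log(x - x0).
    Returns (K, kappa, x0, y0) with the lowest squared error.
    """
    best = None
    for x0 in x_shifts:
        for y0 in y_shifts:
            # Both shifted quantities must stay positive to take logs.
            if min(xs) <= x0 or min(ys) <= y0:
                continue
            u = [math.log(x - x0) for x in xs]
            v = [math.log(y - y0) for y in ys]
            n = len(u)
            mu, mv = sum(u) / n, sum(v) / n
            suu = sum((a - mu) ** 2 for a in u)
            if suu == 0:
                continue
            kappa = sum((a - mu) * (b - mv) for a, b in zip(u, v)) / suu
            K = math.exp(mv - kappa * mu)
            # Score the candidate in the original (non-log) space.
            err = sum((K * (x - x0) ** kappa + y0 - y) ** 2
                      for x, y in zip(xs, ys))
            if best is None or err < best[0]:
                best = (err, K, kappa, x0, y0)
    if best is None:
        raise ValueError("no valid shift pair in the grids")
    return best[1:]

# Synthetic, noise-free data generated from known (made-up) parameters.
true_K, true_kappa, true_x0, true_y0 = 1.5, 0.8, 1.8, 2.0
xs = [2.2, 2.5, 3.0, 3.5, 4.0, 5.0]
ys = [true_K * (x - true_x0) ** true_kappa + true_y0 for x in xs]

K, kappa, x0, y0 = fit_shifted_power_law(
    xs, ys,
    x_shifts=[i / 10 for i in range(10, 22)],  # candidates 1.0 .. 2.1
    y_shifts=[i / 10 for i in range(15, 23)],  # candidates 1.5 .. 2.2
)
print(K, kappa, x0, y0)
```

With real measurements one would likely replace the grid search with a nonlinear least-squares solver and hold out some points to check how well the fit predicts them.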
