https://d-paape.github.io

In my latest blog post, I argue it’s time we had our own "Econometrics," a discipline devoted to empirical rigor.

doomscrollingbabel.manoel.xyz/p/the-missin...

doi.org/10.5281/zeno...

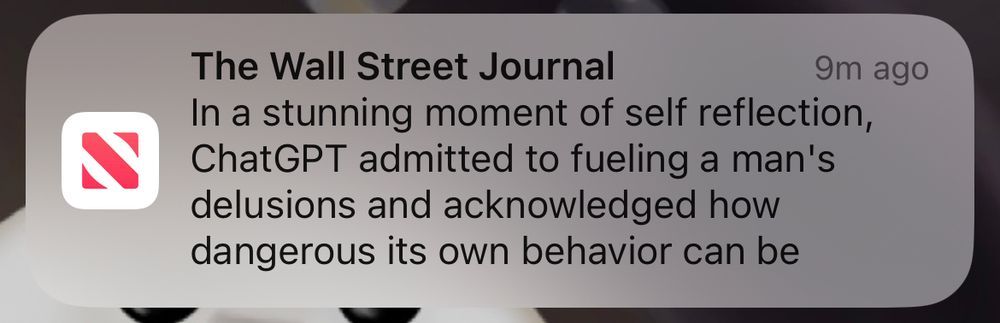

We unpick the tech industry’s marketing, hype, & harm; and we argue for safeguarding higher education, critical thinking, expertise, academic freedom, & scientific integrity.

1/n

www.wheresyoured.at/how-to-argue...

The really interesting part is that the LLM (or LRM or whatever) enthusiasts quoted in the article defend the models' "reasoning" by basically saying that it's actually somehow clever of them to be wrong.
