I am interested in understanding “understanding”, the process or activity by which an agent makes sense of its environment and, in interaction, of and with other agents.

This is something we've been exploring since early 2023 with clembench (clembench.github.io), which we've been continuously maintaining & extending.

If you're interested in LLM post-training techniques and in how to make LLMs better "language users", read this thread, introducing the "LM Playpen".

These are fairly independent research positions that will allow the candidate to build their own profile. Deadline: June 2nd.

Details: tinyurl.com/pd-potsdam-2...

#NLProc #AI 🤖🧠

🤖🧠

www.nytimes.com/2025/05/14/b...

When did "to ablate" take on the meaning "to systematically vary"? I've only noticed this recently, but it seems to be super common now.

arxiv.org/abs/2504.08590

1/2

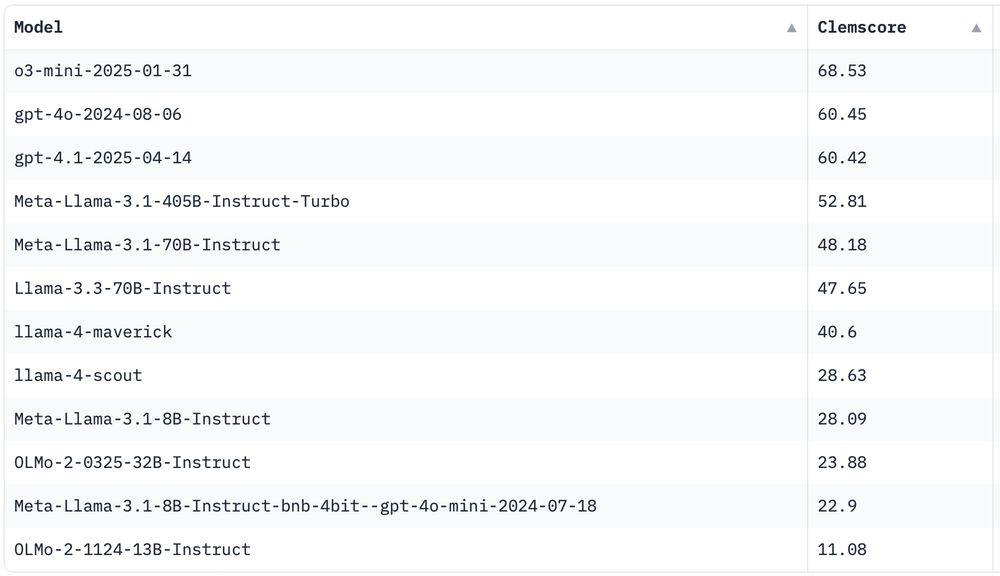

clembench.github.io

Playpen: An Environment for Exploring Learning Through Conversational Interaction

https://arxiv.org/abs/2504.08590

Check it out here: clembench.github.io

Along similar lines: We’re preparing a (complementary) challenge that will focus on exploring interaction for post-training, coming with a rich interaction environment to get things started. Stay tuned!

A 3rd BabyLM👶, as a workshop

@emnlpmeeting.bsky.social

Kept: all

New:

Interaction (education, agentic) track

Workshop papers

More in 🧵

Even more:

arxiv.org/abs/2502.10645

babylm.github.io

#AI #LLMs #MachineLearning #language #cognition #NLP #data

🤖📈

clembench.github.io

I think this is probably an allegory for something, maybe the ending year 2024 or the coming year 2025.

also me: I would really like to end this year with cookie jar zero / “pages remaining in the books I’ve started” zero.

me again, expert problem solver: *creates IMAP folder “unprocessed emails from 2024”, selects all, moves 625 items*