- Founding DevRel Engineer,

- Member of Technical Staff - LLM Inference, and

- a Rust Developer

Apply here: jobs.ashbyhq.com/dottxt

Join .txt as our Founding DevRel Engineer.

Apply here: jobs.ashbyhq.com/dottxt

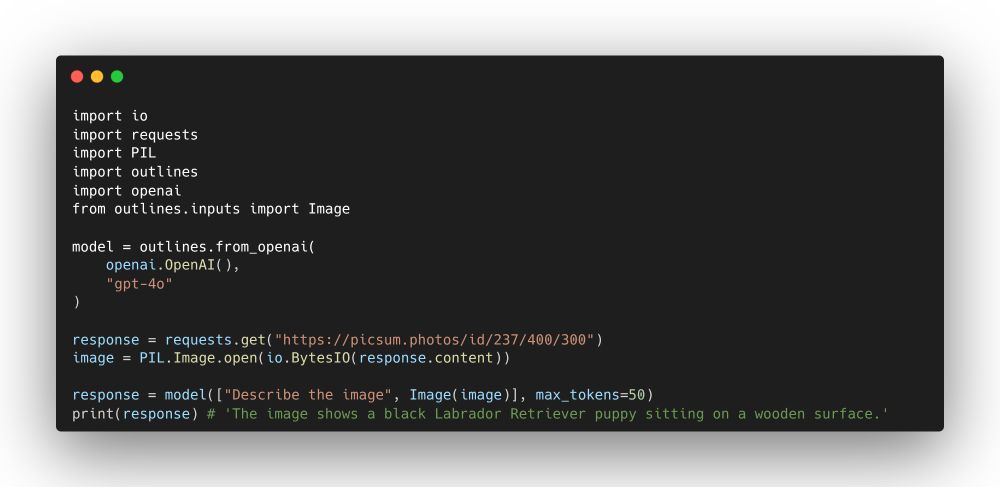

Most libraries give the prompt a special role and separate it from the other inputs. We don’t anymore.

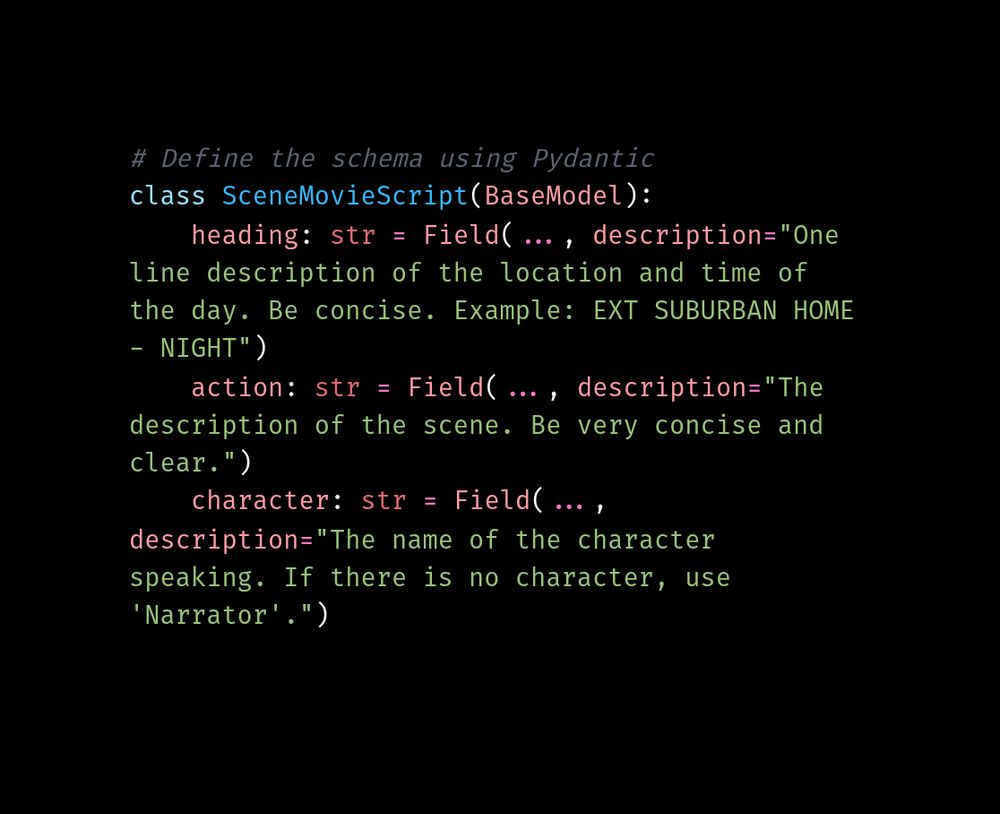

Here's the entire JSON schema he uses.

github.com/aastroza/tvtxt

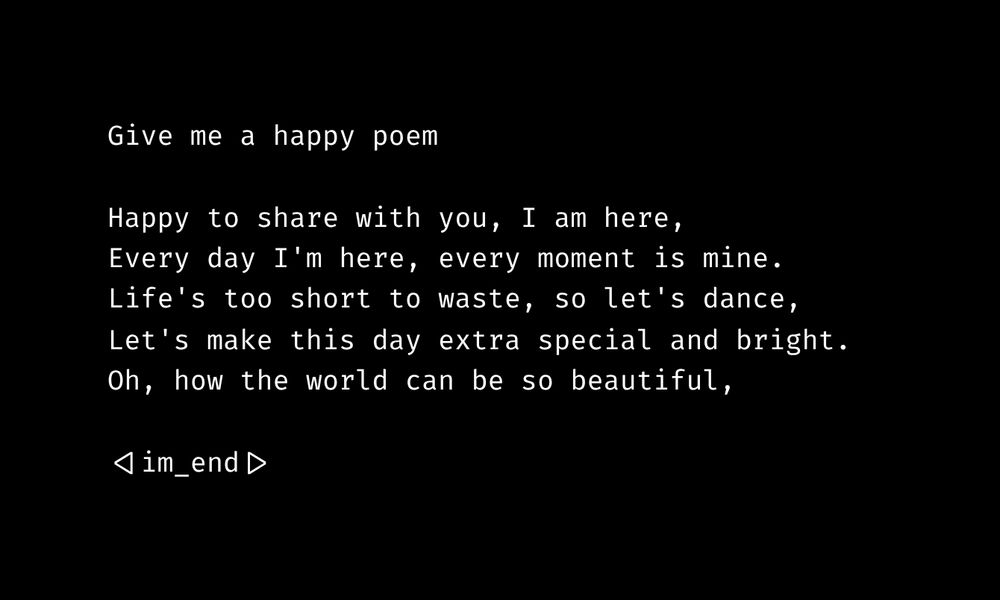

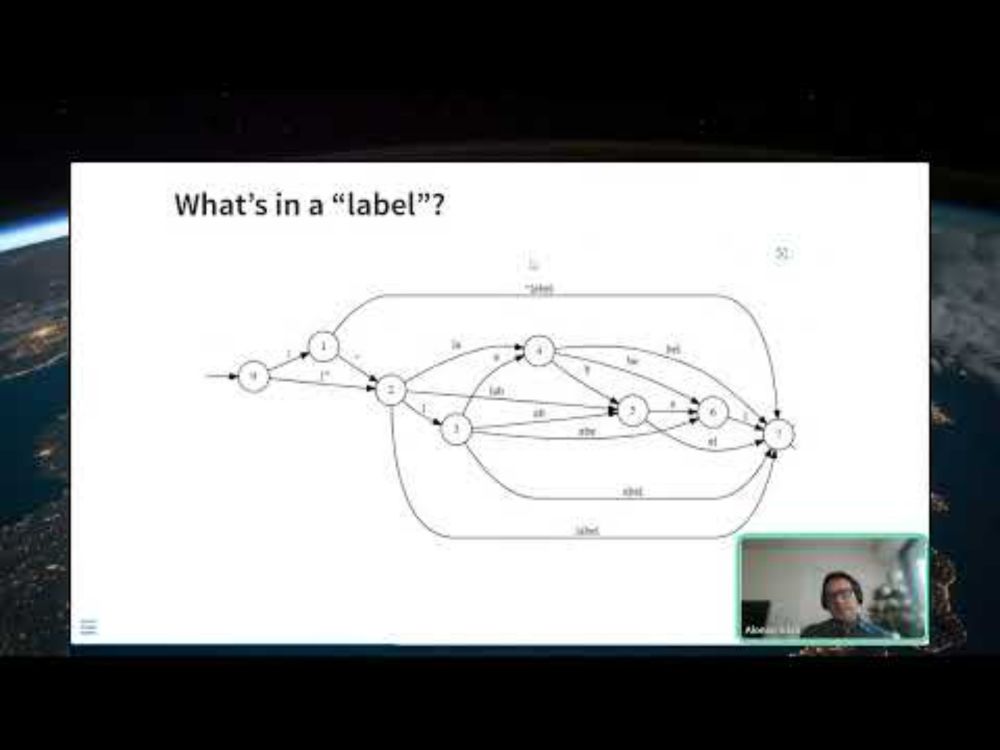

Grammars are cool.

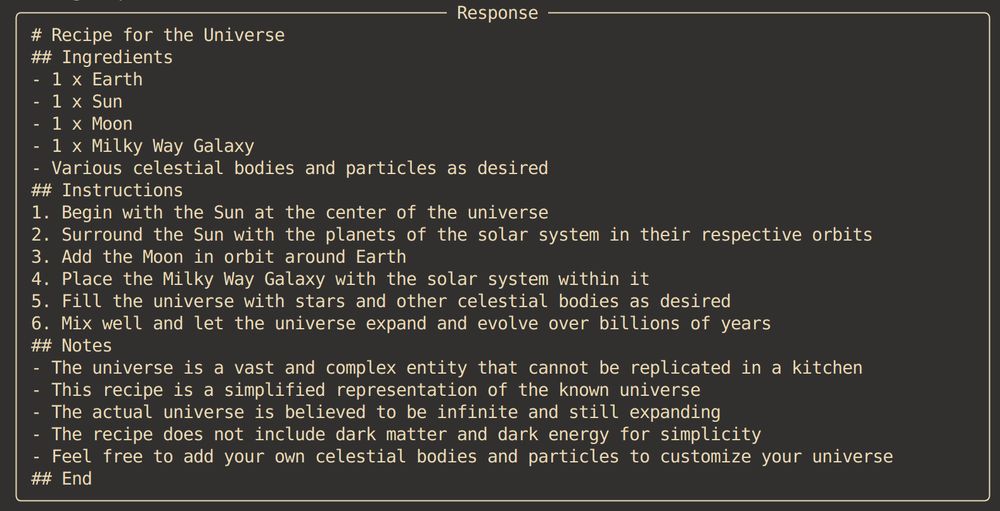

Here's a recipe for the universe.

🎵 won't you join our Discord tonight

Come on by!

discord.gg/ErZ8XnCmkQ

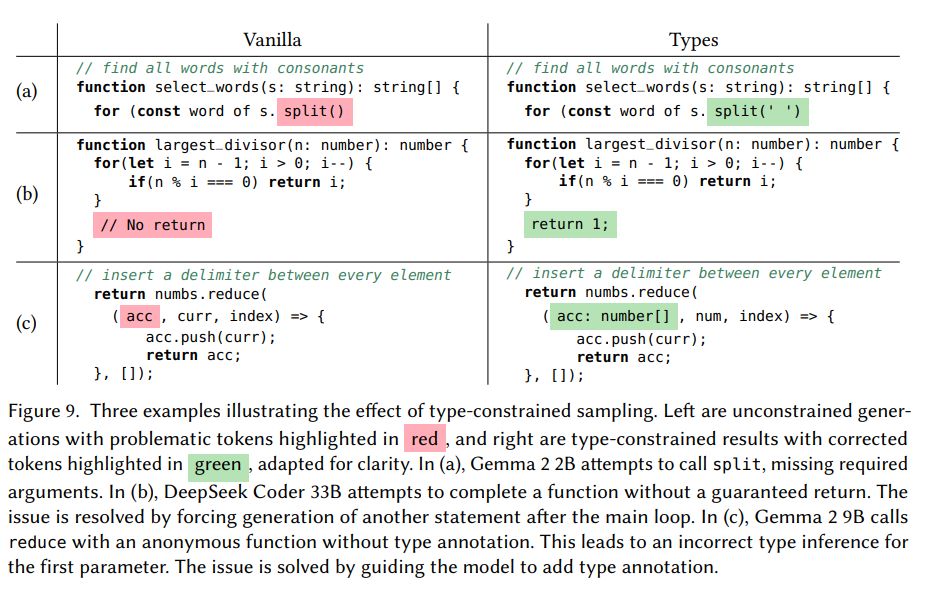

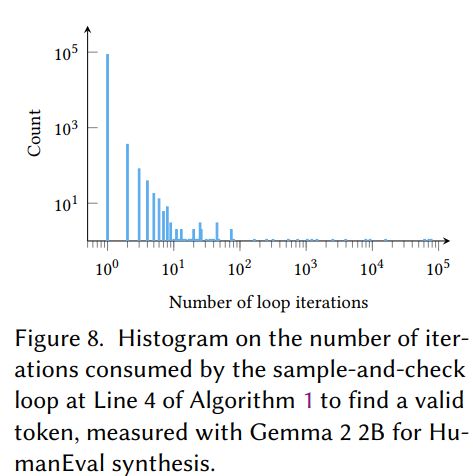

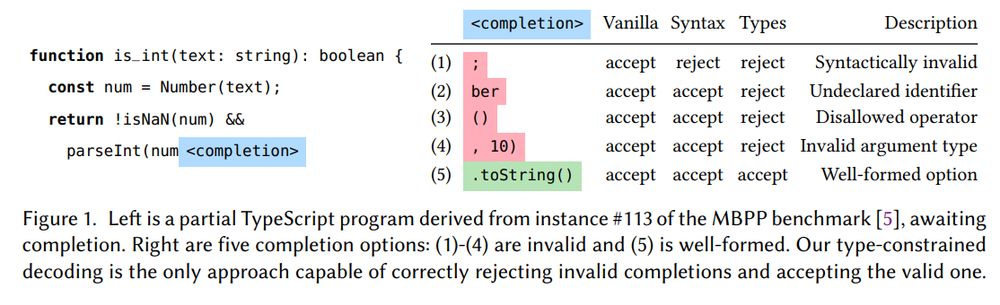

Basically they "guess and check" whether a token would appease the compiler when an LLM is doing code generation.
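A minimal sketch of the "guess and check" idea, not the paper's actual method. The compiler is swapped for a toy bracket checker, and `is_valid_prefix`, `guess_and_check`, and the candidate list are all hypothetical names made up for illustration.

```python
from typing import Callable, Iterable, Optional


def is_valid_prefix(text: str) -> bool:
    """Toy stand-in for a compiler check (an assumption, not a real compiler).

    Brackets must never close out of order. Unclosed brackets are fine,
    because more tokens may still follow.
    """
    pairs = {")": "(", "]": "[", "}": "{"}
    stack = []
    for ch in text:
        if ch in "([{":
            stack.append(ch)
        elif ch in pairs:
            if not stack or stack.pop() != pairs[ch]:
                return False
    return True


def guess_and_check(
    prefix: str,
    ranked_tokens: Iterable[str],
    check: Callable[[str], bool] = is_valid_prefix,
) -> Optional[str]:
    """Return the first candidate token, in the model's preference order,
    that the checker accepts. Return None if every guess is rejected."""
    for token in ranked_tokens:
        if check(prefix + token):
            return token
    return None


# Hypothetical candidates, ordered from most to least likely.
candidates = ["]", ")", " + 1"]
print(guess_and_check("foo(bar[0]", candidates))
```

Here the top guess `]` is rejected because it would close the open `(`, so the second guess `)` is the one that gets kept.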

www.youtube.com/watch?v=94yu...

#PyData #PyDataGlobal @dottxtai.bsky.social @pydata.bsky.social

Link + brief thread below.

It's a cool paper, go take a look.

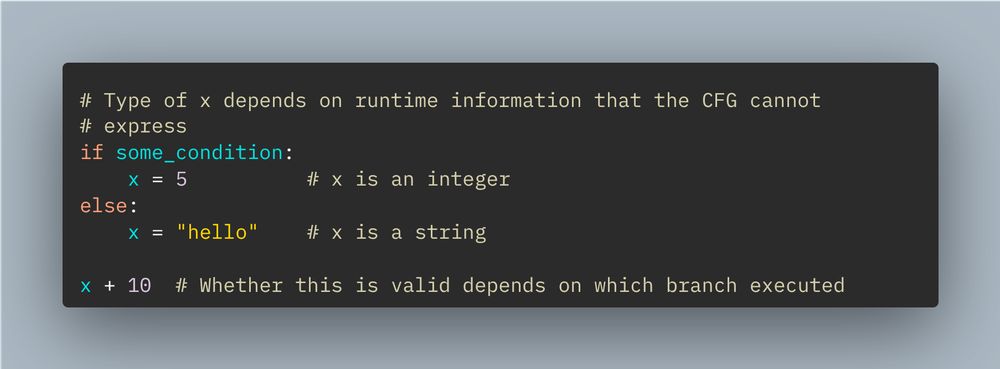

Rather than input -> output, the model does input -> grammar -> output.
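A rough sketch of what input -> grammar -> output could look like, under heavy assumptions: both stages are stubs rather than real LLM calls, the "grammar" is just a regular expression, and `propose_grammar` and `generate_with_grammar` are hypothetical names, not anything from the paper.

```python
import re
from typing import List


def propose_grammar(task: str) -> str:
    """Stage 1 (stub for an LLM call): choose a grammar for this input.

    The grammar here is only a regular expression, picked by a
    hard-coded rule for illustration.
    """
    if "date" in task.lower():
        return r"\d{4}-\d{2}-\d{2}"
    return r"[A-Za-z ]+"


def generate_with_grammar(candidates: List[str], grammar: str) -> str:
    """Stage 2 (stub for constrained decoding): return the first
    candidate that the grammar accepts in full."""
    for text in candidates:
        if re.fullmatch(grammar, text):
            return text
    raise ValueError("no candidate satisfies the grammar")


task = "Extract the date from: 'Shipped on March 5th, 2024'"
grammar = propose_grammar(task)              # input -> grammar
drafts = ["March 5th, 2024", "2024-03-05"]   # hypothetical model drafts
print(generate_with_grammar(drafts, grammar))  # grammar -> output
```

The point of the extra step is that the grammar depends on the input, so the output is checked against a shape chosen for that specific task.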
