Thomas Nowotny

@drtnowotny.bsky.social

630 followers

160 following

36 posts

Professor of Informatics at the University of Sussex, Brighton, UK. President of @cnsorg.bsky.social

I do research in bio-inspired AI and computational neuroscience.

See more at https://profiles.sussex.ac.uk/p206151-thomas-nowotny/about

Thomas Nowotny

@drtnowotny.bsky.social

· Jul 16

Jamie

@neworderofjamie.bsky.social

· Jul 16

FeNN: A RISC-V vector processor for Spiking Neural Network acceleration

Spiking Neural Networks (SNNs) have the potential to drastically reduce the energy requirements of AI systems. However, mainstream accelerators like GPUs and TPUs are designed for the high arithmetic ...

ieeexplore.ieee.org

Thomas Nowotny

@drtnowotny.bsky.social

· Mar 17

Reposted by Thomas Nowotny

Jamie

@neworderofjamie.bsky.social

· Mar 6

Reposted by Thomas Nowotny

Jamie

@neworderofjamie.bsky.social

· Jan 23

Balázs

@mbalazs98.bsky.social

· Jan 23

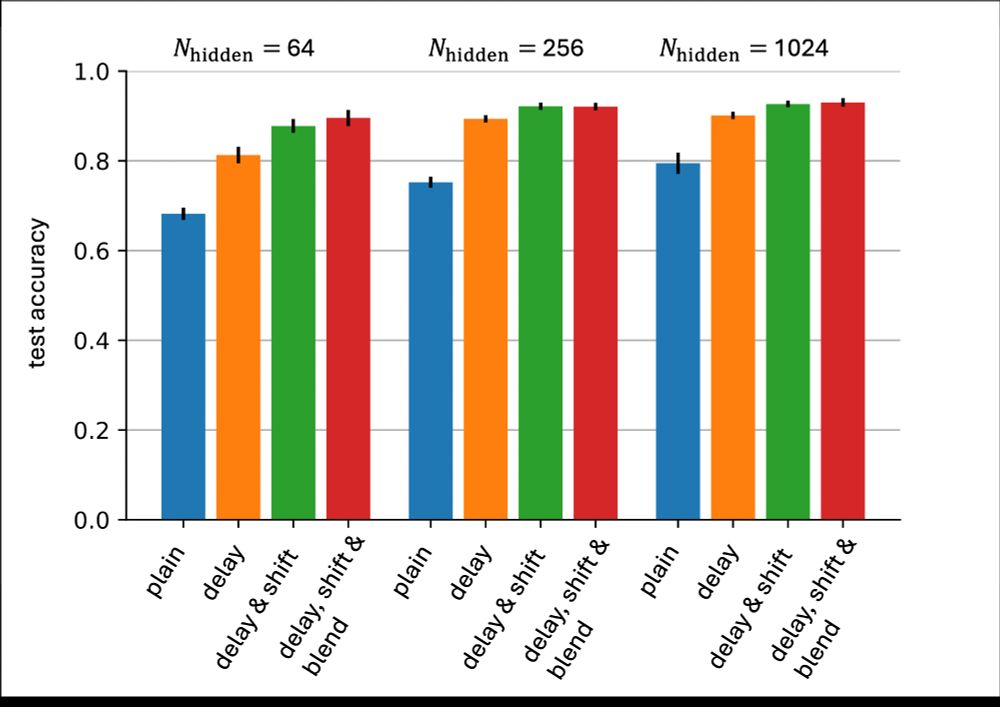

Efficient Event-based Delay Learning in Spiking Neural Networks

Spiking Neural Networks (SNNs) are attracting increased attention as a more energy-efficient alternative to traditional Artificial Neural Networks. Spiking neurons are stateful and intrinsically recur...

arxiv.org

Reposted by Thomas Nowotny

Tim Verstynen

@tdverstynen.bsky.social

· Jan 22

Thomas Nowotny

@drtnowotny.bsky.social

· Jan 21

Loss shaping enhances exact gradient learning with Eventprop in spiking neural networks - IOPscience

Loss shaping enhances exact gradient learning with Eventprop in spiking neural networks. Nowotny, Thomas; Turner, James P; Knight, James C

doi.org

Reposted by Thomas Nowotny

Thomas Nowotny

@drtnowotny.bsky.social

· Dec 20

Reposted by Thomas Nowotny

Blake Richards

@tyrellturing.bsky.social

· Dec 11

Cian O'Donnell

@cianodonnell.bsky.social

· Dec 11