www.youtube.com/@Eleuther_AI...

"BPE Gets Picky: Efficient Vocabulary Refinement During Tokenizer Training" arxiv.org/abs/2409.04599

"BPE Stays on SCRIPT: Structured Encoding for Robust Multilingual Pretokenization" arxiv.org/abs/2505.24689

Our first talk is by @catherinearnett.bsky.social on tokenizers, their limitations, and how to improve them.

Read more about the event: blog.mozilla.org/en/mozilla/d...

And the paper distilling the best practices participants identified: arxiv.org/abs/2501.08365

This work was supported by @mozilla.org, @mozilla.ai, Sutter Hill Ventures, the Natural Sciences and Engineering Research Council of Canada, and Lawrence Livermore National Laboratory.

Paper: arxiv.org/abs/2506.05209

Artifacts: huggingface.co/common-pile

GitHub: github.com/r-three/comm...

EleutherAI's blog post: huggingface.co/blog/stellaa...

Coverage in @washingtonpost.com by @nitasha.bsky.social: www.washingtonpost.com/politics/202...

If you know datasets we should include in the next version, open an issue: github.com/r-three/comm...

Succinctly put, it's data that anyone can use, modify, and share for any purpose.

We are thrilled to announce the Common Pile v0.1, an 8TB dataset of openly licensed and public domain text. We trained 7B models on 1T and 2T tokens, and they match the performance of similar models such as LLaMA 1 & 2.

We are organising the 1st Workshop on Multilingual Data Quality Signals with @mlcommons.org and @eleutherai.bsky.social, held in tandem with @colmweb.org. Submit your research on multilingual data quality!

The submission deadline is 23 June. More info: wmdqs.org

arXiv: arxiv.org/abs/2502.02289

#NLProc #LLM #Evaluation

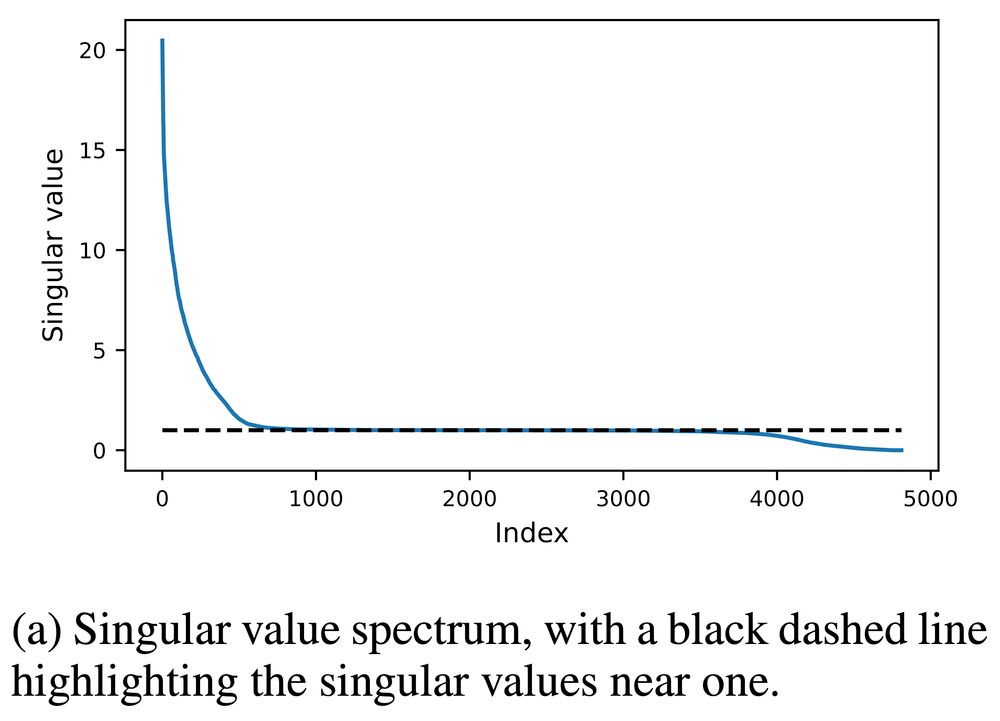

In this new paper, we answer this question by analyzing the training Jacobian, the matrix of derivatives of the final parameters with respect to the initial parameters.

https://arxiv.org/abs/2412.07003
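
To make the definition concrete, here is a minimal pure-Python sketch. It is an illustration under stated assumptions, not the paper's code or experimental setup. It assumes a toy two-parameter quadratic loss, plain gradient descent with hypothetical settings `lr=0.1` and `steps=50`, and estimates the training Jacobian by central finite differences. Entry `J[i][j]` approximates the derivative of final parameter `i` with respect to initial parameter `j`.

```python
# Minimal sketch: estimate the training Jacobian d(theta_final)/d(theta_init)
# by central finite differences. The toy loss, learning rate, and step count
# are illustrative assumptions, not the paper's setup.

def train(theta0, lr=0.1, steps=50):
    """Run plain gradient descent on a toy 2-parameter loss; return final params."""
    x, y = theta0
    for _ in range(steps):
        # Toy loss (assumption): L(x, y) = 0.5*(x - 1)^2 + 2*y^2 + 0.1*x*y
        gx = (x - 1.0) + 0.1 * y  # dL/dx
        gy = 4.0 * y + 0.1 * x    # dL/dy
        x, y = x - lr * gx, y - lr * gy
    return [x, y]


def training_jacobian(train_fn, theta0, eps=1e-5):
    """J[i][j] = d theta_final[i] / d theta_init[j], via central differences."""
    n = len(theta0)
    jac = [[0.0] * n for _ in range(n)]
    for j in range(n):
        plus = list(theta0)
        plus[j] += eps
        minus = list(theta0)
        minus[j] -= eps
        f_plus, f_minus = train_fn(plus), train_fn(minus)
        for i in range(n):
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2 * eps)
    return jac


J = training_jacobian(train, [0.5, -0.3])
print(J)
```

The loss here is quadratic with Hessian `H = [[1, 0.1], [0.1, 4]]`. Each gradient step is therefore an affine map of the parameters, and the exact training Jacobian is `(I - lr*H)^steps`. The finite-difference estimate reproduces this up to rounding. For a real network, finite differences would need two full training runs per parameter, so one would more plausibly differentiate through the training loop with automatic differentiation (for example `jax.jacfwd`).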

If you're interested in helping out with this kind of research, check out the concept-editing channel on our Discord.

This work is by Thomas Marshall, Adam Scherlis, and @norabelrose.bsky.social. It is funded in part by a grant from OpenPhil.

Is a linear representation a linear function (that preserves the origin) or an affine function (that does not)? This distinction matters in practice. arxiv.org/abs/2411.09003
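
Here is a minimal Python sketch of the distinction. The weight vector `w` and bias `b` are hypothetical values chosen for illustration and are not taken from the paper. A linear map sends the zero vector to 0 and is additive. An affine map with a nonzero bias does neither.

```python
# Minimal sketch of the linear-vs-affine distinction. The weight vector w and
# bias b used below are arbitrary illustrative values (assumptions).

def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def linear(h, w):
    """Linear map f(h) = w . h: sends the zero vector to 0."""
    return dot(w, h)


def affine(h, w, b):
    """Affine map g(h) = w . h + b: sends the zero vector to b."""
    return dot(w, h) + b


def is_additive(f, u, v, tol=1e-12):
    """Check f(u + v) == f(u) + f(v); holds for linear maps, fails for affine ones with b != 0."""
    s = [a + c for a, c in zip(u, v)]
    return abs(f(s) - (f(u) + f(v))) < tol


w, b = [2.0, -1.0], 0.5
zero, u, v = [0.0, 0.0], [1.0, 3.0], [-2.0, 0.5]
print(linear(zero, w), affine(zero, w, b))
print(is_additive(lambda h: linear(h, w), u, v))
print(is_additive(lambda h: affine(h, w, b), u, v))
```

With these values, `linear(zero, w)` is `0.0` and `affine(zero, w, b)` is `0.5`. The additivity check passes for the linear map and fails for the affine one, because the two sides differ by exactly `b`.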