Apply by *December 15* for full consideration.

Please RT for reach :)

If you've changed your name and dealt with updating publications, we want to hear your experience. Any reason counts: transition, marriage, cultural reasons, etc.

forms.cloud.microsoft/e/E0XXBmZdEP

📢 I'm recruiting PhD students in Computer Science or Information Science @cornellbowers.bsky.social!

If you're interested, apply to either department (yes, either program!) and list me as a potential advisor!

Our student-run application feedback program will be open from October 20 through November 1, 2025.

All applicants, especially those from historically underrepresented groups or who have faced barriers in higher ed, are highly encouraged to apply.

Call for Papers: facctconference.org/2026/cfp

Important info in thread →

Apply to the Graduate Applicant Support Program by Oct 13 to receive feedback on your application materials:

computersciencelaw.org/2026

We have a great selection of speakers, and alongside talks, we’ll feature student posters + demos.

🔗 Details, registration, and poster submission: dli.tech.cornell.edu/nyc-privacy-...

#STS #4S

@mfranchi.bsky.social @jennahgosciak.bsky.social and I organize an EAAMO Bridges working group on Urban Data Science and we are looking for new members!

Fill out the interest form on our page: urban-data-science-eaamo.github.io

Given the sheer scale of these events, it's really helpful to see what caught people's eye at these conferences...

I'll aim to post new ones throughout the summer and will tag all the authors I can find on Bsky. Please feel welcome to chime in with thoughts / paper recs / etc.!!

🧵⬇️:

🔗: arxiv.org/pdf/2506.04419

We’re thrilled to pre-celebrate the incredible research 📚 ✨ that will be presented starting Monday next week in Vienna 🇦🇹 !

Start exploring 👉 aclanthology.org/events/acl-2...

#NLProc #ACL2025NLP #ACLAnthology

@farhana-shahid.bsky.social & I are conducting research on the peer review experience of research by and about the Global Majority.

participation form: docs.google.com/forms/d/e/1F...

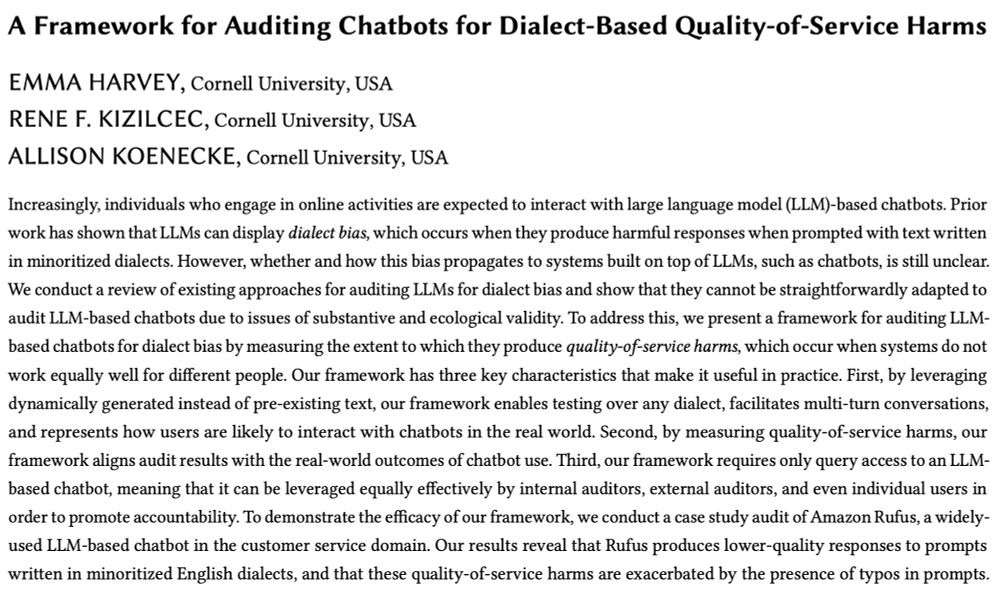

Check it out, and don't forget to read @emmharv.bsky.social's award-winning FAccT paper with @allisonkoe.bsky.social and @kizilcec.bsky.social: dl.acm.org/doi/10.1145/...

Most conjoints estimate average effects for each attribute.

But what if the effect of one attribute depends on the others?

This paper has got you covered!

I recorded my presentation on how Technology Design Students use GenAI in class projects, accelerating design iteration but causing negative sentiment about learning and reflection skills.

supercut.ai/share/cornel...
