I will be supervised by @manoelhortaribeiro.bsky.social and affiliated with Princeton CITP.

It's only been a month, but the energy feels amazing. Very grateful for such a welcoming community. Excited for what’s ahead! 🚀

In a new paper, we introduce Bonsai, a tool to create feeds based on stated preferences, rather than predicted engagement.

arxiv.org/abs/2509.10776

Read it at: t.co/MipJKWbb1h.

Thanks @andyluttrell.bsky.social @prpietromonaco.bsky.social @spspnews.bsky.social for your invitation and feedback!

To understand the scale of such misinformation, our #EMNLP2025 paper introduces MythTriage, a scalable system to detect OUD myths 🧵

Trained on 15T tokens in 1,000+ languages, it’s built for transparency, responsibility & the public good.

Read more: actu.epfl.ch/news/apertus...

In this case, either AI or humans (paid if they were persuasive) tried to convince quiz takers (paid for accuracy) to pick either right or wrong answers on a quiz.

Check out our website: sites.google.com/andrew.cmu.e...

Call for submissions (extended abstracts) due June 19, 11:59pm AoE

#COLM2025 #LLMs #NLP #NLProc #ComputationalSocialScience

🤖 Key takeaway: LLMs can already reach superhuman persuasiveness, especially when given access to personalized information

www.nature.com/articles/s41...

Read more on persuasive chatbots in my rather terrifying piece for @nature.com 🧪

www.nature.com/articles/d41...

airtable.com/appGKlSVeXni...

Collecting this information is supremely helpful to organize and facilitate a response.

Details: www.cs.au.dk/~clan/openings

Deadline: May 1, 2025

Please boost!

cc: @aicentre.dk @wikiresearch.bsky.social

Submit your abstract now: www.ic2s2-2025.org/submit-abstr... and join us in Norrköping, Sweden.

Tutorials announcement coming soon!

Applied to social media, it can produce large-scale and granular estimates of behavior change wrt collective action.

github.com/ariannap13/e...

@nerdsitu.bsky.social @itu.dk @carlsbergfondet.dk

Check it out: arxiv.org/abs/2501.07368

@nerdsitu.bsky.social

🤖 I'll be presenting our work on AI persuasion [1] tomorrow morning at 11:15 in session 1A. Come say hello!

[1] arxiv.org/abs/2403.14380

arxiv.org/abs/2412.17128

They are now professors at EPFL. Welcome!!! 🤗🚀

actu.epfl.ch/news/appoint...

Timely large-scale study mobilising an army of scholars across EPFL, including my small contribution to the evaluation efforts ✍️

More below ⬇️

Post Guidance lets moderators prevent rule-breaking by triggering interventions as users write posts!

We implemented PG on Reddit and tested it in a massive field experiment (n=97k). It became a feature!

arxiv.org/abs/2411.16814

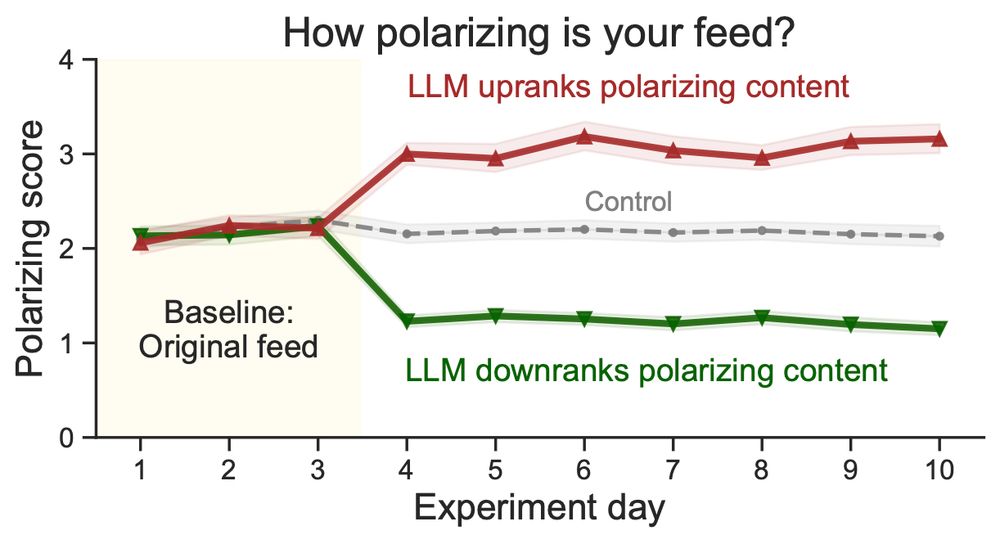

We ran a field experiment on X/Twitter (N=1,256) using LLMs to rerank content in real-time, adjusting exposure to polarizing posts. Result: Algorithmic ranking impacts feelings toward the political outgroup! 🧵⬇️

Here is number 2! More amazing folks to follow! Many students and the next gen represented!

go.bsky.app/GoEyD7d

go.bsky.app/CYmRvcK
