Let's take a trip down memory lane!

[1/N]

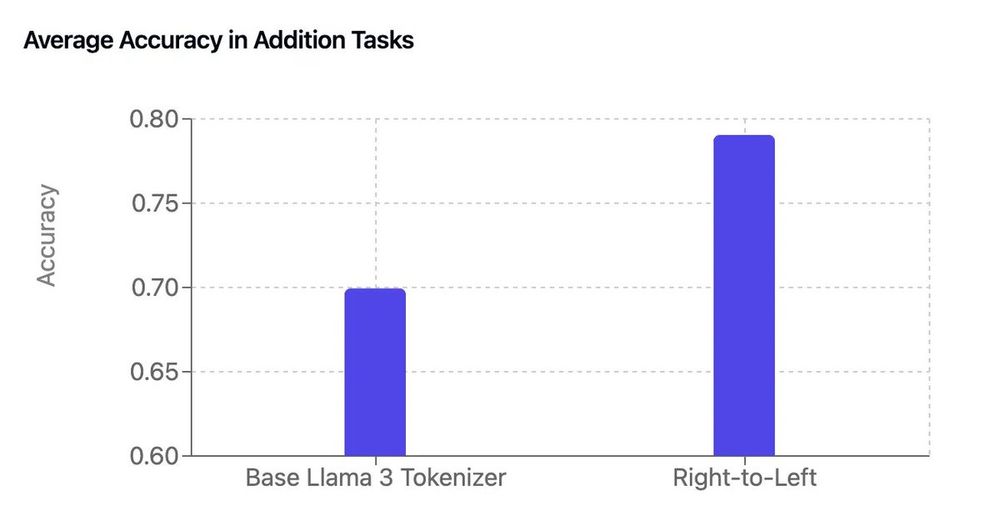

By adding a few lines of code to the base Llama 3 tokenizer, he got a free boost in arithmetic performance 😮

[thread]

➡️ To install: `pip install gcmt`
