https://hazeldoughty.github.io

I’ll retrace some history and show how precognition enables assistive downstream tasks and representation learning for procedural understanding.

📅 09:45 Thursday 12th June

📍 208 A

👉 sites.google.com/view/ivise2025

Attending #CVPR2025

Multiple opportunities to learn about the most highly-detailed video dataset with a digital twin, long-term object tracks, VQA,…

hd-epic.github.io

1. Find any of the 10 authors attending @cvprconference.bsky.social

– identified by this badge.

🧵

Be the 🥇st to win HD-EPIC VQA challenge

hd-epic.github.io/index#vqa-be...

DL 19 May

Winners announced @cvprconference.bsky.social #EgoVis workshop

Also, HD-EPIC VQA challenge is open [Leaderboard closes 19 May]... can you be 1st winner?

codalab.lisn.upsaclay.fr/competitions...

Btw, HD-EPIC was accepted @cvprconference.bsky.social #CVPR2025

HD-EPIC: A Highly-Detailed Egocentric Video Dataset

hd-epic.github.io

arxiv.org/abs/2502.04144

New collected videos

263 annotations/min: recipe, nutrition, actions, sounds, 3D object movement & fixture associations, masks.

26K VQA benchmark to challenge current VLMs

1/N

See how your model stacks up against Gemini and LLaVA Video on a wide range of video understanding tasks.

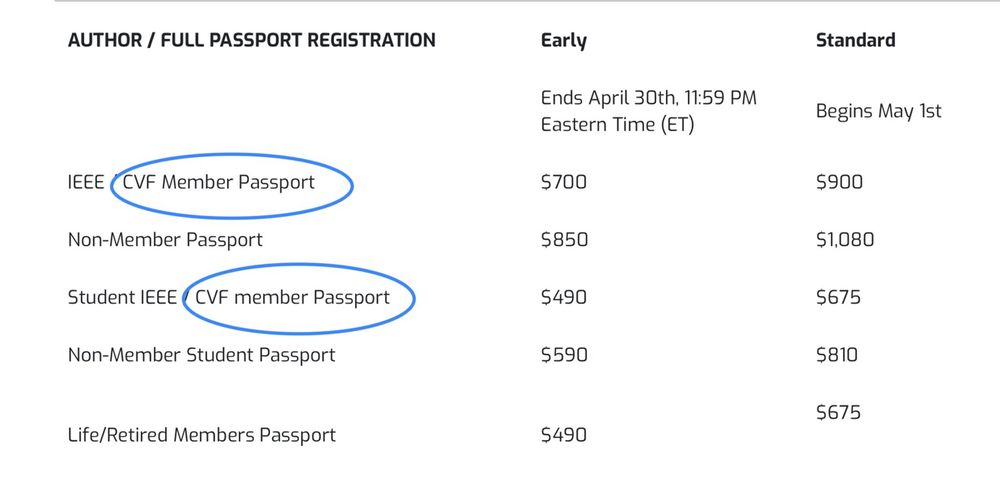

CVF: thecvf.com

Egocentric videos 👩‍🍳 with very rich annotations: the perfect testbed for many egocentric vision tasks 👌

arxiv.org/abs/2502.04144

hd-epic.github.io

What makes the dataset unique is the vast detail contained in the annotations with 263 annotations per minute over 41 hours of video.

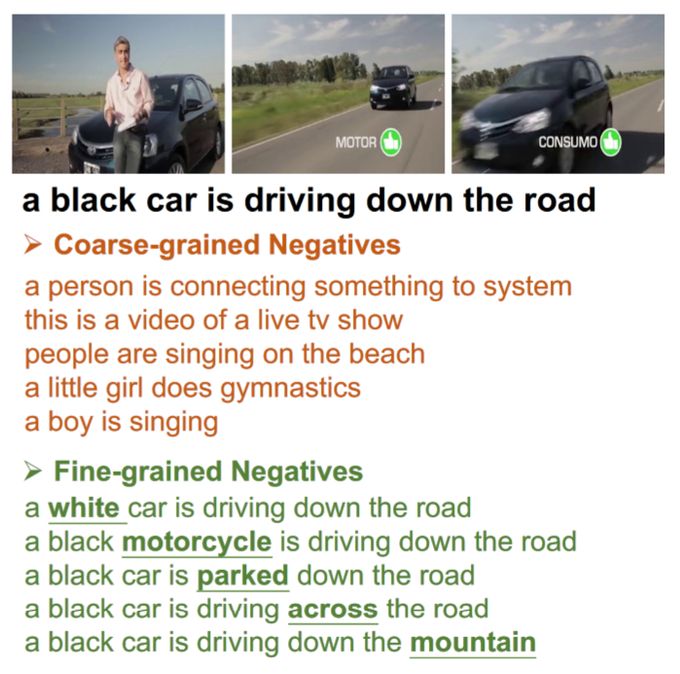

ArXiv: arxiv.org/abs/2410.12407

We go beyond coarse-grained retrieval and explore whether models can discern subtle single-word differences in captions.

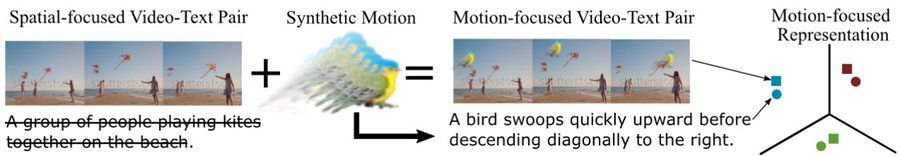

We remove the spatial focus of video-language representations and instead train representations to have a motion focus.

Permanent Assistant Professor (Lecturer) position in Computer Vision @bristoluni.bsky.social [DL 6 Jan 2025]

This is a research+teaching permanent post within MaVi group uob-mavi.github.io in Computer Science. Suitable for strong postdocs or exceptional PhD graduates.

t.co/k7sRRyfx9o

1/2
