hirokatsukataoka.net/temp/presen/...

This includes the following papers:

- Industrial Synthetic Segment Pre-training arxiv.org/abs/2505.13099

- S3OD: Towards Generalizable Salient Object Detection with Synthetic Data arxiv.org/abs/2510.21605

Even Gemini-2.5-Pro reaches only 73% vs. a 97% human score, revealing a key issue in space-time tasks.

Project: masatate.github.io/HanDyVQA-pro...

Outperforms several SotA in zero-shot classification, retrieval, robustness, and compositional tasks!

arxiv.org/abs/2511.23170

- Project: kodaikawamura.github.io/Domain_Unlea...

- Paper: arxiv.org/abs/2510.08132

hirokatsukataoka.net/temp/presen/...

Compiled during ICCV in collaboration with LIMIT.Lab, cvpaper.challenge, and Visual Geometry Group (VGG), this report offers meta insights into the trends and tendencies observed at this year’s conference.

#ICCV2025

Here are my accepted papers and roles at this year’s #ICCV2025 / @iccv.bsky.social .

Please check out the threads below:

cambridgecv-workshop-2025sep.limitlab.xyz

--

- OpenReview: openreview.net/group?id=the...

- Website: iccv2025-limit-workshop.limitlab.xyz

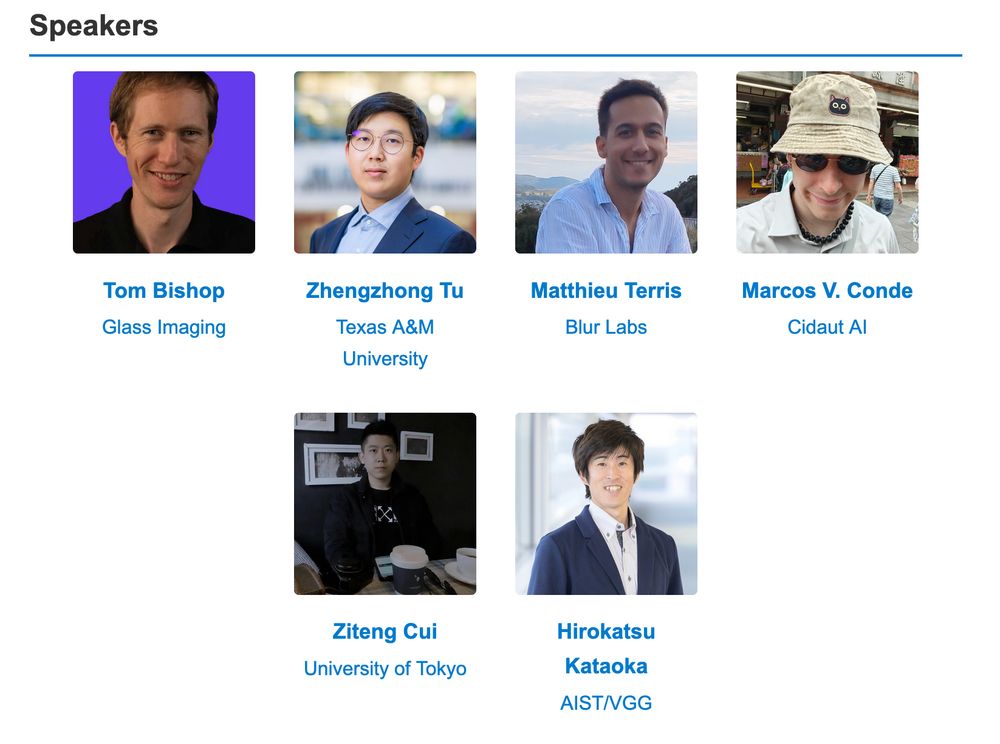

◆ LIMIT Workshop (19 Oct, PM): iccv2025-limit-workshop.limitlab.xyz

◆ FOUND Workshop (19 Oct, AM): iccv2025-found-workshop.limitlab.xyz

We warmly invite you to attend these workshops at ICCV 2025 in Hawaii!

supercamerai.github.io

Project page: dahlian00.github.io/AnimalCluePa...

Dataset: huggingface.co/risashinoda

Press: www.aist.go.jp/aist_j/press...

Project page: dahlian00.github.io/AgroBenchPage/

Paper: arxiv.org/abs/2507.20519

Code: github.com/dahlian00/Ag...

Dataset: huggingface.co/datasets/ris...

hirokatsukataoka.net/temp/presen/...

Compiled during CVPR in collaboration with LIMIT.Lab, cvpaper.challenge, and Visual Geometry Group (VGG), this report offers meta insights into the trends and tendencies observed at this year’s conference.

#CVPR2025

limitlab.xyz

We’ve established "LIMIT.Lab," a collaboration hub for building multimodal AI models (images, videos, 3D, and text) when any resource, such as compute, data, or labels, is constrained.

Formula-driven supervised learning (FDSL) has surpassed the vision foundation model "SAM" on industrial data. It delivers strong transfer performance to industry while minimizing IP-related concerns.

arxiv.org/abs/2505.13099

cvpr.thecvf.com/Conferences/...

7 years later, I am very grateful for your *50-minute* talk and full-day visit to my group...

Thanks for the personal touch ☺️

2/2

This was a very motivating talk on training from limited synthetic data.

Thanks for bringing up our first encounter #CVPR2018

....

1/2

--

Formula-Supervised Sound Event Detection: Pre-Training Without Real Data, ICASSP 2025.

- Paper: arxiv.org/abs/2504.04428

- Project: yutoshibata07.github.io/Formula-SED/

# The shared images come from our paper

Project Page: vgg-t.github.io

Code & Weights: github.com/facebookrese...

My research has reached 5,000 citations on Google Scholar! This wouldn’t have been possible without the support of my co-authors, colleagues, mentors, and the entire research community.

Looking forward to the next phase of collaboration! 🙌

Paper: arxiv.org/abs/2502.01490

Give it a read, or paste it into NotebookLM to listen if you prefer!

blog.google/technology/a...

Our paper, "Efficient Load Interference Detection with Limited Labeled Data," has won the SICE International Young Authors Award (SIYA) 2025🎉🎉🎉 This work belongs to the FDSL pre-training family and applies it to real-world advanced logistics using forklifts.
