DNS-HyXNet = xLSTM for DNS tunnel detection.

DNS-HyXNet achieves 99.99% accuracy, with F1-scores exceeding 99.96% and a per-sample detection latency of just 0.041 ms, confirming its scalability and real-time readiness. Wow!

“Across four PDEs under matched size and budget, xLSTM-PINN consistently reduces MSE, RMSE, MAE, and MaxAE with markedly narrower error bands.”

“cleaner boundary transitions with attenuated high-frequency ripples”

2015-2025: turns out that there's hardly any improvement. AI bubble?

GPT is at 70% for this task, whereas the best methods get close to 85%.

Leaderboard: huggingface.co/spaces/ml-jk...

P: arxiv.org/abs/2511.14744

X-TRACK based on xLSTM achieves SOTA.

“Compared to state-of-the-art baselines, X-TRACK achieves performance improvement by 79% at the 1-second prediction and 20% at the 5-second prediction in the case of highD”

Again xLSTM excels.

PMP leverages xLSTM to denoise actions for robotics.

“PMP not only achieves state-of-the-art performance but also offers significantly faster training and inference.”

xLSTM excels in robotics.

“On the Jigsaw Toxic Comment benchmark, xLSTM attains 96.0% accuracy and 0.88 macro-F1, outperforming BERT by 33% on threat and 28% on identity_hate categories, with 15× fewer parameters and <50 ms inference latency.”

xLSTM is fast!

"gLSTM mitigates sensitivity over-squashing and capacity over-squashing."

"gLSTM achieves comfortably state of the art results on the Diameter and Eccentricity Graph Property Prediction tasks"

"The xLSTM-based IDS achieves an F1-score of 98.9%, surpassing the transformer-based model at 94.3%."

xLSTM is faster than Transformers when using the fast kernels provided in github.com/nx-ai/mlstm_... and github.com/NX-AI/flashrnn

"SWAX, a hybrid consisting of sliding-window attention and xLSTM."

"SWAX trained with stochastic window sizes significantly outperforms regular window attention both on short and long-context problems."

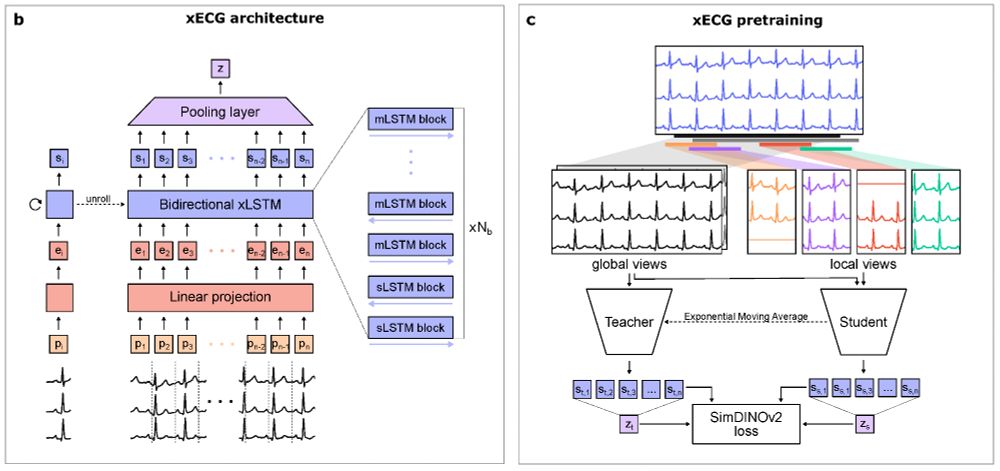

"xECG achieves superior performance over earlier approaches, defining a new baseline for future ECG foundation models."

xLSTM is perfectly suited for time series prediction as shown by TiRex.

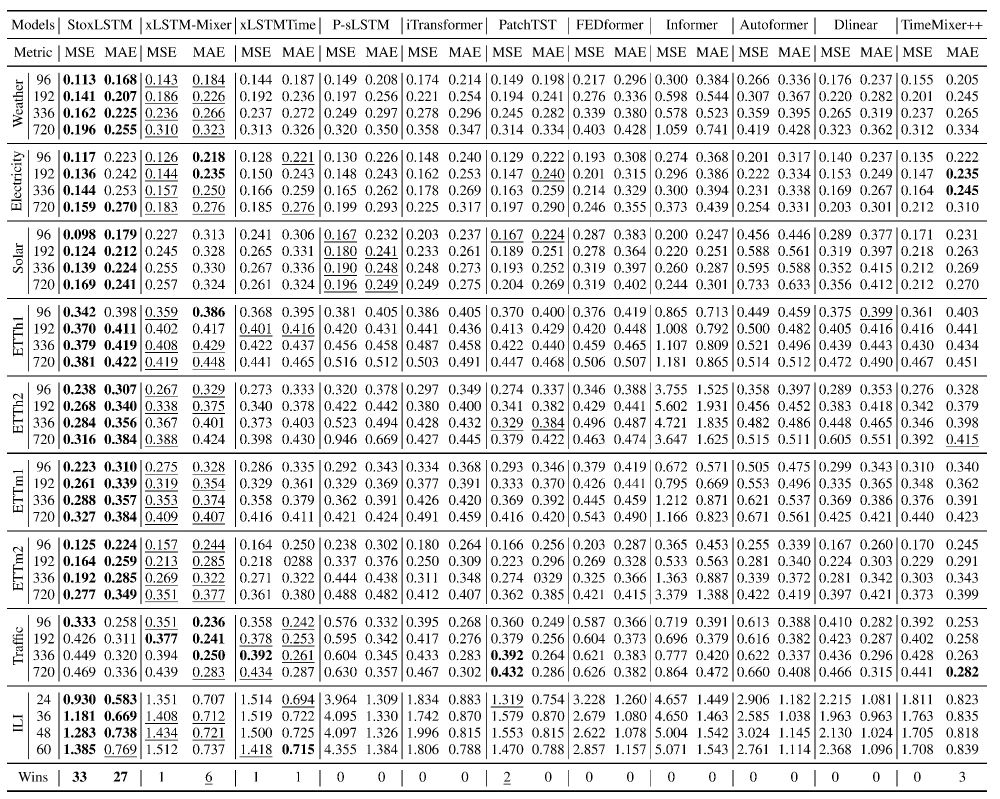

Introduces "stochastic xLSTM" (StoxLSTM).

"StoxLSTM consistently outperforms state-of-the-art baselines with better robustness and stronger generalization ability."

We know that xLSTM is king at time series from our TiRex.

"Empirical results showed a 23% MAE reduction over the original STN and a 30% improvement on unseen data, highlighting strong generalization."

xLSTM shines again in time series forecasting.

xLSTM has superior performance vs. Mamba and Transformers but is slower than Mamba.

With the new Triton kernels, xLSTM is faster than Mamba at training and inference: arxiv.org/abs/2503.13427 and arxiv.org/abs/2503.14376

Another success story of xLSTM. MEGA: xLSTM with Multihead Exponential Gated Fusion.

“Experiments on 3 benchmarks show that MEGA outperforms state-of-the-art baselines with superior accuracy and efficiency”

“In our results, xLSTM showcases state-of-the-art accuracy, outperforming 23 popular anomaly detection baselines.”

Again, xLSTM excels in time series analysis.

"HopaDIFF, leveraging a novel cross-input gate attentional xLSTM to enhance holistic-partial long-range reasoning"

"HopaDIFF achieves state-of-the-art results on RHAS133 in diverse evaluation settings."

Paper: arxiv.org/abs/2505.23719

Code: github.com/NX-AI/tirex

➡️ Outperforms models by Amazon, Google, Datadog, Salesforce, Alibaba

➡️ industrial applications

➡️ limited data

➡️ embedded AI and edge devices

➡️ Europe is leading

Code: lnkd.in/eHXb-XwZ

Paper: lnkd.in/e8e7xnri

shorturl.at/jcQeq

Lots of fun anecdotes and easily accessible basics on AI!

www.beneventopublishing.com/ecowing/prod...

"xLSTM model demonstrated better generalization capabilities to new operators. The results clearly show that for this type of classification, the xLSTM model offers a slight edge over Transformers."

We show how trajectories of spatial dynamical systems can be modeled in latent space by leveraging IDENTIFIERS.

📚Paper: arxiv.org/abs/2502.12128

💻Code: github.com/ml-jku/LaM-S...

📝Blog: ml-jku.github.io/LaM-SLidE/

1/n

#CombinatorialOptimization #StatisticalPhysics #DiffusionModels
