I've started a company!

Introducing Sophont

We’re building open multimodal foundation models for the future of healthcare. We need a DeepSeek for medical AI, and @sophontai.bsky.social will be that company!

Check out our website & blog post for more info (link below)

brain-image alignment | brain scaling laws | brain-to-text decoding

Sharing quick takeaways on these papers alongside raw technical notes: paulscotti.substack.com/p/neuroai-im...

A personal retrospective on my journey with open science communities and how Stability AI’s role in the “science-in-the-open” movement was such a pivotal phenomenon: paulscotti.com/blog/leaving...

but maybe the brain actually does do self-attention + feed-forward operations

astrocytes could enable a biologically plausible solution to transformer mechanisms in the brain

www.pnas.org/doi/pdf/10.1...

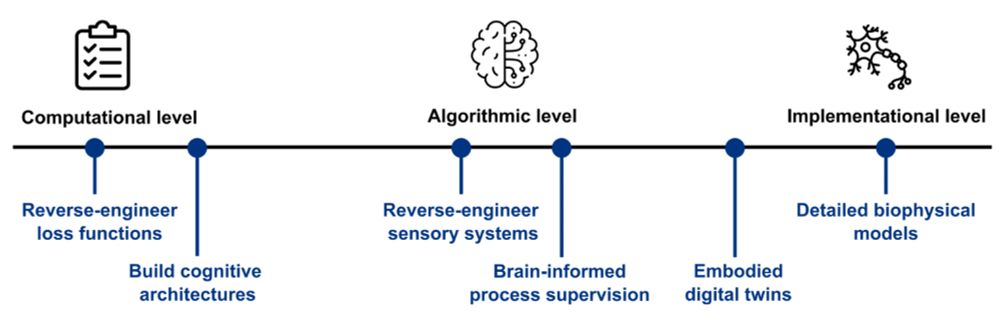

Read more: e11.bio/news/roadmap

Foundation brain models

Sensory and embodied digital twins

Brain data to improve AI models

Neuro-inspired mech. interpretability

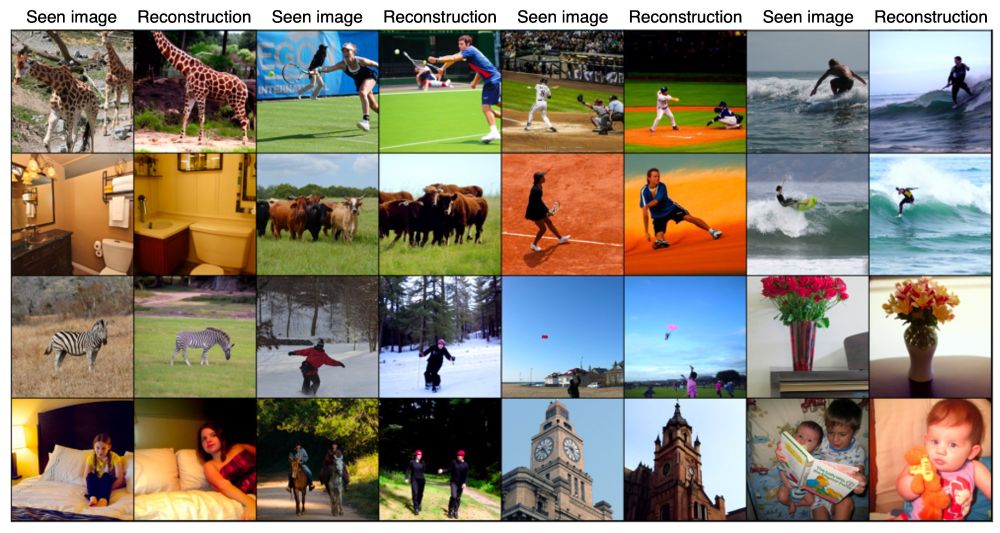

We reconstruct seen images from fMRI activity using only 1 hour of training data.

This is possible by first pretraining a shared-subject model using other people's data, and then fine-tuning on a held-out subject with only 1 hr of data.

arxiv.org/abs/2403.11207

My role is to lead the MedARC Neuroimaging & AI Lab: medarc.ai/fmri

The lab is remote, open-source, and open to the public to join. Tons of potential to rethink what a research lab is and benefit from crowd-sourced intelligence.

Updated camera-ready paper is also now live on arXiv: arxiv.org/abs/2305.182...

Includes new expts, appendix figures, more references to other work 🧠📈
