Intersecting mechanistic interpretability and health AI 😎

We trained and interpreted sparse autoencoders on MAIRA-2, our radiology MLLM. We found a range of human-interpretable radiology reporting concepts, but also many uninterpretable SAE features.

Read more in our blog:

blog.neurips.cc/2025/07/16/n...

This led us to write a position paper (accepted at #ICML2025) that attempts to identify the problem and to propose a solution.

arxiv.org/abs/2402.02870

👇🧵

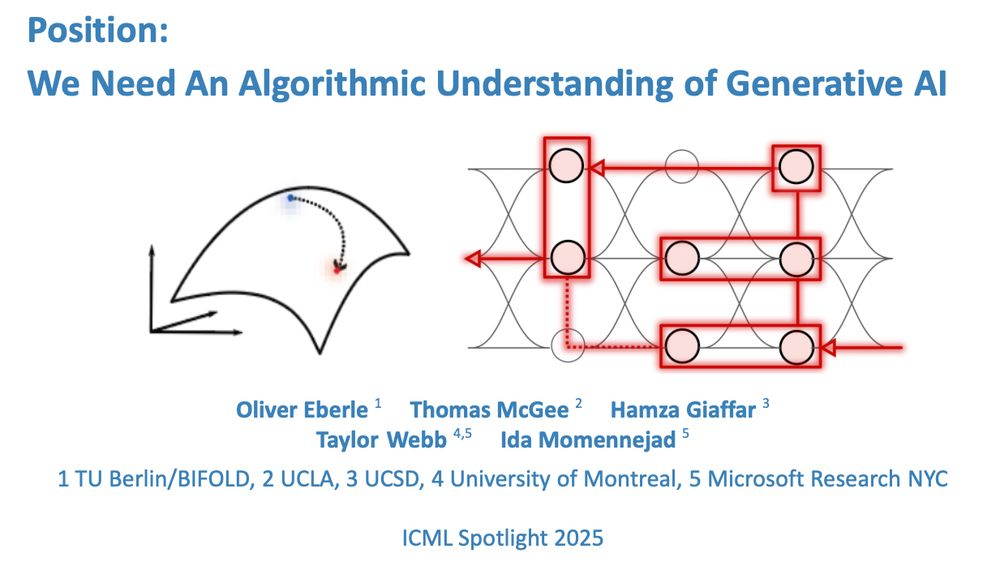

Position: We Need An Algorithmic Understanding of Generative AI

What algorithms do LLMs actually learn and use to solve problems?🧵1/n

openreview.net/forum?id=eax...

Linear representation hypothesis discourse needs more differential geometry, imo

irenechen.net/sail2025/

🔹Keynote presentations

🔹Panel discussions

🔹Year in Review

🔹Poster sessions

🔹Doctoral Symposium lightning talks

👉 Schedule: chil.ahli.cc/attend/sched...

👉 Register: ahli.cc/chil25-regis...