Interested in AI, RecSys, Maths.

Trains and fine-tunes models.

januverma.substack.com

Link: open.substack.com/pub/januverm...

Often, the most significant performance gains come from enriching models with related, contextual information. Models improve when they are exposed to auxiliary signals that deepen their understanding of the task.

open.substack.com/pub/januverm...

tinyurl.com/mrytfn86

#MathSky #WomenInSTEM #HistSci 🧮🧪

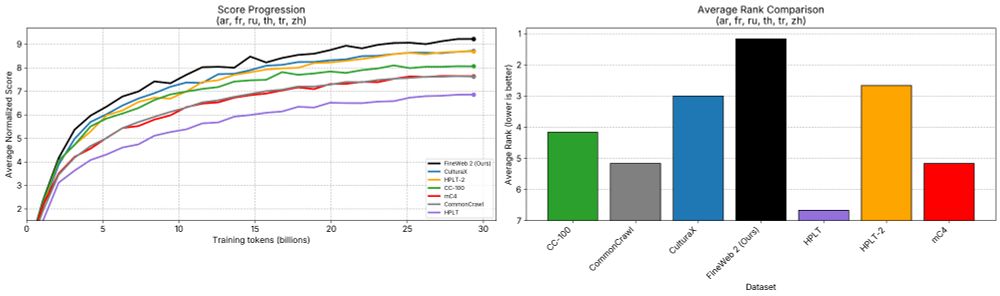

FineWeb 2 extends the data-driven approach to pre-training dataset design introduced in FineWeb 1, and now covers 1893 languages/scripts.

Details: huggingface.co/datasets/Hug...

A detailed open-science tech report is coming soon

youtu.be/2AthqCX3h8U