jennhu.github.io

Our PhD students also run an application mentoring program for prospective students. Mentoring requests due November 15.

tinyurl.com/2nrn4jf9

Topics of interest include pragmatics, metacognition, reasoning, & interpretability (in humans and AI).

Check out JHU's mentoring program (due 11/15) for help with your SoP 👇

Preprint (pre-TACL version): arxiv.org/abs/2510.16227

10/10

This connects to tensions btwn language generation/identification (e.g., openreview.net/forum?id=FGT...)

9/10

This overlap is to be expected if prob is influenced by factors other than gram. 7/10

1. Correlation btwn the probs of the strings within minimal pairs

2. Correlation btwn LMs’ and humans’ deltas within minimal pairs

3. Poor separation btwn prob of unpaired grammatical and ungrammatical strings

6/10

This phenomenon has previously been used to argue that probability is a bad tool for measuring grammatical knowledge -- but in fact, it follows directly from our framework! 5/10

Good LMs have low P(g=0), so they prefer the grammatical string in the minimal pair.

But for non-minimal string pairs with different underlying messages, differences in P(m) can overwhelm even good LMs. 4/10

In our framework, the probability of a string comes from two latent variables: m, the message to be conveyed; and g, whether the message is realized grammatically.

Ungrammatical strings get probability mass when g=0: the message is not realized grammatically. 3/10
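
A toy sketch of the two-latent-variable idea, for intuition only. It assumes the simplest possible factorization, P(string) = P(m) × P(g), with exactly one string per (m, g) combination; the function name `string_prob` and all numbers are made up for illustration and are not taken from the paper.

```python
# Toy illustration only: the factorization and every number here are assumptions.

def string_prob(p_message: float, p_ungrammatical: float, grammatical: bool) -> float:
    """P(string) = P(m) * P(g), assuming one string per (m, g) combination."""
    p_g = (1.0 - p_ungrammatical) if grammatical else p_ungrammatical
    return p_message * p_g

P_G0 = 0.01  # hypothetical "good" LM: low P(g=0)

# Minimal pair: both strings share the same message m, so P(m) cancels out
# and the comparison depends only on P(g).
common_good = string_prob(p_message=1e-6, p_ungrammatical=P_G0, grammatical=True)
common_bad = string_prob(p_message=1e-6, p_ungrammatical=P_G0, grammatical=False)

# Non-minimal pair: a grammatical string that conveys a very unlikely message.
rare_good = string_prob(p_message=1e-10, p_ungrammatical=P_G0, grammatical=True)

# Within the minimal pair, the grammatical string wins.
minimal_pair_ok = common_good > common_bad

# Across messages, the ungrammatical string with a likely message can still
# outscore the grammatical string with an unlikely message.
unpaired_overlap = common_bad > rare_good

print(minimal_pair_ok, unpaired_overlap)
```

With these made-up numbers, the same LM prefers the grammatical string within the minimal pair, yet gives the ungrammatical string more probability than an unrelated grammatical one, because the difference in P(m) dominates.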

Our framework provides theoretical justification for the widespread practice of using *minimal pairs* to test what grammatical generalizations LMs have acquired. 2/10

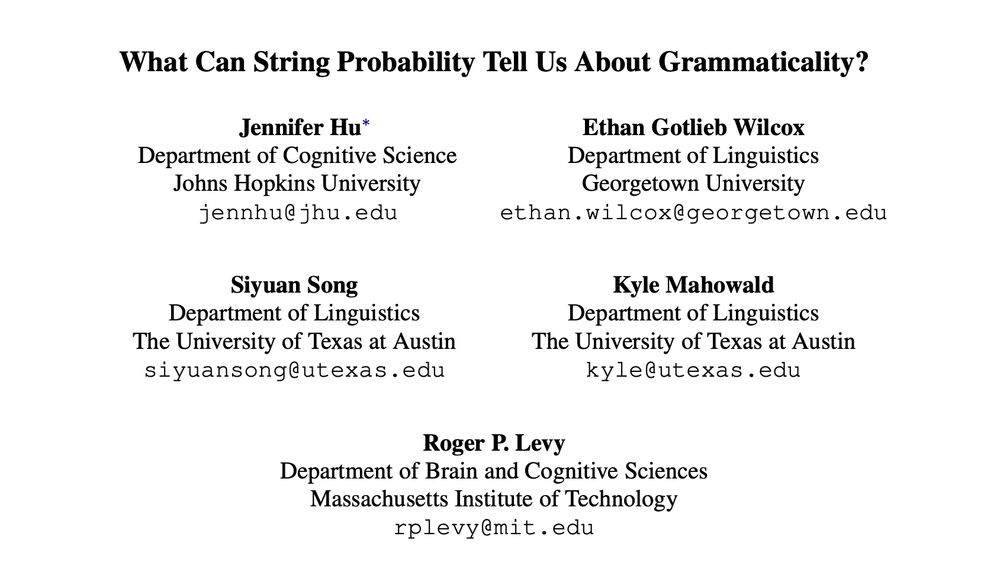

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

Caveats:

-*-*-*-*

> These are my opinions, based on my experiences, they are not secret tricks or guarantees

> They are general guidelines, not meant to cover a host of idiosyncrasies and special cases

"Non-commitment in mental imagery is distinct from perceptual inattention, and supports hierarchical scene construction"

(by Li, Hammond, & me)

link: doi.org/10.31234/osf...

-- the title's a bit of a mouthful, but the nice thing is that it's a pretty decent summary

👉 I'm presenting at two workshops (PragLM, Visions) on Fri

👉 Also check out "Language Models Fail to Introspect About Their Knowledge of Language" (presented by @siyuansong.bsky.social Tue 11-1)

We revisit a recent proposal by Comșa & Shanahan, and provide new experiments + an alternate definition of introspection.

Check out this new work w/ @siyuansong.bsky.social, @harveylederman.bsky.social, & @kmahowald.bsky.social 👇

Submit by *8/27* (midnight AoE)

How can we interpret the algorithms and representations underlying complex behavior in deep learning models?

🌐 coginterp.github.io/neurips2025/

1/4

Looking forward to seeing your submissions!

Find me giving talks on:

💬 Prod-comp asymmetry in children and LMs (Thu 7/31)

💬 How people make sense of nonsense (Sat 8/2)

📣 Also, I’m recruiting grad students + postdocs for my new lab at Hopkins! 📣

If you’re interested in language / cognition / AI, let’s chat! 😄

🗓️ Submit your 4-page paper (non-archival) by August 15!

4/4

3/4

Cognitive science offers a rich body of theories and frameworks which can help answer these questions.

2/4

How can we interpret the algorithms and representations underlying complex behavior in deep learning models?

🌐 coginterp.github.io/neurips2025/

1/4

How do LLMs engage in pragmatic reasoning, and what core pragmatic capacities remain beyond their reach?

🌐 sites.google.com/berkeley.edu/praglm/

📅 Submit by June 23rd

A huge thank you to my amazing collaborators Michael Lepori (@michael-lepori.bsky.social) & Michael Franke (@meanwhileina.bsky.social)!

(12/12)