jonhue.github.io

Today, we introduce a simple algorithm that enables the model to learn from any rich feedback!

And then turns it into dense supervision.

(1/n)

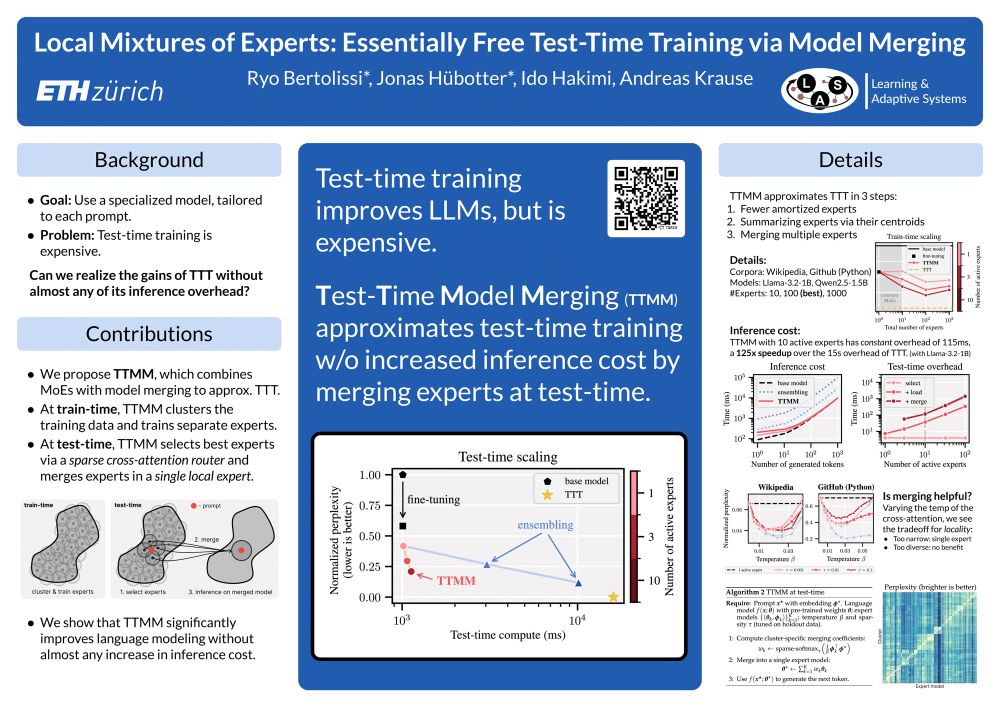

We present our poster at #1013 in the Wednesday morning session.

Joint work with the amazing Ryo Bertolissi, @idoh.bsky.social, @arkrause.bsky.social.

📌 We are presenting a possible solution on Wed, 11am to 1.30pm at B2-B3 W-609!

🗓️ Wednesday, April 23rd, 7:00–9:30 p.m. PDT

📍 Hall 3 + Hall 2B #257

Joint work with my fantastic collaborators Sascha Bongni, @idoh.bsky.social, @arkrause.bsky.social

arxiv.org/pdf/2502.05244

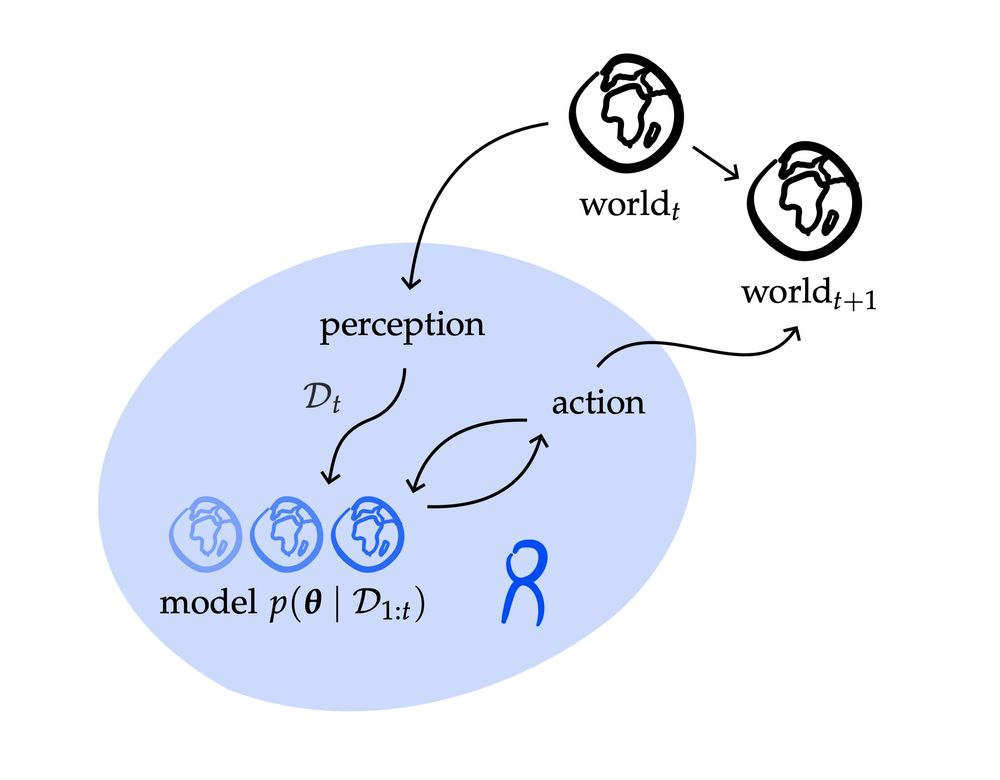

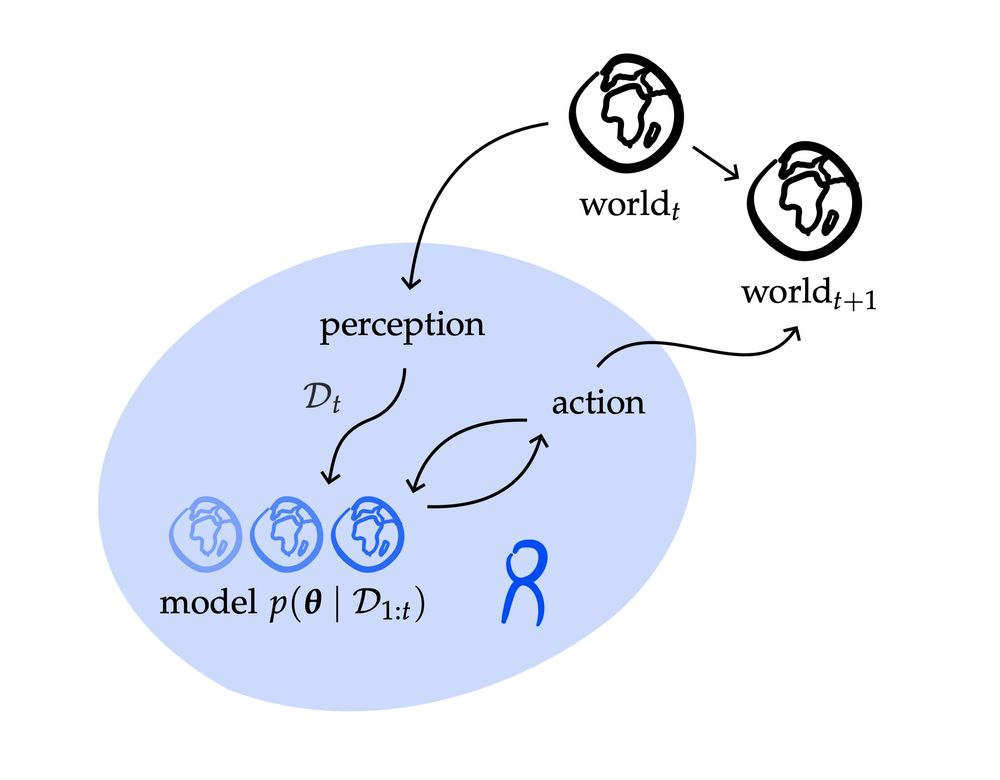

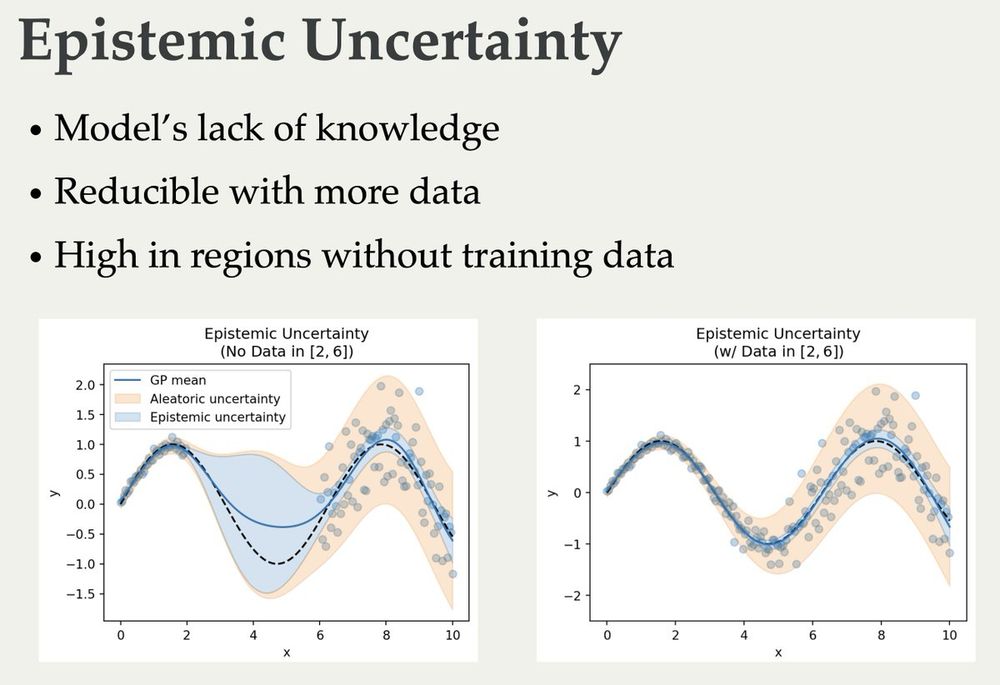

These notes aim to give a graduate-level introduction to probabilistic ML + sequential decision-making.

I'm super glad to be able to share them with all of you now!

blackhc.github.io/balitu/

and I'll try to add proper course notes over time 🤗

Join us for our ✨oral✨ at 10:30am in east exhibition hall A.

Joint work with my fantastic collaborators Sascha Bongni, @idoh.bsky.social, @arkrause.bsky.social

Joint work with my fantastic collaborators Bhavya Sukhija, Lenart Treven, Yarden As, @arkrause.bsky.social

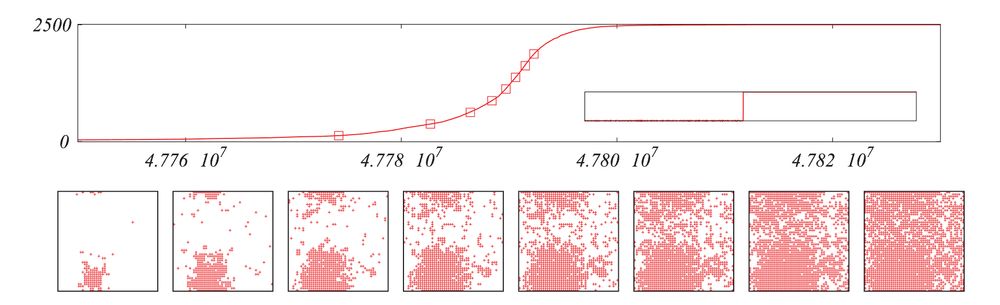

A node using B receives a benefit with respect to X, but there is a benefit to using the same tech as the majority of your neighbors.

Assume everyone uses X at time t=0. Will they switch to B?
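
One way to play with this question is a tiny best-response simulation. This is only a sketch under assumptions the post does not spell out: the payoff numbers `bonus` and `coordination`, the synchronous update rule, and the ring graph are all hypothetical choices made for illustration.

```python
def step(adj, state, bonus, coordination):
    """One synchronous best-response round; state maps node -> 'X' or 'B'."""
    new_state = {}
    for node, neighbors in adj.items():
        # Fraction of neighbors currently using B (0 for an isolated node).
        frac_b = (
            sum(state[n] == "B" for n in neighbors) / len(neighbors)
            if neighbors else 0.0
        )
        # Assumed payoffs: B always earns a flat bonus; either technology
        # earns the coordination reward only when it is the strict majority
        # among the node's neighbors.
        payoff_b = bonus + (coordination if frac_b > 0.5 else 0)
        payoff_x = coordination if frac_b < 0.5 else 0
        new_state[node] = "B" if payoff_b > payoff_x else "X"
    return new_state


def simulate(adj, state, bonus, coordination, max_rounds=100):
    """Iterate best responses until nothing changes or max_rounds is hit."""
    for _ in range(max_rounds):
        next_state = step(adj, state, bonus, coordination)
        if next_state == state:
            break
        state = next_state
    return state


# Hypothetical example: a ring of 6 nodes, everyone on X at t=0.
ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
all_x = {i: "X" for i in ring}

stuck = simulate(ring, all_x, bonus=1, coordination=2)
switched = simulate(ring, all_x, bonus=3, coordination=2)
```

Under these assumed payoffs, nobody switches when the bonus for B is smaller than the coordination reward (`stuck` stays all X), while a bonus larger than the coordination reward flips everyone to B (`switched`). Other payoff rules or asynchronous updates can behave differently.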
