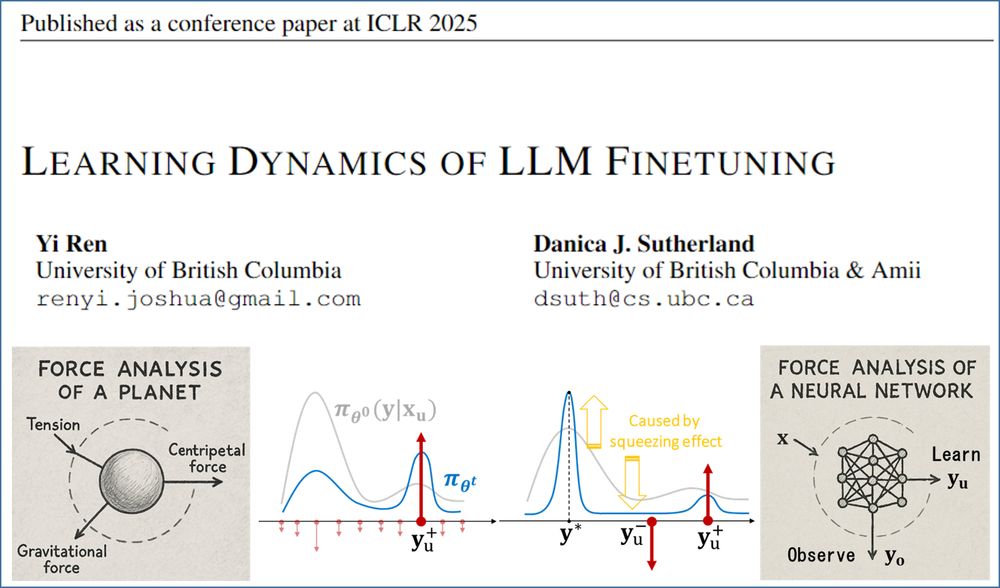

We offer a fresh perspective: try doing a "force analysis" on your model's behavior.

Check out our #ICLR2025 Oral paper:

Learning Dynamics of LLM Finetuning!

(0/12)