Preprint: arxiv.org/abs/2410.00752

Leaderboard: testgeneval.github.io/leaderboard....

I’m Catarina, a dual PhD student in 🖥️ Software Engineering with the CMU Portugal program ( @carnegiemellon.bsky.social and U. Lisbon).

Imagine a world with reliable software and user-friendly verification tools. Let’s build it together! 🚀

#PhDlife #SE #PL #HCI #CMU-Portugal

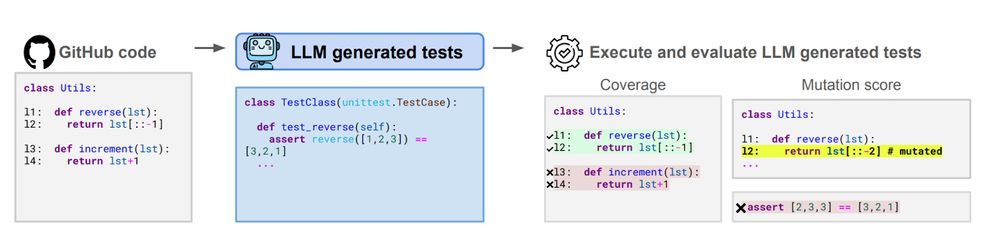

There’s growing concern that LLMs for SE are prone to data leakage, but no one has quantified it... until now. 🕵️‍♂️ 1/