Initial seed noise matters, and you can optimize it **without** any backprop through your denoiser via good old linearization. Importantly, you need to do this in Fourier space.

Essentially, this learns an upsampling kernel by looking at encoded images. It generalizes well to various foundation models, and it beats bicubic upsampling.

Fine-tune a depth estimator on SVG layers to get an ordinal depth estimator. Very cute, with highly useful results.

image (point map) + mask -> transformer -> pose / voxel -> transformer (image/mask/voxel) -> mesh / splat. Staged training with synthetic and real data, plus RL. I wish I could see more failure examples to understand the limits.

V2 of RoMA is out :) 1.7x faster with improved performance! Dense feature matching that still outperforms feed-forward 3D methods by far in outdoor scenarios.

Train a confidence predictor for tokens and merge low-confidence ones for acceleration -> faster reconstruction with VGGT/MapAnything.
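
A minimal sketch of confidence-based token merging in plain Python. Assumptions (not from the paper): the predicted confidences are already given, the `keep` most confident tokens survive, and every other token is averaged into its most cosine-similar surviving token. `merge_low_confidence` is a hypothetical helper, not part of the VGGT or MapAnything code.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-12)


def merge_low_confidence(tokens: List[Vector], confidence: List[float], keep: int) -> List[Vector]:
    """Keep the `keep` most confident tokens and fold every other token into
    its most similar kept token by plain averaging (hypothetical merge rule)."""
    if not 1 <= keep <= len(tokens):
        raise ValueError("keep must be between 1 and len(tokens)")
    order = sorted(range(len(tokens)), key=lambda i: confidence[i], reverse=True)
    kept, dropped = order[:keep], order[keep:]
    # Each kept token starts its own group.
    groups: Dict[int, List[Vector]] = {k: [tokens[k]] for k in kept}
    for d in dropped:
        target = max(kept, key=lambda k: _cosine(tokens[d], tokens[k]))
        groups[target].append(tokens[d])
    # Merged token = element-wise mean of its group, in order of decreasing confidence.
    return [[sum(col) / len(groups[k]) for col in zip(*groups[k])] for k in kept]
```

The downstream transformer then attends over `keep` tokens instead of all of them, which is where the speedup would come from.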

Foundation backbone: a fine-tuned DINOv3 trained on synthetic renders of materials, with EMA student-teacher training and multiple losses.
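
For the EMA student-teacher part, here is a sketch of the standard teacher update, with parameters stored as plain dicts of float lists. The `momentum` default of 0.99 is an assumption rather than a value from the paper, and `ema_update` is a hypothetical helper.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def ema_update(teacher: Params, student: Params, momentum: float = 0.99) -> None:
    """In-place EMA step: teacher <- momentum * teacher + (1 - momentum) * student.

    Only the student receives gradients; the teacher slowly tracks it.
    """
    for name, t_values in teacher.items():
        s_values = student[name]
        for i, (t, s) in enumerate(zip(t_values, s_values)):
            t_values[i] = momentum * t + (1.0 - momentum) * s
```

A high momentum makes the teacher a smoothed, slowly moving copy of the student, which is what gives stable targets for the distillation losses.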

Very simple idea -- for inverse problems, you can introduce equivariant augmentations that do not change the solution, and then find a solution consistent with all of them. Augmentation in denoising.
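
A minimal sketch of the augmentation idea applied to denoising, assuming the augmentations are cyclic shifts of a 1D signal. It swaps in a simpler, standard construction: averaging `g_inv(D(g(y)))` over the whole group (symmetrization), which makes any denoiser exactly shift-equivariant. The paper's actual optimization may differ, and `toy_denoiser` is a deliberately non-equivariant stand-in, not a real denoiser.

```python
from typing import Callable, List

Signal = List[float]


def roll(x: Signal, k: int) -> Signal:
    """Cyclic shift by k: output[i] = x[(i - k) % n]."""
    n = len(x)
    return [x[(i - k) % n] for i in range(n)]


def equivariant_denoise(y: Signal, denoiser: Callable[[Signal], Signal]) -> Signal:
    """Average g_inv(D(g(y))) over every cyclic shift g of the signal.

    The result commutes with cyclic shifts even if `denoiser` does not.
    """
    n = len(y)
    acc = [0.0] * n
    for k in range(n):
        # Augment, denoise, then undo the augmentation.
        restored = roll(denoiser(roll(y, k)), -k)
        for i, v in enumerate(restored):
            acc[i] += v / n
    return acc


# Stand-in "denoiser" that only shrinks index 0, so it is NOT shift-equivariant.
def toy_denoiser(x: Signal) -> Signal:
    return [0.5 * v if i == 0 else v for i, v in enumerate(x)]
```

With `y = [1.0, 2.0, 3.0, 4.0]`, the symmetrized output is `0.875 * y` (each sample lands on index 0 in exactly one of the four shifts), and shifting the input shifts the output by the same amount.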

VGGT-like architecture, but simplified to estimate depth and ray maps (not point maps). Uses teacher-student training of Depth Anything v2.
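
Going from depth + ray maps back to a point map is a per-pixel `origin + depth * direction`. A minimal sketch, assuming the ray map stores a per-pixel origin and unit direction and that depth is measured along the ray. If depth were z-depth instead, each direction would first need rescaling so its z-component is 1. `unproject` is a hypothetical helper.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def unproject(depth: List[List[float]],
              ray_origin: List[List[Vec3]],
              ray_dir: List[List[Vec3]]) -> List[List[Vec3]]:
    """Per-pixel 3D point = origin + depth * direction (depth assumed along the ray)."""
    points: List[List[Vec3]] = []
    for d_row, o_row, r_row in zip(depth, ray_origin, ray_dir):
        row: List[Vec3] = []
        for d, o, r in zip(d_row, o_row, r_row):
            row.append((o[0] + d * r[0], o[1] + d * r[1], o[2] + d * r[2]))
        points.append(row)
    return points
```

Predicting depth and rays separately, rather than points directly, keeps camera geometry (rays) and scene geometry (depth) disentangled, and the point map is always recoverable this way.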

Make a rough warp and push it through an image-to-video model, denoising together with the warp up to a certain timestep, then let the model finish the rest without interference.

3D Gaussian Splatting for ultrasound. Tailored parameterization and field-of-view adaptation for ultrasound use cases.

Is very good captioning all you need? Makes a lot of sense, given that it brings your problem closer to a 1-to-1 mapping instead of many-to-1.

Single- (few-) step denoisers exist, and many recent works have hinted at this, but here is another one: you can decode the image structure from the initial noise fairly easily.

I like simple ideas -- this one says you should consider multiple views when you prune/clone, which allows fewer Gaussians to be used for training.
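
A minimal sketch of a multi-view keep/prune decision. Assumptions: `importance[v][g]` is some per-view contribution score of Gaussian `g` (for example its accumulated blending weight when rendering view `v`), and the multi-view rule is a simple mean over views compared against a threshold. The paper's actual score and rule may differ; `multiview_keep_mask` is a hypothetical helper.

```python
from typing import List


def multiview_keep_mask(importance: List[List[float]], threshold: float) -> List[bool]:
    """importance[v][g]: contribution of Gaussian g in view v.

    A Gaussian is kept if its mean contribution across all views reaches
    `threshold`, so the decision is not driven by any single view.
    """
    num_views = len(importance)
    num_gaussians = len(importance[0])
    keep: List[bool] = []
    for g in range(num_gaussians):
        mean_score = sum(importance[v][g] for v in range(num_views)) / num_views
        keep.append(mean_score >= threshold)
    return keep
```

With scores `[[0.9, 0.0, 0.2], [0.8, 0.1, 0.0], [0.7, 0.0, 0.4]]` and threshold 0.15, the multi-view rule keeps Gaussians 0 and 2, whereas looking only at the second view would also prune Gaussian 2.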

Gaussian Splatting + image-based rendering (i.e., copy things over directly from nearby views). When your Gaussians cannot describe highlights, let nearby images guide you.

Extract dynamic 3D Gaussians for an object -> use Vision Language Models to extract physics parameters -> model a force field (wind). Leads to some fun results.

VGGT extended to dynamic scenes with a dynamic mask predictor.

Apparently not. This indeed matches the empirical behavior I have experienced with these models. They are approximate, but then what actually are their mathematical properties?

Tracking, waaaaaay back in the day, used to be solved with sampling methods. They are now back. This also reminds me of my first major conference paper, where I looked into how much impact the initial target point has.

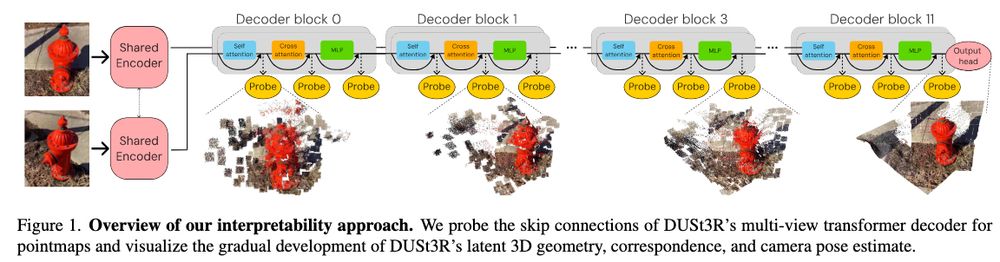

We use Dust3r as a black box. This work looks under the hood at what is going on. The internal representations seem to "iteratively" refine towards the final answer. Quite similar to what goes on in point cloud net

Note: this paper does not claim that diffusion models are better; in fact, specialized models are. It just shows the potential of using diffusion models to solve geometric problems.

Pretty much a deep image prior for denoising models. Without ANY data, with a single image, you can train a denoiser via diffusion training, and it just magically learns to solve inverse problems.
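
The "diffusion training" on a single image boils down to repeatedly noising (crops of) that one image and training the network to predict the noise. A minimal sketch of building one training pair with the standard DDPM forward process `x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps`. The random-crop augmentation and the helper names are assumptions, and the network and loss are left out.

```python
import math
import random
from typing import List, Tuple

Image = List[List[float]]


def random_crop(img: Image, size: int, rng: random.Random) -> Image:
    """Square crop at a random position (hypothetical augmentation)."""
    r = rng.randrange(len(img) - size + 1)
    c = rng.randrange(len(img[0]) - size + 1)
    return [row[c:c + size] for row in img[r:r + size]]


def noisy_training_pair(img: Image, size: int, alpha_bar: float,
                        rng: random.Random) -> Tuple[Image, Image, Image]:
    """Return (x0, x_t, eps); the denoiser is trained to predict eps from x_t."""
    x0 = random_crop(img, size, rng)
    eps = [[rng.gauss(0.0, 1.0) for _ in row] for row in x0]
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    xt = [[a * p + b * e for p, e in zip(p_row, e_row)] for p_row, e_row in zip(x0, eps)]
    return x0, xt, eps
```

Every training example comes from the same image; only the crop, the noise level `alpha_bar` and the noise itself change from step to step.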

Make your RoPE encoding 3D by including a z axis, then manipulate your image by simply manipulating your positional encoding in 3D --> novel view synthesis. Neat idea.
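
A minimal sketch of 3D RoPE, assuming the channel pairs are split into three equal groups rotated by the x, y and z positions respectively, with the usual `base ** (-2j / dim)` frequencies inside each group. The paper's exact channel allocation may differ. The key property is that the query/key dot product depends only on the 3D position difference, which is why editing positions in 3D can act as a geometric edit.

```python
import math
from typing import List, Sequence


def rope_3d(vec: Sequence[float], pos: Sequence[float], base: float = 10000.0) -> List[float]:
    """Rotate consecutive channel pairs of `vec`: the first third of the channels
    uses pos[0] (x), the second third pos[1] (y), the last third pos[2] (z)."""
    d = len(vec)
    if d % 6 != 0:
        raise ValueError("dimension must be divisible by 6 (3 axes x 2-channel pairs)")
    per_axis = d // 3              # channels assigned to each axis
    out = list(vec)
    for axis in range(3):
        for j in range(per_axis // 2):
            angle = pos[axis] * base ** (-2.0 * j / per_axis)
            c, s = math.cos(angle), math.sin(angle)
            i = axis * per_axis + 2 * j
            a, b = vec[i], vec[i + 1]
            # 2D rotation of the (a, b) pair by `angle`.
            out[i] = a * c - b * s
            out[i + 1] = a * s + b * c
    return out
```

Since each pair is rotated, `dot(rope_3d(q, p1), rope_3d(k, p2))` is unchanged if both positions are shifted by the same 3D offset, while changing only the z coordinate of one token does change the attention score.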

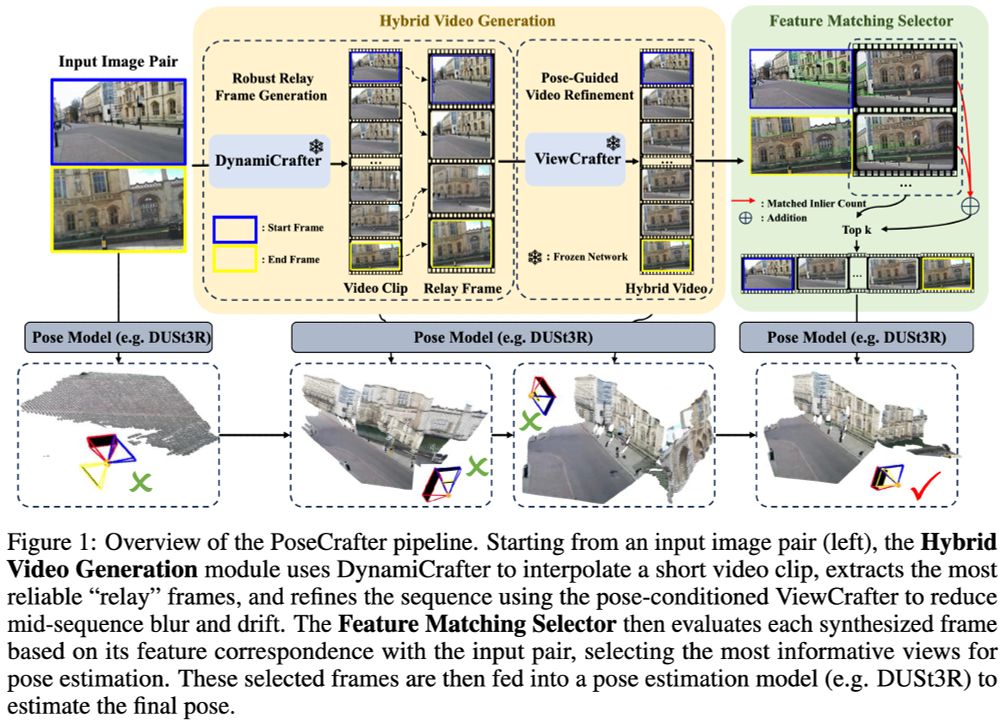

Hybrid Video Synthesis

While not perfect, video models do an okay job of creating novel views. Use them to "bridge" between extreme views for pose estimation.

Transformer patches don't need to be of uniform size -- choose sizes based on entropy --> faster training/inference. Are scale-spaces gonna make a comeback?
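
A minimal sketch of entropy-driven patch sizes as a quadtree: keep splitting a square patch into four while the Shannon entropy of its quantized intensities exceeds a threshold. The quadtree scheme, the 16-bin quantization and the threshold are assumptions, and the paper's exact selection rule may differ. The image is assumed to be square grayscale (0..255) with a power-of-two side.

```python
import math
from collections import Counter
from typing import List, Tuple

Image = List[List[int]]        # grayscale, values in 0..255
Patch = Tuple[int, int, int]   # (row, col, size)


def patch_entropy(img: Image, r: int, c: int, size: int, bins: int = 16) -> float:
    """Shannon entropy (bits) of the quantized intensities inside a square patch."""
    counts = Counter(
        img[i][j] * bins // 256 for i in range(r, r + size) for j in range(c, c + size)
    )
    n = size * size
    return -sum((k / n) * math.log2(k / n) for k in counts.values())


def entropy_patches(img: Image, r: int, c: int, size: int,
                    threshold: float, min_size: int) -> List[Patch]:
    """Quadtree split: keep splitting a patch into four while its entropy exceeds `threshold`."""
    if size <= min_size or patch_entropy(img, r, c, size) <= threshold:
        return [(r, c, size)]  # low-detail (or minimum-size) patch stays one token
    half = size // 2
    patches: List[Patch] = []
    for dr in (0, half):
        for dc in (0, half):
            patches += entropy_patches(img, r + dr, c + dc, half, threshold, min_size)
    return patches
```

On an 8x8 image that is flat except for a checkerboard in the top-left quadrant, this yields four 2x2 patches for the textured quadrant and three 4x4 patches for the flat ones: 7 tokens instead of 16 uniform 2x2 patches.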
