Prev: @CornellORIE, @MSFTResearch, @IBMResearch, @uoftmie 🌈

When: Tuesday, Jan 20, 2026, 8:30am–12:30pm SGT

Where: Garnet 216 (Singapore EXPO)

(Connor’s intro slides are shown here.)

CC @aaai.org

I am on the job market this year - check out my website (conlaw.github.io) for more details on what I've been up to.

doi.org/10.1007/978-...

DiffCoALG bridges the gap between classic algorithms & differentiable learning.

Think: LLM reasoning, routing, SAT, MIP — neurally optimized.

📌 Submit by Aug 22! 🤖🧠

🔗 sites.google.com/view/diffcoa...

#NeurIPS2025

Come chat with Haotian at poster W-515 to learn about our work on automatic equivalence checking for optimization models!

🕐 Wed 16 Jul 4:30 p.m. PDT — 7 p.m. PDT

📍East Exhibition Hall A-B #E-1104

🔗 arxiv.org/abs/2502.16380

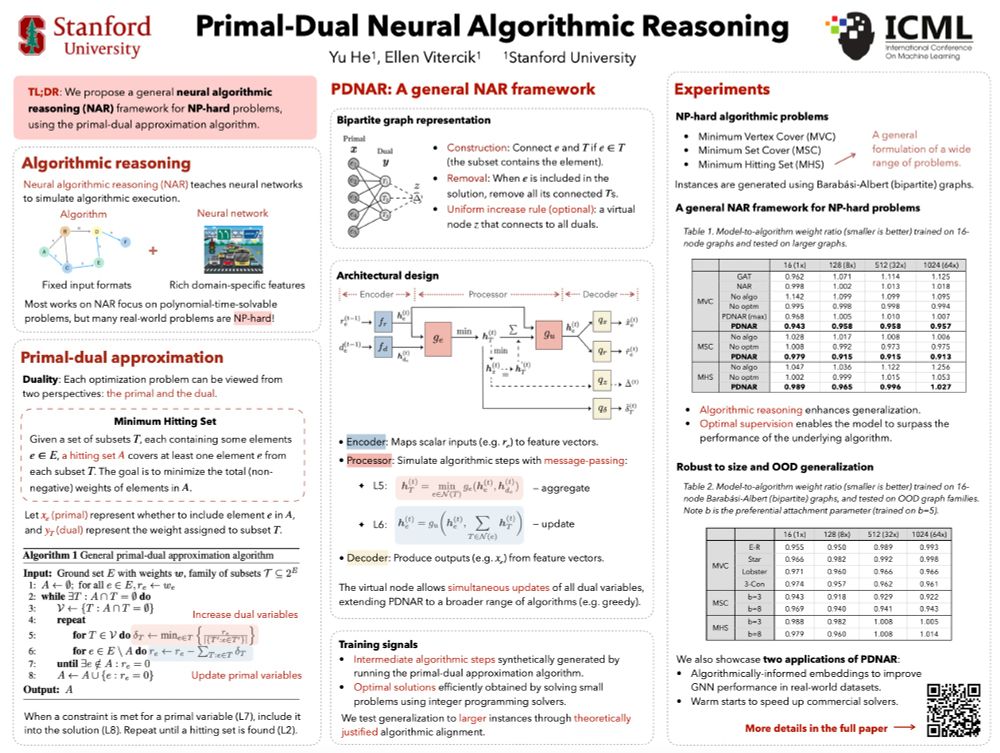

We bring Neural Algorithmic Reasoning (NAR) to the NP-hard frontier 💥

🗓 Poster session: Tuesday 11:00–13:30

📍 East Exhibition Hall A-B, #E-3003

🔗 openreview.net/pdf?id=iBpkz...

🧵

"I Want it That Way": Enabling Interactive Decision Support via Large Language Models and Constraint Programming

🔗: arxiv.org/abs/2312.06908

LLMs for Cold-Start Cutting Plane Separator Configuration

🔗: arxiv.org/abs/2412.12038

Possibly --- even a step _forward_?

/s

jobs.uiowa.edu/postdoc/view...
