Come and explore how representational similarities behave across datasets :)

📅 Thu Jul 17, 11 AM-1:30 PM PDT

📍 East Exhibition Hall A-B #E-2510

Huge thanks to @lorenzlinhardt.bsky.social, Marco Morik, Jonas Dippel, Simon Kornblith, and @lukasmut.bsky.social!

Our #icml2025 paper analyses how vision model similarities generalize across datasets, the factors that influence them, and their link to downstream task behavior. 🧵1/7

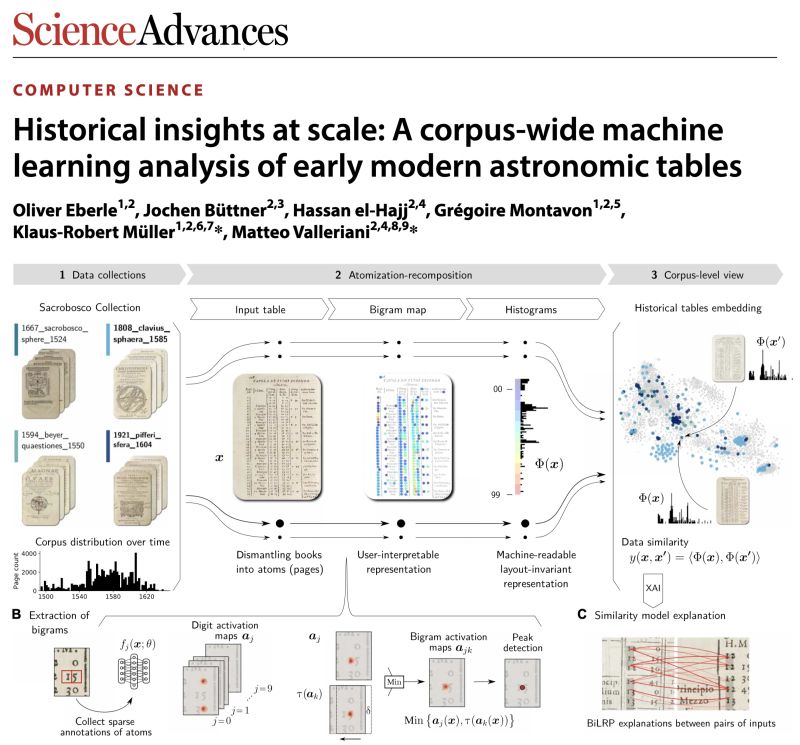

www.science.org/doi/10.1126/...

@mpiwg.bsky.social @tuberlin.bsky.social @bifold.berlin @science.org

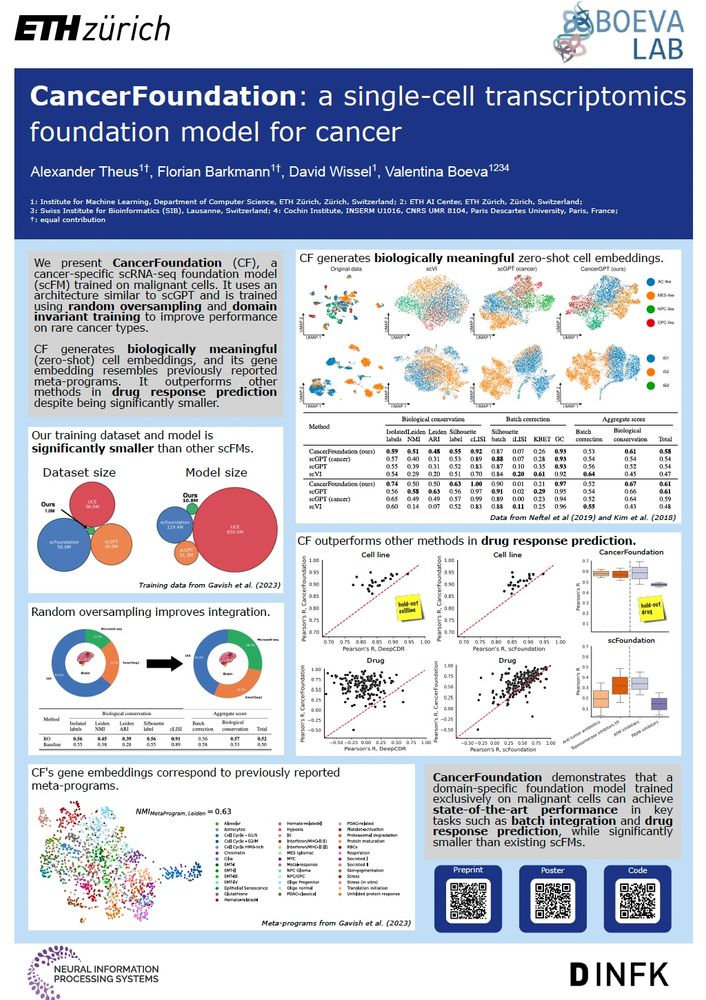

Preprint: biorxiv.org/content/10.1...

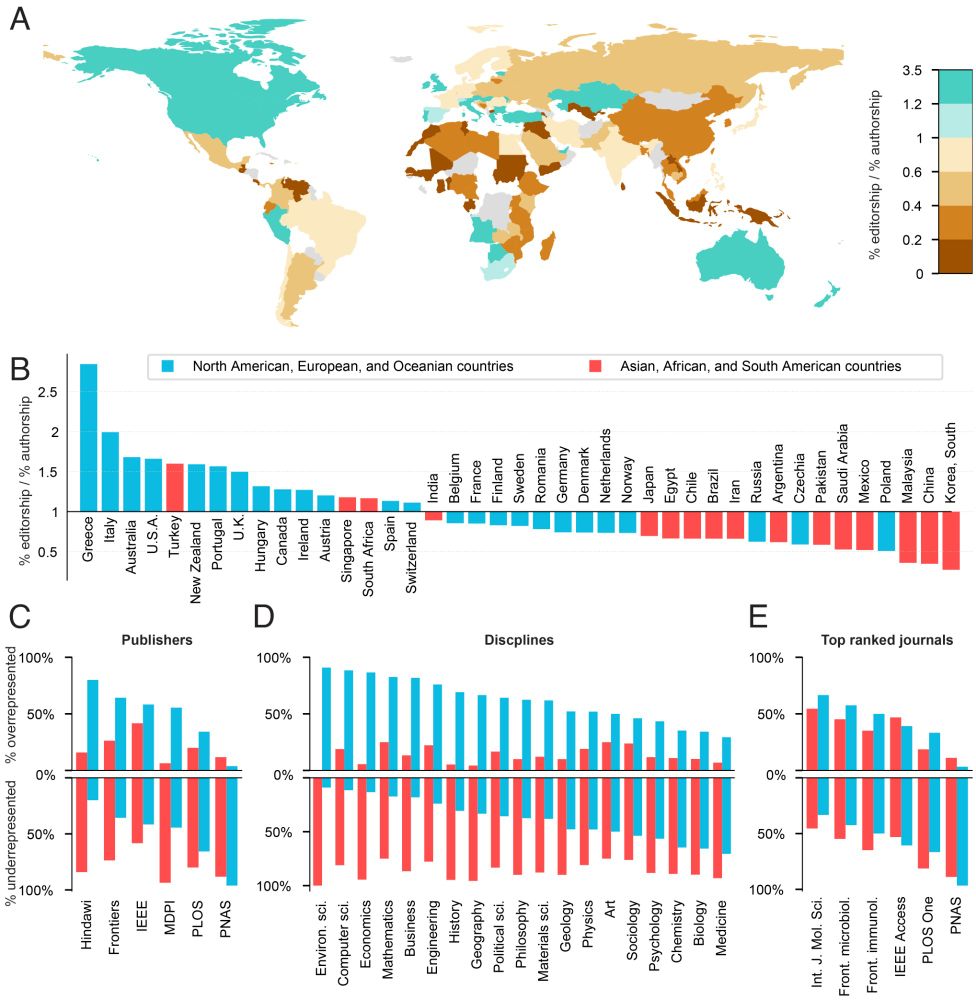

www.pnas.org/doi/abs/10.1...