Website: https://ml.informatik.uni-freiburg.de/profile/purucker/

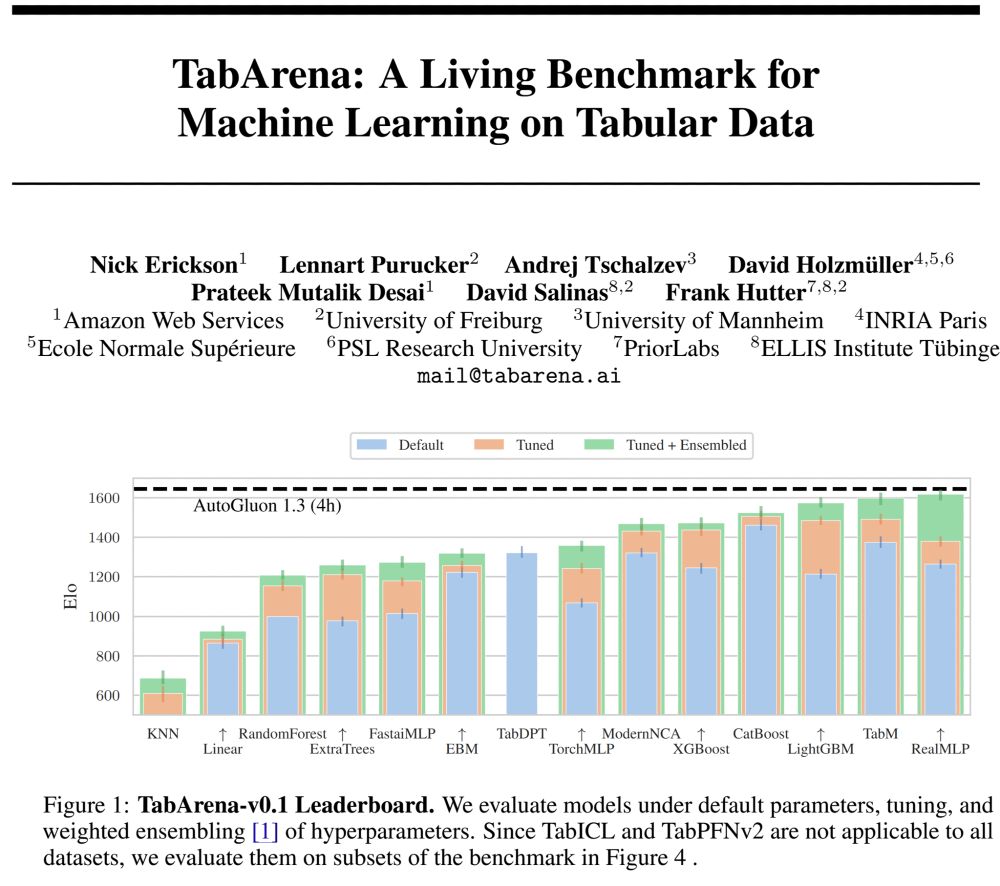

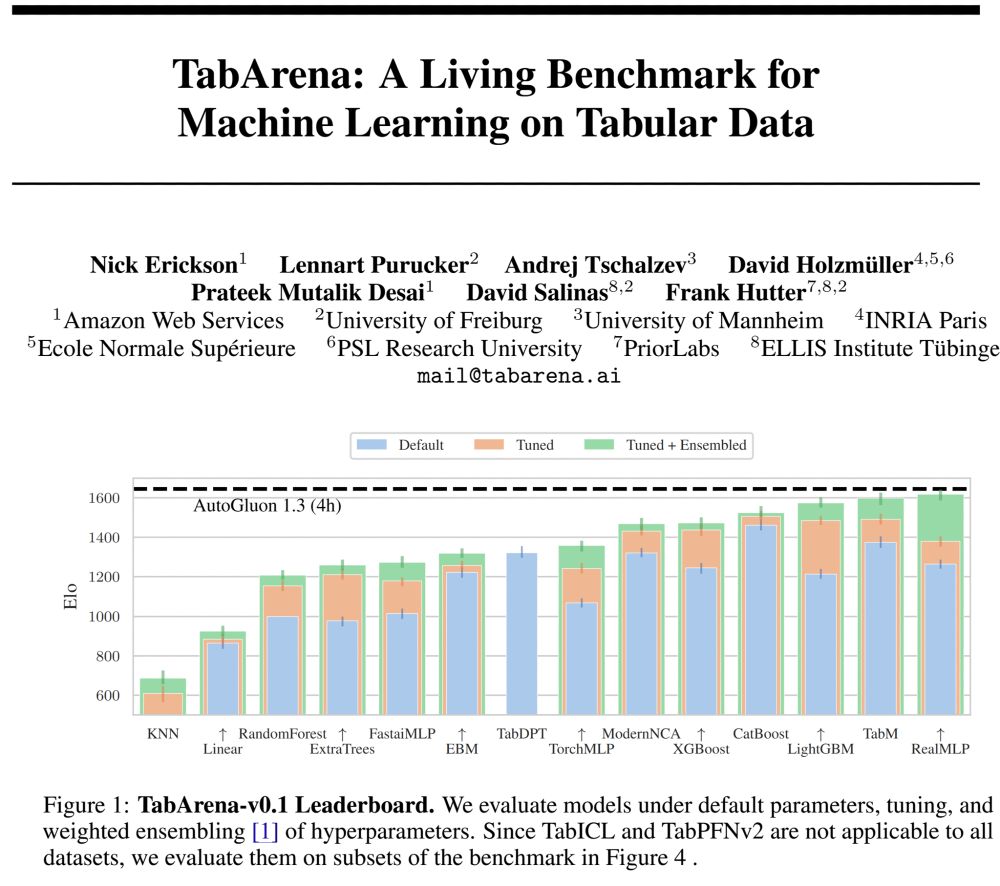

📊 an online leaderboard (submit!)

📑 carefully curated datasets

📈 strong tree-based, deep learning, and foundation models

🧵

CfP: sites.google.com/view/eurips2... (papers due 20 Oct)

Join us @euripsconf.bsky.social to discuss neural tabular models and systems for predictive ML, tabular reasoning and retrieval, table synthesis and more ✨

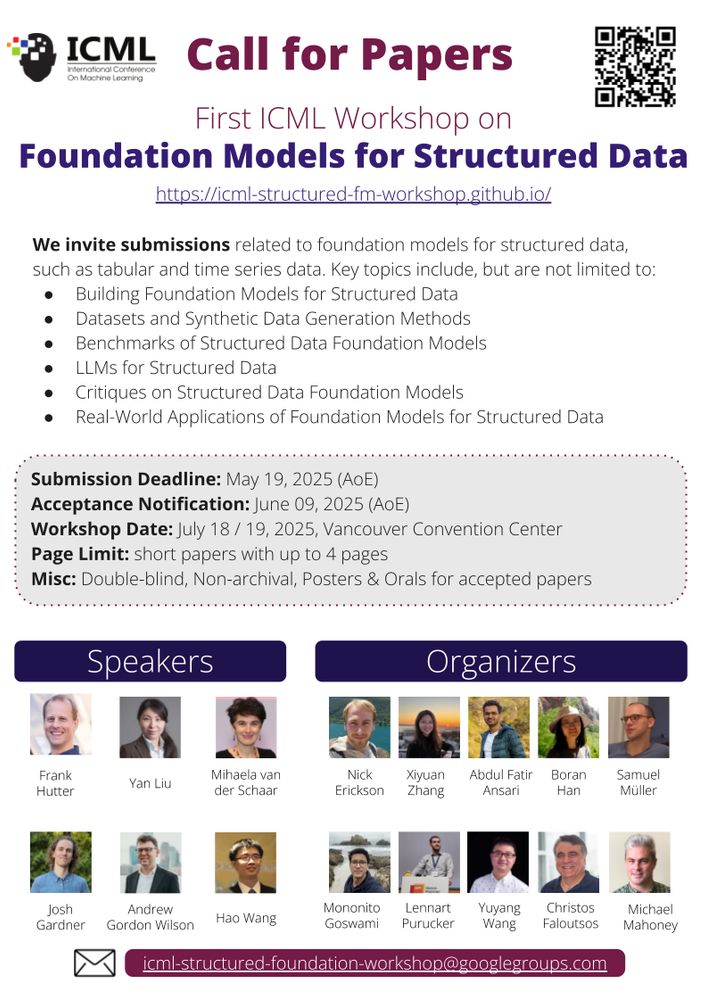

Call for Papers: icml-structured-fm-workshop.github.io

This is excellent news for (small) tabular ML! Check out our Nature article (nature.com/articles/s41...) and code (github.com/PriorLabs/Ta...)

🤖 AutoML (e.g., AutoGluon)

📊 Data Science (e.g., LLMs for Feature Engineering)

🏛️ Foundation Models (e.g., TabPFN)

Looking forward to insightful discussions—feel free to reach out!

1. 70% win rate vs. version 1.1.

2. TabPFNMix foundation model, parallel fit

3. AutoGluon-Assistant: Zero-code ML with LLMs.

Also, very cool improvements for TimeSeries related to Chronos!

#AutoML #TabularData

Happy to add AutoML folks and friends; just reply or DM to suggest yourself or others.

go.bsky.app/5PnMDUK