I teach C++ & computer graphics and make videogames

Working on a medieval village building game: https://youtube.com/playlist?list=PLSGI94QoFYJwGaieAkqw5_qfoupdppxHN&cbrd=1

Check out my cozy road building traffic sim: https://t.ly/FfOwR

#indiedev #gamedev #indiegames #devlog

www.youtube.com/watch?v=fymx...

(this is from here: agraphicsguynotes.com/posts/understanding_the_math_behind_restir_gi)

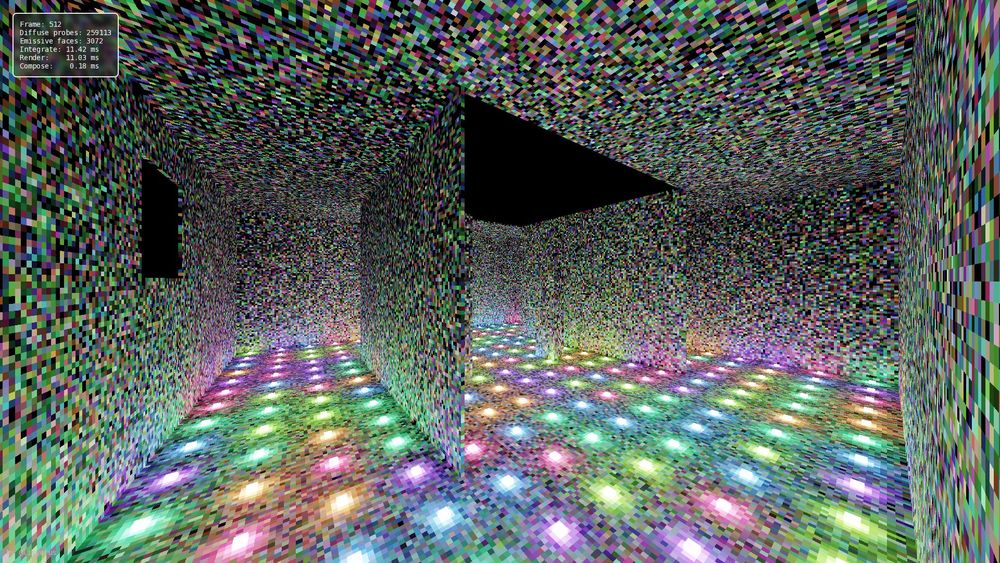

v i b e s

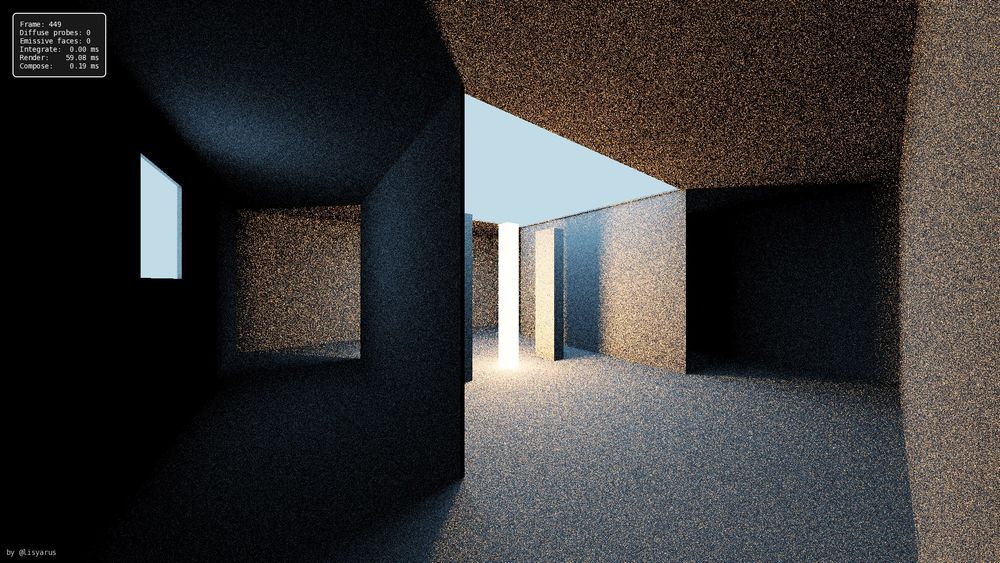

v i b e s