Thanks for putting it on!

www.youtube.com/watch?v=XWZg...

Very grateful for all our donors. Your support enables everything we do. Also grateful for the awesome gang at MIRI who worked their asses off this year. You guys crushed it!

Thanks everyone 🙏 Happy New Year 🎉

4 hours left to go, and by golly it looks like we’ve got a real shot at securing all the matching.

Thanks everyone! Happy New Year 🎉

This is real counterfactual matching: whatever doesn’t get matched by the end of Dec 31, we don’t get. 🧵

Thanks to all those who stepped up in the last couple of days to close the gap by ~$500k. ❤️

~$700k in matching funds remaining.

newyorker.com/best-books-2...

His latest video, an hour+ long interview with Nate Soares about “If Anyone Builds It, Everyone Dies,” is a banger. My new favorite!

www.youtube.com/watch?v=5CKu...

🗓️ Tuesday Oct 28 @ 7:30pm at Manny’s in SF.

Get your tickets:

I'll be there, if you're in town come say hi!

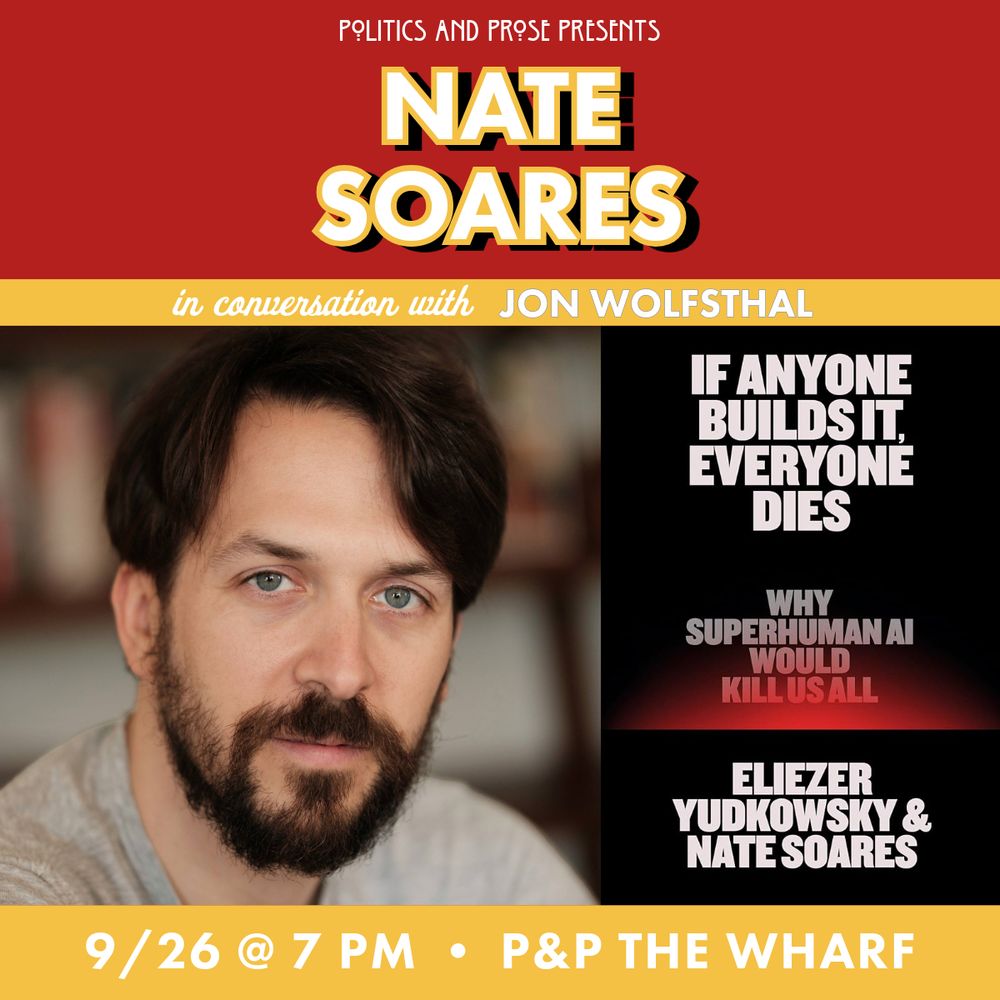

Join us for a conversation between co-author Nate Soares and @jonatomic.bsky.social, Director of Global Risk at the Federation of American Scientists.

Audience Q&A, book signing, and more:

politics-prose.com/nate-soares

#8 Hardcover Nonfiction (www.nytimes.com/books/best-s...)

Thanks @scientistsorg.bsky.social and @futureoflife.org for putting this event together.

We kicked off our AGI x Global Risk day with remarks from @repbillfoster.bsky.social, @reptedlieu.bsky.social, and John Bailey — setting the stage for a day of bold dialogue on the future of AGI 🌎

Unfortunately the full episode is for subscribers only.

Fortunately, as a subscriber, I can share the full thing 🙂
