Formerly Crunchy Data, Microsoft, Citus Data, AWS, TCD, VU

Our principles were:

- Most data lives in S3

- @duckdb.org has the best query engine

- Iceberg will be the dominant table format

- No compromise on Postgres features

So, we built Crunchy Data Warehouse.

1/n

Join us!

www.meetup.com/postgres-mee...

pg_lake is a set of extensions (from Crunchy Data Warehouse) that add comprehensive Iceberg support and data lake access to Postgres, with @duckdb.org transparently integrated into the query engine.

Announcement blog: www.snowflake.com/en/engineeri...

Thanks to @marcoslot.com + @daveandersen.bsky.social for their collaboration on this project

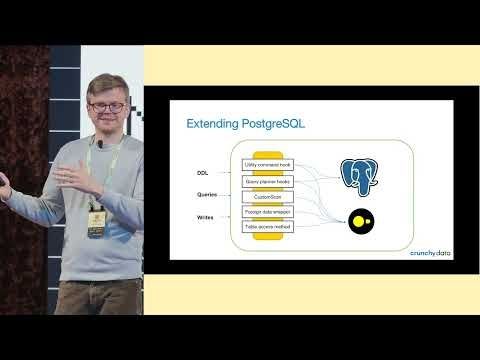

TLDR: The Postgres extension ecosystem is fraught with footguns. Other DBMSs have fewer extensions but also fewer problems. DuckDB has the cleanest API.

👥 Authors: Abigale Kim, Marco Slot, David Andersen, Andrew Pavlo

📄 PDF: https://www.vldb.org/pvldb/vol18/p1962-kim.pdf

www.crunchydata.com/blog/crunchy...

www.youtube.com/watch?v=HZAr...

Speed up Postgres analytical queries 100x with 2 commands.

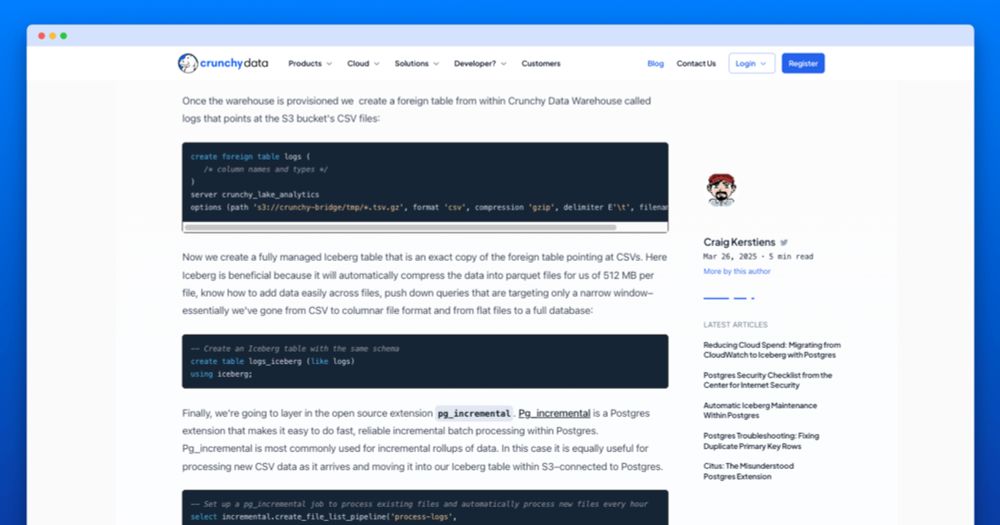

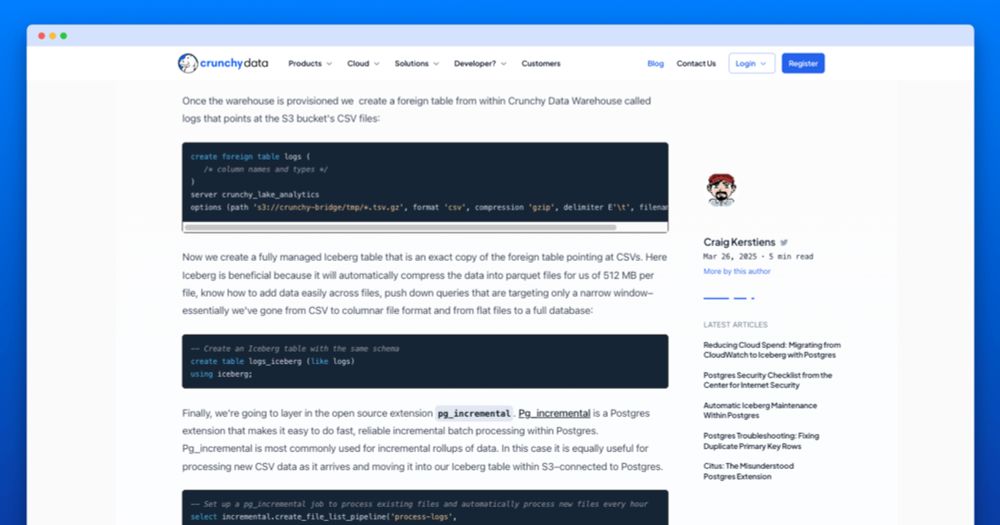

It's an introduction to how and why we used Iceberg and DuckDB to build a Postgres Data Warehouse:

www.youtube.com/watch?v=cEnq...

You can now use Postgres as a modern Data Warehouse anywhere, using any S3-compatible storage API. Query, import, or export files in your data lake or store data in Iceberg with automatic maintenance and very fast queries.

Now within Crunchy Data Warehouse we will automatically vacuum and continuously optimize your Iceberg data by compacting and cleaning up files.

Dig into the details of how this works www.crunchydata.com/blog/automat...

Then go build products for that customer.

This works.

We're always aiming for a 0-touch experience where possible, so we went out of our way to make Iceberg compaction & cleanup fully automatic without any configuration.

Still pretty interesting to see a manual vacuum:

Step 1: Point at your dataset and we'll load it for you

Step 2: Query it

Step 3: Profit

I like that it's fast, to the point, and quite clever.

I was impressed with a SQL query it came up with today for finding contiguous ranges of integers. ChatGPT's version was 3x slower.
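The query itself is not shown in the post. For readers unfamiliar with the problem, here is a minimal Python sketch of one common approach to finding contiguous integer ranges (often called "gaps and islands"). The function name `contiguous_ranges` is made up for this illustration, and nothing here is claimed to match the SQL that was actually generated.

```python
from itertools import groupby


def contiguous_ranges(values):
    """Return (start, end) pairs, one per run of consecutive integers."""
    ordered = sorted(set(values))  # duplicates and input order do not matter
    ranges = []
    # Within a run of consecutive integers, (value - position) is constant.
    # This mirrors the usual SQL trick of grouping by value - row_number().
    for _, run in groupby(enumerate(ordered), key=lambda pair: pair[1] - pair[0]):
        run = [value for _, value in run]
        ranges.append((run[0], run[-1]))
    return ranges


print(contiguous_ranges([1, 2, 3, 7, 8, 10]))  # prints [(1, 3), (7, 8), (10, 10)]
```

Sorting first keeps the grouping step linear; in SQL the equivalent work is done by the window function's ORDER BY.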

Using pg_parquet you can trivially export data to S3, and using Crunchy Data Warehouse you can just as easily query or import Parquet files from PostgreSQL.

I created the initial version with Vinod Sridharan (an absolutely brilliant engineer) at Microsoft a few years ago and it's come a long way since.

It reimplements the Mongo API with exact semantics in PostgreSQL. Already used by FerretDB!

github.com/microsoft/do...

Solving the right problems in the right way is really hard.

Today, we are releasing Part 1 of our 3-part blog series on how we designed a new storage mechanism for search and analytics in Postgres.

www.paradedb.com/blog/block_s...

Here's why we did it, how we did it, and why you should care. 🧵