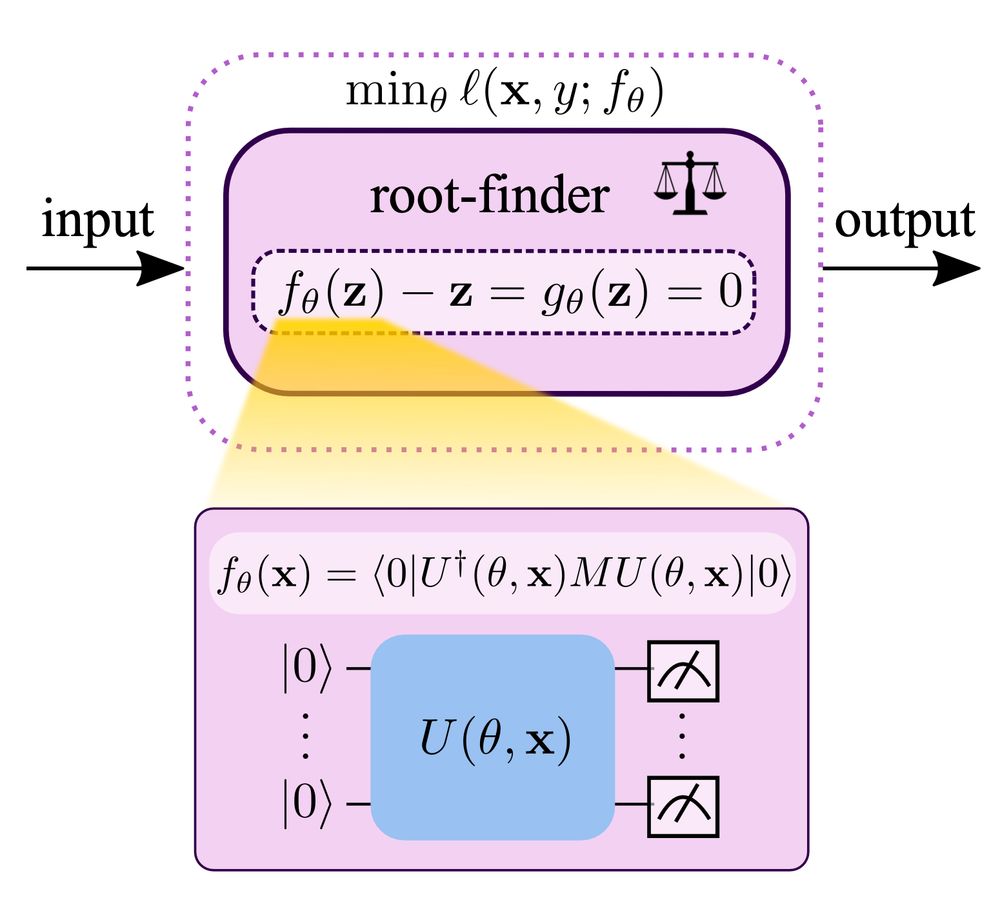

🚀 Introducing SuperDiff 🦹‍♀️ – a principled method for efficiently combining multiple pre-trained diffusion models solely during inference!

Check out the 🧵 to see how we superimposed proteins as well as images, all thanks to a fast new density estimator. Curious to see what 🍩 & 🗺️ would produce?

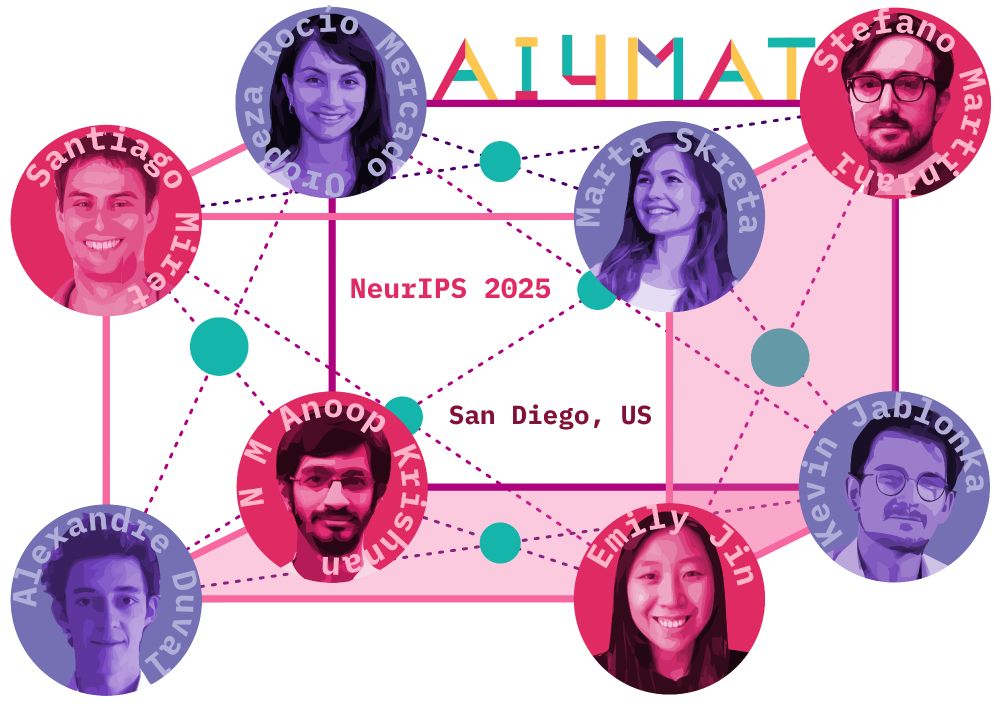

Consider submitting full-length papers or shorter-length findings. We also have a special track for papers on benchmarking AI for materials design.

sites.google.com/view/ai4mat/...

Now out on arXiv: arxiv.org/abs/2502.18966

A short explanation thread 👇

www.nature.com/articles/d41...

It's nice to see an easy-to-compute log-likelihood estimator for SDE sampling of diffusion models (not just ODE)

📄 arxiv.org/abs/2412.17762

🐍 github.com/necludov/sup...

Turns out you can use our all-new Itô density estimator 🔥 to compute densities under a diffusion model efficiently 🚀!

📄Paper: arxiv.org/abs/2412.17762

💻Code: github.com/necludov/sup...

🤗HuggingFace: huggingface.co/superdiff

🔗 website: sites.google.com/view/fpiwork...

🔥 Call for papers: sites.google.com/view/fpiwork...

more details in thread below👇 🧵