Soon @cnrs.fr @univ-amu.fr; currently postdoc at MIT, Cambridge, US.

osf.io/preprints/ps...

🧵1/12

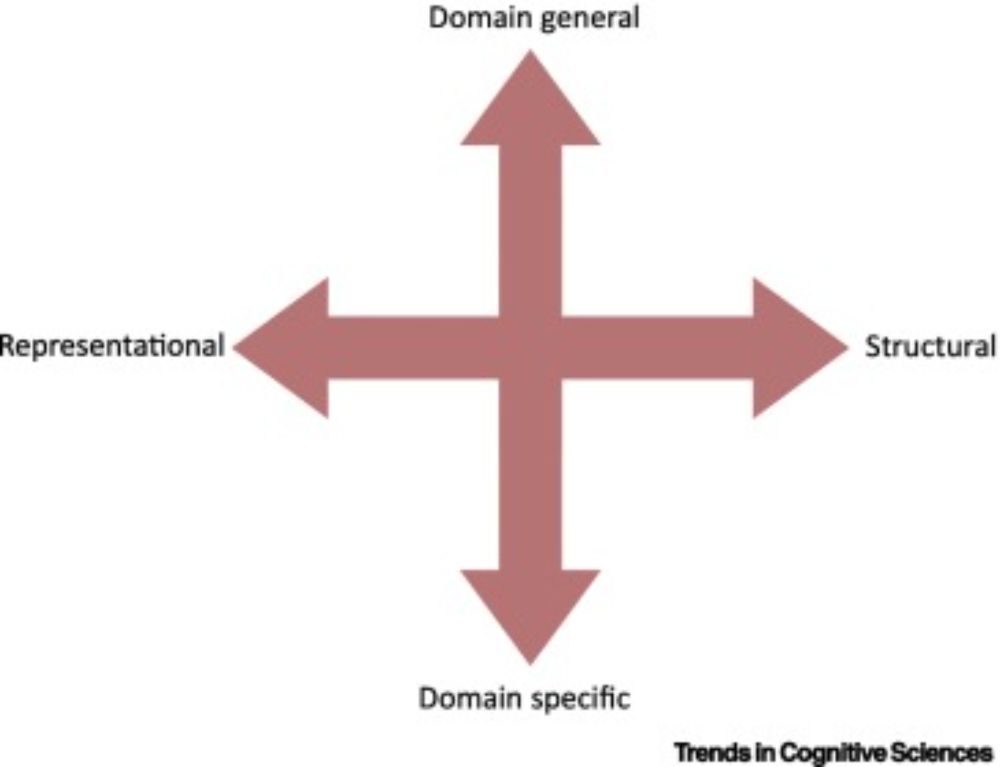

The project explores how human developmental processes can inspire more grounded and socially aware conversational AI (1/6).

www.cell.com/trends/cogni... @mpi-nl.bsky.social

Join leading researchers for a deep dive into cutting-edge work in infancy research.

infantstudies.org/icis-online-...

#InfantResearch #InfantStudies

You know, beyond surveillance and chatbots 🙊

Sign up and join our Interspeech tutorial: Speech Technology Meets Early Language Acquisition: How Interdisciplinary Efforts Benefit Both Fields. 🗣️👶

www.interspeech2025.org/tutorials

⬇️ (1/2)

We adapt the ABX task, commonly used to evaluate speech models, to investigate how multilingual text models represent form (language) vs content (meaning).

📄 arxiv.org/pdf/2505.17747

🙌 With Jie Chi, Skyler Seto, @maartjeterhoeve.bsky.social, Masha Fedzechkina & Natalie Schluter
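
For readers new to ABX: a triplet (A, B, X) counts as correct when X, which shares a category with A, lies closer to A than to B. Below is a minimal sketch of an ABX error rate over sentence embeddings, assuming cosine distance, ties scored as half an error, and hypothetical 4-dimensional toy embeddings; it is not the evaluation pipeline from the paper. Swapping the label list switches the question between form (language) and content (meaning).

```python
import itertools

import numpy as np


def cosine_distance(u, v):
    # 1 - cosine similarity; 0 means identical direction.
    return 1.0 - float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))


def abx_error(embeddings, labels):
    """Fraction of (A, B, X) triplets where X (same label as A, different from B)
    is not closer to A than to B. Ties count as half an error (an assumption)."""
    errors, total = 0.0, 0
    for a, b, x in itertools.permutations(range(len(labels)), 3):
        if labels[a] == labels[x] and labels[b] != labels[x]:
            d_ax = cosine_distance(embeddings[a], embeddings[x])
            d_bx = cosine_distance(embeddings[b], embeddings[x])
            if d_ax > d_bx:
                errors += 1.0
            elif d_ax == d_bx:
                errors += 0.5
            total += 1
    return errors / total if total else float("nan")


# Hypothetical toy embeddings: dims 0-1 encode meaning (strongly),
# dims 2-3 encode language (weakly).
embeddings = np.array([
    [2.0, 0.0, 0.5, 0.0],  # English, meaning 0
    [2.0, 0.0, 0.0, 0.5],  # French,  meaning 0
    [0.0, 2.0, 0.5, 0.0],  # English, meaning 1
    [0.0, 2.0, 0.0, 0.5],  # French,  meaning 1
])
languages = ["en", "fr", "en", "fr"]
meanings = [0, 0, 1, 1]

print(abx_error(embeddings, meanings))   # 0.0: content is fully discriminable
print(abx_error(embeddings, languages))  # 0.5: language is at chance
```

In this toy set, built so that meaning dominates the geometry, content is perfectly discriminable while language sits at chance; a representation that encoded language strongly would show the opposite pattern.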

If so, join us for our free two-day workshop, 'Long Form Audio Recordings: A to Z', generously supported by Cardiff University Doctoral Academy.

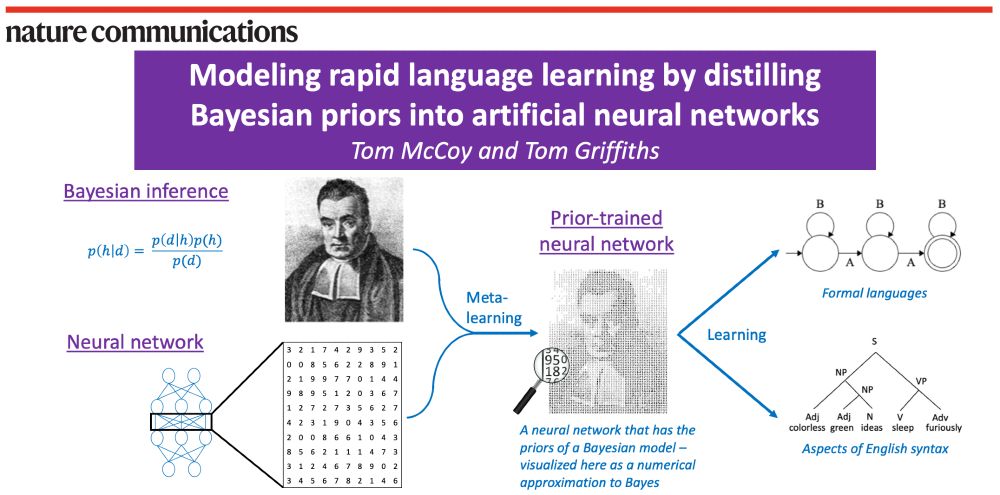

Bayesian models can learn rapidly. Neural networks can handle messy, naturalistic data. How can we combine these strengths?

Our answer: Use meta-learning to distill Bayesian priors into a neural network!

www.nature.com/articles/s41...

1/n
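
A toy sketch of the general idea, under loudly stated assumptions: the "Bayesian prior" here is a hypothetical Beta(2, 5) prior over a coin's bias, and a lookup table of next-flip probabilities stands in for the neural network. Because every training episode is sampled from the prior, the learner that minimizes cross-entropy converges toward the Bayesian posterior predictive, (h + α) / (n + α + β). The function names are illustrative and this is not the method from the linked paper.

```python
import numpy as np


def meta_train_predictor(alpha, beta, n_max, episodes, seed=0):
    """Fit a tabular next-flip predictor on episodes sampled from a Beta(alpha, beta) prior.

    Entry [n, h] estimates P(next flip is heads | h heads among the first n flips).
    For a table, the cross-entropy minimizer is the empirical frequency,
    so it is computed directly here instead of by gradient descent.
    """
    rng = np.random.default_rng(seed)
    heads_next = np.zeros((n_max + 1, n_max + 1))
    visits = np.zeros((n_max + 1, n_max + 1))
    for _ in range(episodes):
        theta = rng.beta(alpha, beta)          # one task drawn from the prior
        flips = rng.random(n_max + 1) < theta  # one episode of coin flips
        h = 0
        for n in range(n_max + 1):
            visits[n, h] += 1
            heads_next[n, h] += float(flips[n])
            h += int(flips[n])
    with np.errstate(invalid="ignore", divide="ignore"):
        return heads_next / visits             # NaN where (n, h) never occurred


def posterior_predictive(alpha, beta, n, h):
    # Exact Beta-Bernoulli posterior predictive, for comparison.
    return (h + alpha) / (n + alpha + beta)


table = meta_train_predictor(alpha=2.0, beta=5.0, n_max=5, episodes=20_000)
for n, h in [(0, 0), (1, 1), (5, 1)]:
    print(n, h, round(table[n, h], 3), round(posterior_predictive(2.0, 5.0, n, h), 3))
```

The table can only answer for contexts it has seen; replacing it with a neural network is what would let the distilled prior extend to richer, messier inputs.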

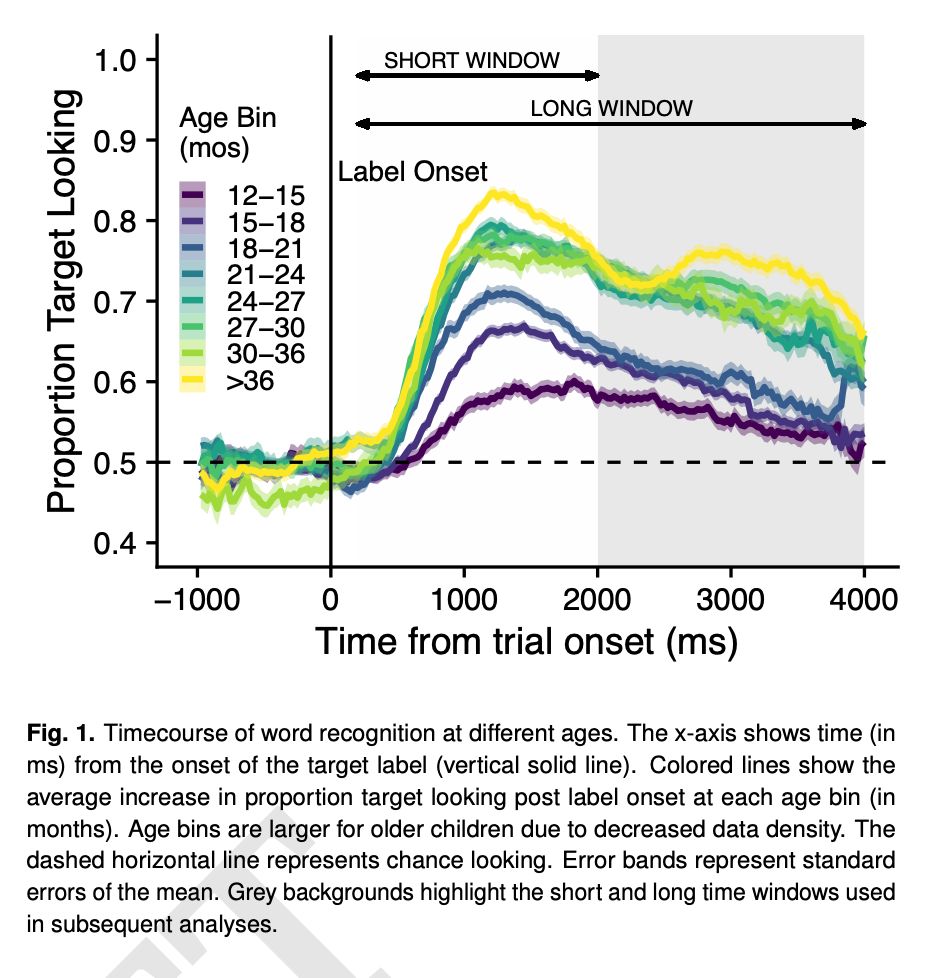

recognition support language learning across early childhood": osf.io/preprints/ps...

onlinelibrary.wiley.com/doi/10.1111/...
