Studying work involving intelligent machines, especially robots. @MITSloan PhD, @Ucsb Asst Prof, @Stanford and @MIT Digital Fellow, @Tedtalks @Thinkers50

Oregon State University; University of California, Santa Barbara; University of California System; Massachusetts Institute of Technology • Digital Economy and Work Transformation, Innovation and Knowledge Management, Surgical Simulation and Training

Reposted by Matt Beane

Reposted by Helen Margetts, Evan Selinger, Dan MacLean, Matt Beane

We're hosting our Wharton AI and the Future of Work Conference on 5/21-22. Last year was a great event with some of the top papers on AI and work.

Paper submission deadline is 3/3. Come join us! Submit papers here: forms.gle/ozJ5xEaktXDE...

Reposted by Amy X. Zhang, Matt Beane

Table of contents:

Link below to hear the full discussion on Friday, December 13 at 11 am EST!

linktr.ee/RitaMcGrath

@mattbeane.bsky.social

Most engineers/CS working on AI presume away well-established, profound brakes on AI diffusion.

Most social scientists presume away how AI use could reshape those brakes.

Let's gather these groups, examine these brakes 1-by-1, make grounded predictions.

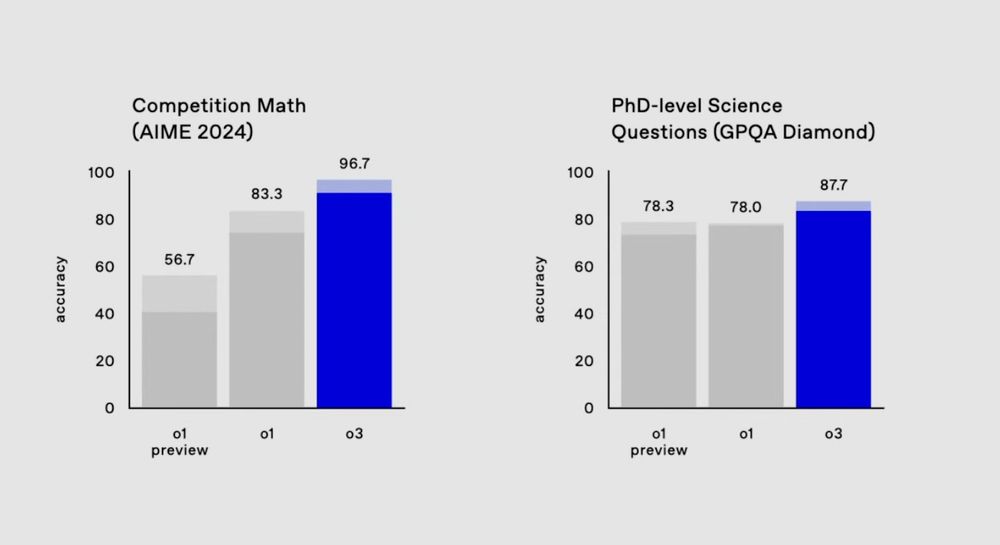

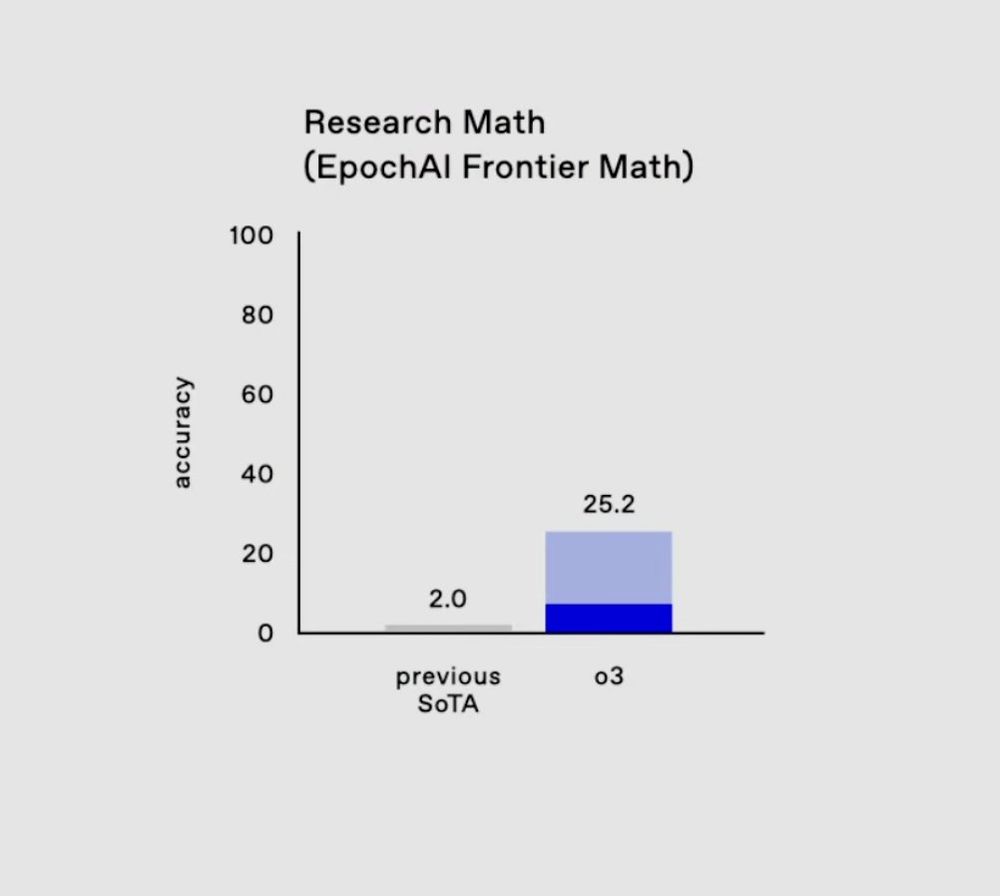

Most folks don’t regularly have a lot of tasks that bump up against the limits of human intelligence, so won’t see it

Some insightful opening remarks, followed by an absolutely stonking keynote by @mattbeane.bsky.social. Crystallised a lot of my worries around preserving expertise in software engineering during the age of GenAI. I have reading to do.

www.technologyreview.com/2012/07/18/1...

To attribute complex, intentional design or deeper meaning to simple emergent behaviors of large language models, especially when such behaviors are more likely explained by straightforward technical constraints or training artifacts.

And I can verify on your rule! I was so flabbergasted and honored. Your feedback was rich and so helpful. Remain grateful.

But ads? F*ck that noise. Seriously, straight up evil.

Only/ever the "following" page. Even there things got pretty intolerable towards/around the election, now settled down.

Joe said, "I've got something he can never have"

And I said, "What on earth could that be, Joe?"

And Joe said, "The knowledge that I've got enough"

www.linkedin.com/pulse/kurt-v...