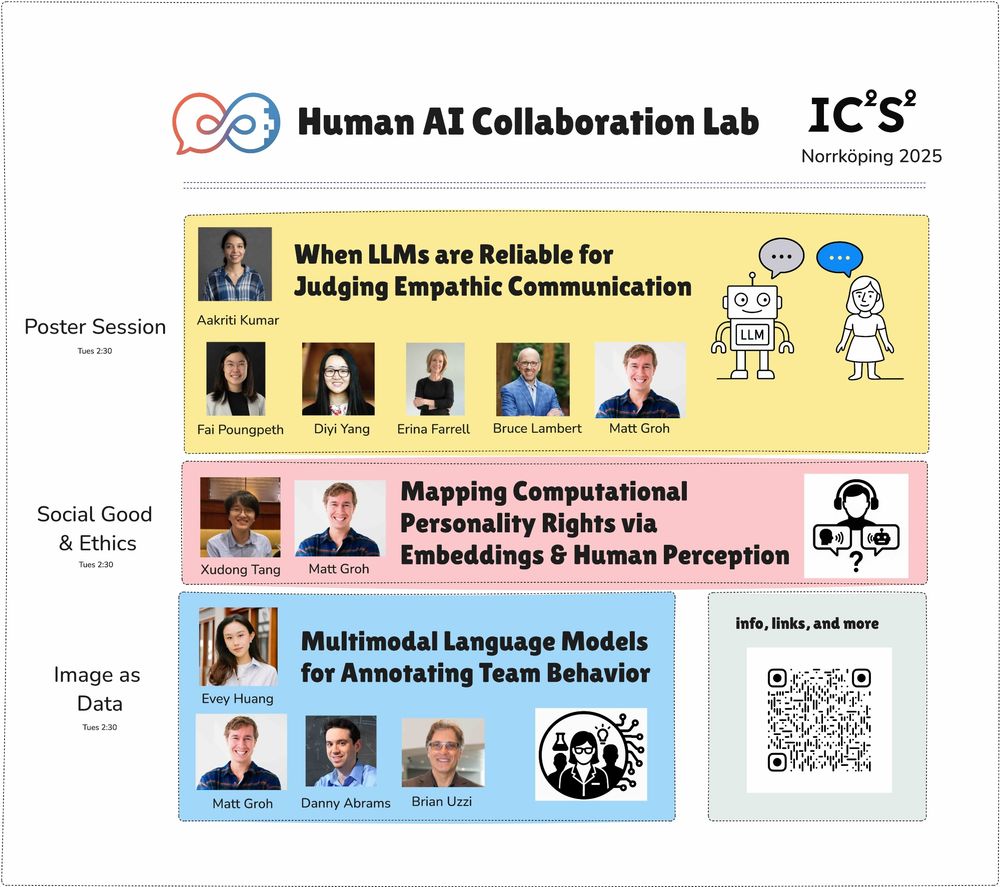

Excited to share the first paper from my postdoc (!!) investigating when LLMs are reliable judges - with empathic communication as a case study 🧐

🧵👇

That's a big question for frontier labs and it's a big question for computational social science.

Excited to share our findings (led by @aakriti1kumar.bsky.social!) on how to address this question for any subjective task & specifically for empathic communication

🗓️ Wed 10/22 at 12pm US Central

🔗 bit.ly/WedatNICO

🗓️ Wed 10/8 at 12pm US Central

🔗 bit.ly/WedatNICO

More at human-ai-collaboration-lab.kellogg.northwestern.edu/ic2s2

See you there!

#IC2S2

insight.kellogg.northwestern.edu/article/are-...

Large-scale experiment with 750k observations addressing:

(1) How photorealistic are today's AI-generated images?

(2) What features of images influence people's ability to distinguish real/fake?

(3) How should we categorize artifacts?

Dashun Wang and I are seeking a creative, technical, interdisciplinary researcher for a joint postdoc fellowship between our labs.

If you're passionate about Human-AI Collaboration and Science of Science, this may be for you! 🚀

Please share widely!

So many fantastic discussions as we witnessed the frontier of AI shift even further into hyperdrive✨

Props to students for all the hard work and big thanks to teaching assistants and guest speakers 🙏

And, why does the amodal completion illusion lead us to see a super long reindeer in the image on the right?

This week @chazfirestone.bsky.social joined the NU CogSci seminar series to address these fundamental questions

And really cool to hear Dr. Katie Fraser's research on AI for early detection of neurodegenerative diseases in the first half of the episode!

open.spotify.com/episode/3X5H...

I updated the syllabus with a couple of 2024 books + new lectures and readings.

What else do you think MBA students should be reading on this topic?

docs.google.com/document/d/1...

We agree, which is why we have a page dedicated to teaching about #AI, with resources including quizzes & an infographic: newslit.org/ai/

www.fox47news.com/politics/dis...

A 🧵 about my JMP: Using language to generate hypotheses

This paper explores how language shapes behavior. Our contribution, however, is not in testing specific hypotheses; it's in generating them (using #LLMs + #ML + #BehSci)

But how exactly?

www.rafaelmbatista.com/jmp/

Re-reading this before joining a panel of lawyers to speak on deepfakes

go.activecalendar.com/FordhamUnive...

Lack of domain expertise & lack of knowledge of AI's capabilities and limitations → falling for, and even preferring, simulacra

If you're excited about computational social science, LLMs, digital experiments, and real-world problem solving, this could be a great fit

Please reshare!

Deets 👇

go.bsky.app/MRV1Wa1

User error seems to be the culprit

If users don't know how to interact with the technology (however easy it may seem), then the experiment misses out on what would happen if participants had basic knowledge of LLMs

www.kellogg.northwestern.edu/news/blog/20...
