MLPerf Client v1.5 is here!

New AI PC benchmarking features:

- Windows ML support for improved GPU/NPU performance

- Expanded platforms: Windows x64, Windows on Arm, macOS, Linux, plus iPad app

- Experimental power/energy measurement

GUI improvements out of beta

Download: github.com/mlcommons/ml...

They happen when researchers + practitioners + industry leaders are in the same room, working on shared challenges.

MLCommons Endpoints

Dec 1, San Diego (one day!)

Registration ↓

www.eventbrite.com/e/mlcommons-...

#Endpoints2025

Good, bad, or indifferent, AI is something we have to deal with. Join us for a bit more of a journey into the fundamentals of AI and some of the research we’ve done with our members and partners.

Cc @odihq.bsky.social

Learn, connect, and shape the future of AI with top experts at Qualcomm Hall.

🗓 Dec 1–2 | 🎟 Free tickets available now!

www.eventbrite.com/e/mlcommons-...

#AI #MachineLearning #SanDiego

babeltechreviews.com/the-corsair-...

Standards are made by those in the room. mlcommons.org/2025/11/iso-...

#AIStandards #MLCommons

Record participation: 20 organizations submitted 65 unique systems featuring 12 different accelerators. Multi-node submissions increased 86% over last year, showing the industry's focus on scale.

Results: mlcommons.org/2025/11/trai...

#MLPerf

1/3

This pattern highlights why evolving benchmarks are important. Stay tuned for MLPerf Training v5.1, out on 11/12.

spectrum.ieee.org/mlperf-trends

Not a single one was as secure as it was "safe."

Today, we're releasing the industry's first standardized jailbreak benchmark. Here's what we found 🧵1/6

mlcommons.org/2025/10/ailu...

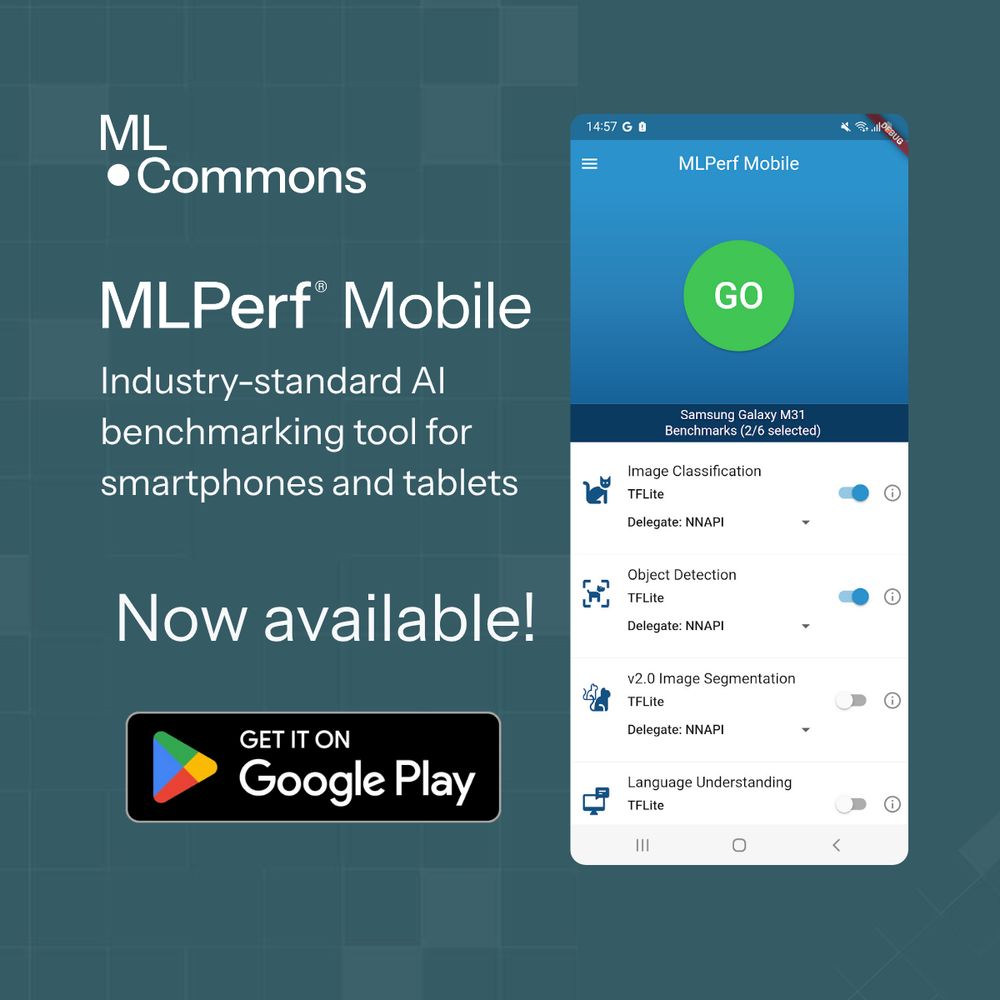

play.google.com/store/apps/d...

By combining:

📂 #Croissant – AI-ready #metadata

⚡ #MCP – agentic access to data & tools

Our new blog introduces Eclair: tools that let #LLMs discover, download & explore millions of #datasets.

mlcommons.org/2025/10/croi...

Technical deep dive: mlcommons.org/2025/09/mlpe... #MLPerf #TinyML #EdgeAI

New streaming wake-word detection test + 70 results measuring sub-100KB neural networks across hardware platforms.

Thanks to Qualcomm, ST Microelectronics, Syntiantcorp & Kai Jiang for pushing tiny ML forward.

View results: mlcommons.org/2025/09/mlpe...

The app is available for Android phones via the Google Play Store and the MLCommons GitHub repo.

Let us know what you think!

t.co/715ENpV6W4

Catch up on this essential reading for AI decisions: www.techarena.ai/content/ai-b...

#MLCommons #MLPerf

Record 27 organizations submitted 1,472 performance results across new and established AI workloads.

Three new benchmarks debut:

Reasoning with DeepSeek R1

Speech to text with Whisper

Small LLM with Llama 3.1 8B

Read More: mlcommons.org/2025/09/mlpe...

MLCommons & AVCC announce MLPerf Automotive v0.5 benchmark results—a major step for transparent, reproducible automotive AI performance data. mlcommons.org/2025/08/mlpe...

mlcommons.org/2025/08/mlpe...

The new benchmark for LLMs on PCs and client systems is now available—featuring expanded model support, new workload scenarios, and broad hardware integration.

Thank you to all submitters! #AMD, #Intel, @microsoft.com, #NVIDIA, #Qualcomm

mlcommons.org/2025/07/mlpe...

Benchmark your Android device’s AI performance on real-world ML tasks with this free, open-source app.

Try it now: play.google.com/store/apps/d...

🔗https://mlcommons.org/2025/06/ares-announce/

1/3

Watch @priya-kasimbeg.bsky.social & @fsschneider.bsky.social speedrun an explanation of the AlgoPerf benchmark, rules, and results all within a tight 5 minutes for our #ICLR2025 paper video on "Accelerating Neural Network Training". See you in Singapore!

In her new op-ed, our President, Rebecca Weiss, breaks down how industry-led AI reliability standards can help executives avoid costly, high-profile failures.

📖 More: bit.ly/3FP0kjg

@fastcompany.com

#MLCommons & @AVCConsortium are accepting submissions for the #MLPerf Automotive Benchmark Suite! Help drive fair comparisons & optimize AI systems in vehicles. Focus is on camera sensor perception.

📅 Submissions close June 13th, 2025

Join: mlcommons.org/community/su...
