Read more: www.databricks.com/blog/reranki...

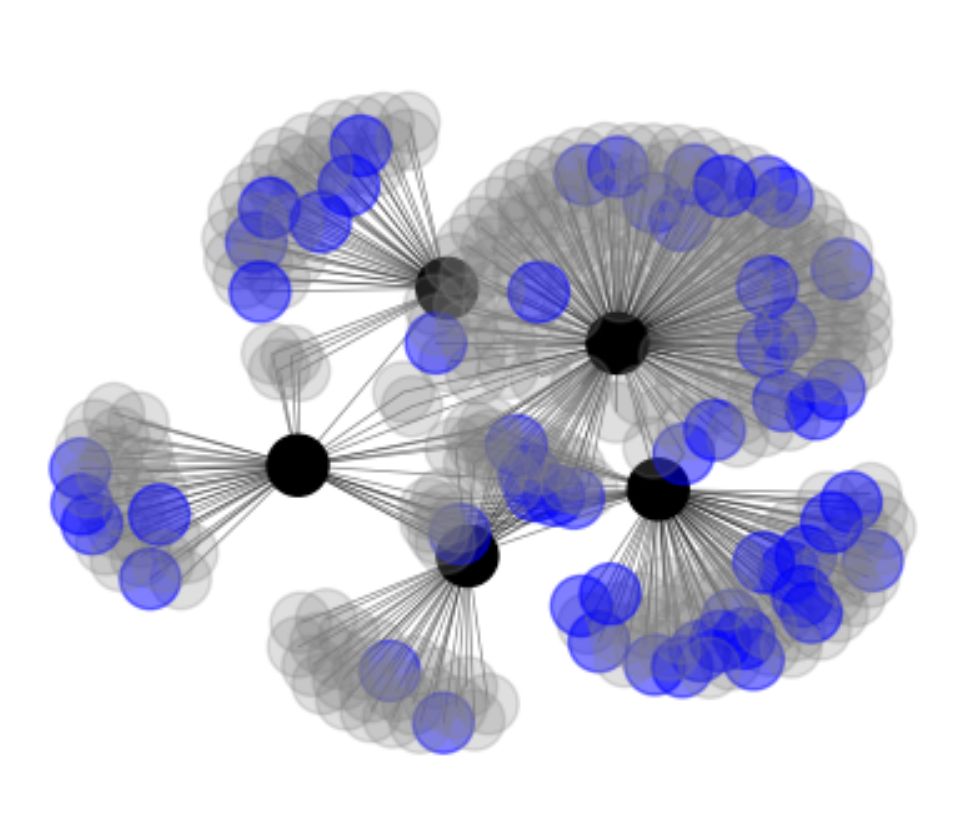

This is a Call for Participation for our CLEF 2025 Lab: test how your IR system holds up over the long term.

Check the details on our page:

clef-longeval.github.io

Down the corner comes one more

And we scream into that city night: “three plus one makes four!”

Well, they seem to think we’re disturbing the peace

But we won’t let them make us sad

’Cause kids like you and me baby, we were born to add

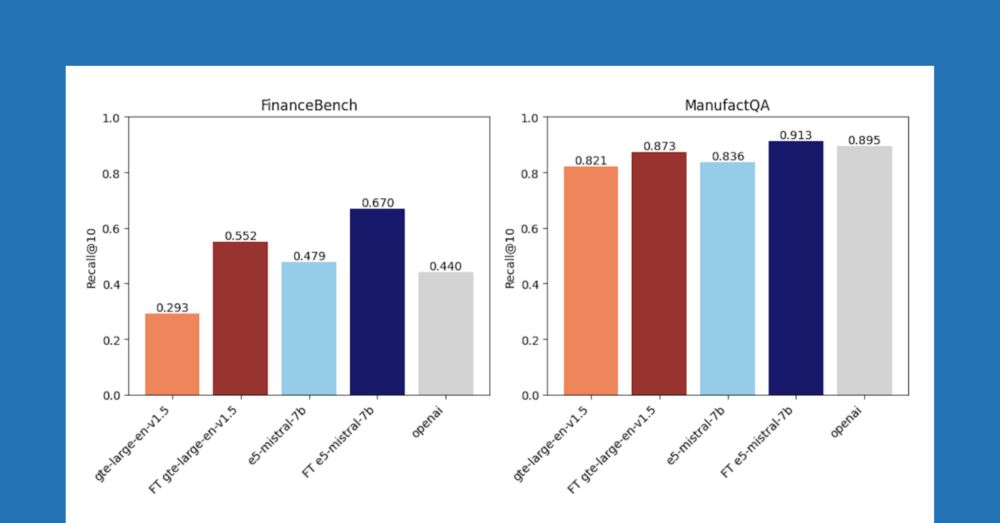

Among other things, I'm excited about embedding finetuning and reranking as modular ways to improve RAG pipelines. Everyone should use these more!
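To make "modular" concrete, here is a minimal sketch that assumes a two-stage retrieve-then-rerank design. Both scorers are toy stand-ins invented for illustration (the hypothetical `cosine_bow` plays the embedding model and `bigram_overlap` plays a reranker such as a cross-encoder). This is not the pipeline from the linked post or any real model's API. The point is that improving either stage means swapping one function, and the rest of the pipeline stays the same.

```python
import math
from collections import Counter
from typing import Callable, List, Optional

Scorer = Callable[[str, str], float]


def tokenize(text: str) -> List[str]:
    return text.lower().split()


def cosine_bow(query: str, doc: str) -> float:
    """Toy first-stage scorer (stand-in for an embedding model):
    cosine similarity of bag-of-words count vectors."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    dot = sum(q[t] * d[t] for t in q)
    norm = math.sqrt(sum(v * v for v in q.values())) * math.sqrt(
        sum(v * v for v in d.values())
    )
    return dot / norm if norm else 0.0


def bigram_overlap(query: str, doc: str) -> float:
    """Toy reranker (stand-in for a cross-encoder): counts shared word
    bigrams, so it is sensitive to word order while cosine_bow is not."""
    def bigrams(text: str) -> set:
        toks = tokenize(text)
        return set(zip(toks, toks[1:]))
    return float(len(bigrams(query) & bigrams(doc)))


def retrieve(query: str, corpus: List[str], k: int,
             scorer: Scorer = cosine_bow) -> List[str]:
    """Stage 1: cheap scoring over the whole corpus, keep the top k."""
    return sorted(corpus, key=lambda doc: scorer(query, doc), reverse=True)[:k]


def rerank(query: str, candidates: List[str], scorer: Scorer, n: int) -> List[str]:
    """Stage 2: more expensive scoring over only the k candidates, keep the top n."""
    return sorted(candidates, key=lambda doc: scorer(query, doc), reverse=True)[:n]


def rag_context(query: str, corpus: List[str], k: int = 3, n: int = 1,
                reranker: Optional[Scorer] = None) -> List[str]:
    """Passages handed to the generator. The reranker is an optional plug-in stage."""
    candidates = retrieve(query, corpus, k)
    if reranker is None:
        return candidates[:n]
    return rerank(query, candidates, reranker, n)


# Usage on a tiny made-up corpus.
corpus = [
    "retrieval improves embedding finetuning",
    "embedding finetuning improves retrieval for rag pipelines",
    "cooking pasta at home",
]
query = "embedding finetuning improves retrieval"

print(rag_context(query, corpus))                           # first stage only
print(rag_context(query, corpus, reranker=bigram_overlap))  # with reranking
```

Here the bag-of-words stage ranks the reordered sentence first, because it has the same words as the query. The order-aware reranker promotes the passage that actually matches the query phrasing, and `retrieve` never had to change.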

www.databricks.com/blog/improvi...

The pipeline that creates the data mix:

For more of my thoughts, see drive.google.com/file/d/1sk_t...

Introduces ModernBERT, a bidirectional encoder that advances BERT-like models with an 8K-token context length.

📝 arxiv.org/abs/2412.13663

👨🏽‍💻 github.com/AnswerDotAI/...

Shows that Mamba-based models achieve reranking performance comparable to transformers while being more memory-efficient, with Mamba-2 outperforming Mamba-1.

📝 arxiv.org/abs/2412.14354

Is reasoning a task? Or is reasoning a method for generating answers, for any task?

willwhitney.com/computing-in...

1. The importance of stupidity in scientific research

Open Access

journals.biologists.com/jcs/article/...