Tech Lead & Manager

Google DeepMind

msajjadi.com

EurIPS is a community-organized conference where you can present accepted NeurIPS 2025 papers. It is endorsed by @neuripsconf.bsky.social and @nordicair.bsky.social and co-developed by @ellis.eu.

eurips.cc

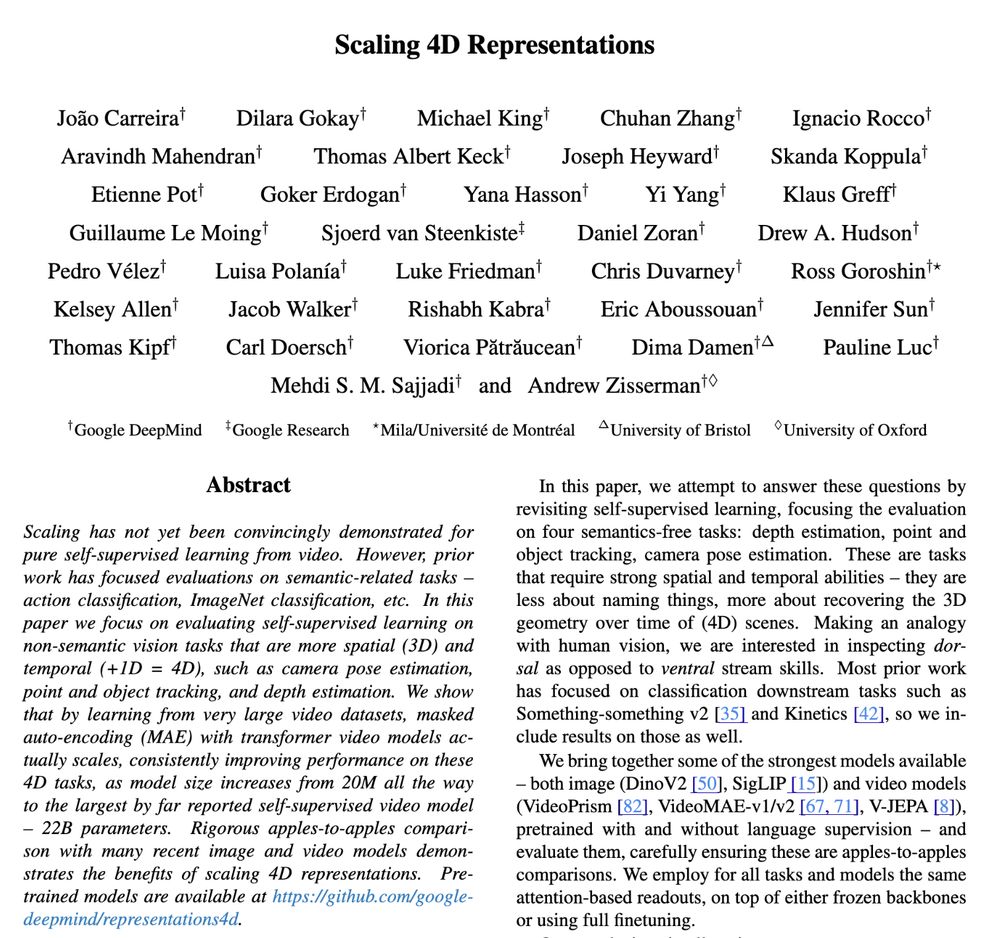

Self-supervised learning from video does scale! In our latest work, we scaled masked auto-encoding models to 22B params, boosting performance on pose estimation, tracking & more.

Paper: arxiv.org/abs/2412.15212

Code & models: github.com/google-deepmind/representations4d

We investigated this question and more in our latest work, please check it out!

*From Image to Video: An Empirical Study of Diffusion Representations*

arxiv.org/abs/2502.07001

SRT: srt-paper.github.io

OSRT: osrt-paper.github.io

RUST: rust-paper.github.io

DyST: dyst-paper.github.io

MooG: moog-paper.github.io

arxiv.org/abs/2412.14294

Causal, with 3× fewer parameters, 12× less memory, and 5× fewer FLOPs than (non-causal) ViViT, while matching or outperforming it on Kinetics & SSv2 action recognition.

Code and checkpoints out soon.