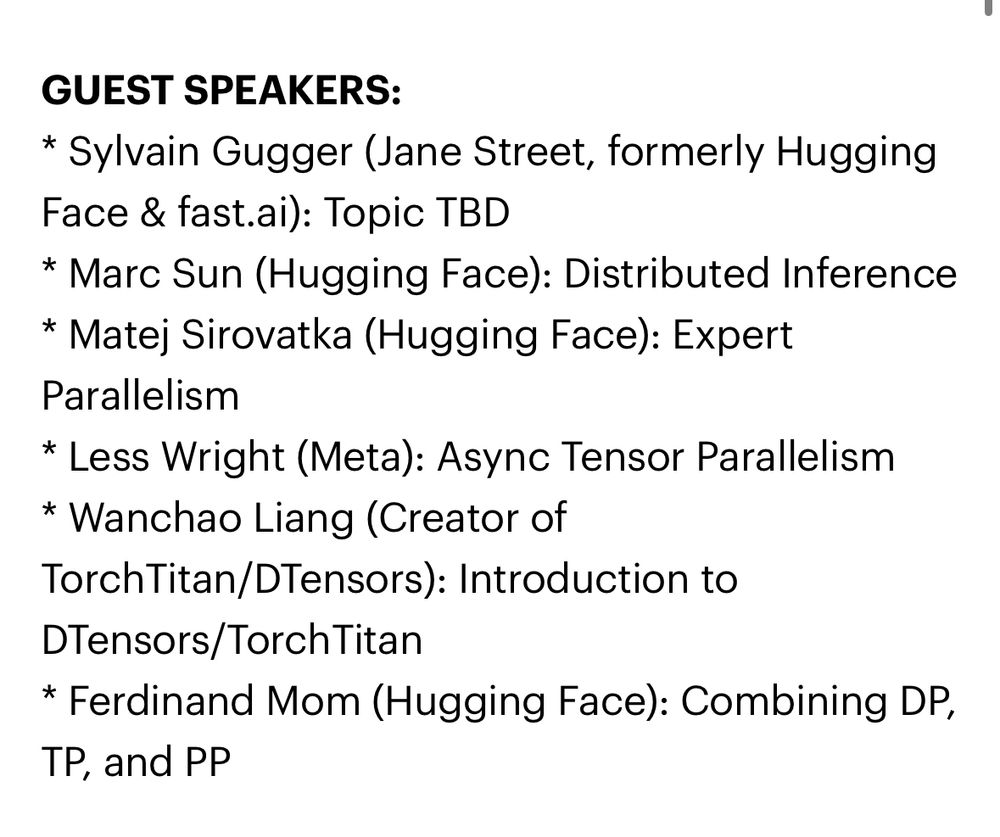

Back very briefly to mention I’m working on a new course, and there’s a star-studded set of guest speakers 🎉

From Scratch to Scale: Distributed Training (from the ground up).

From now until I’m done writing the course material, it’s 25% off :)

maven.com/walk-with-co...

When you can't keep multiple copies (or mmaps) of a dataset in memory, whether because of too many concurrent streams, low resource availability, or a slow CPU, dispatching is here to help.
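
A rough sketch of the idea, simulated in plain Python with no real communication: a single process reads one global batch and cuts it into one chunk per device. The name `dispatch_batches` and its parameters are hypothetical, picked for illustration; in a real run the chunks would be sent to the other ranks (for example with a scatter) instead of being returned in a list.

```python
from typing import Iterator, List, Sequence


def dispatch_batches(
    dataset: Sequence[int], per_device_batch: int, world_size: int
) -> Iterator[List[List[int]]]:
    """Yield, for each step, one chunk per rank cut from a single global batch."""
    global_batch = per_device_batch * world_size
    for start in range(0, len(dataset), global_batch):
        # Only one process (think "rank 0") ever touches the dataset.
        batch = list(dataset[start:start + global_batch])
        # Slice the global batch so rank r gets the r-th contiguous chunk.
        yield [
            batch[r * per_device_batch:(r + 1) * per_device_batch]
            for r in range(world_size)
        ]


if __name__ == "__main__":
    for step, chunks in enumerate(dispatch_batches(list(range(8)), 2, 2)):
        print(step, chunks)
```

The trade-off is that only one copy of the dataset lives in memory, at the cost of extra communication every step.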

When performing distributed data parallelism, we split the dataset every batch so every device sees a different chunk of the data. There are different methods for doing so. One example is sharding at the *dataset* level, shown here.
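
As a minimal sketch of dataset-level sharding (an assumption about one simple way to do it, not any particular library's implementation): each rank takes every `world_size`-th sample starting at its own rank, then batches its shard locally. `shard_dataset` and `batches` are hypothetical helper names.

```python
from typing import Iterator, List, Sequence


def shard_dataset(dataset: Sequence[int], rank: int, world_size: int) -> List[int]:
    """Return the samples owned by `rank`: indices rank, rank + world_size, ..."""
    return list(dataset[rank::world_size])


def batches(shard: List[int], batch_size: int) -> Iterator[List[int]]:
    """Cut a rank's shard into consecutive batches."""
    for start in range(0, len(shard), batch_size):
        yield shard[start:start + batch_size]


if __name__ == "__main__":
    data = list(range(8))
    for rank in range(2):
        shard = shard_dataset(data, rank, world_size=2)
        print(rank, list(batches(shard, batch_size=2)))
```

Every process still needs access to the dataset here (a copy or an mmap), but no data has to move between devices at batch time.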

11/10 customer support

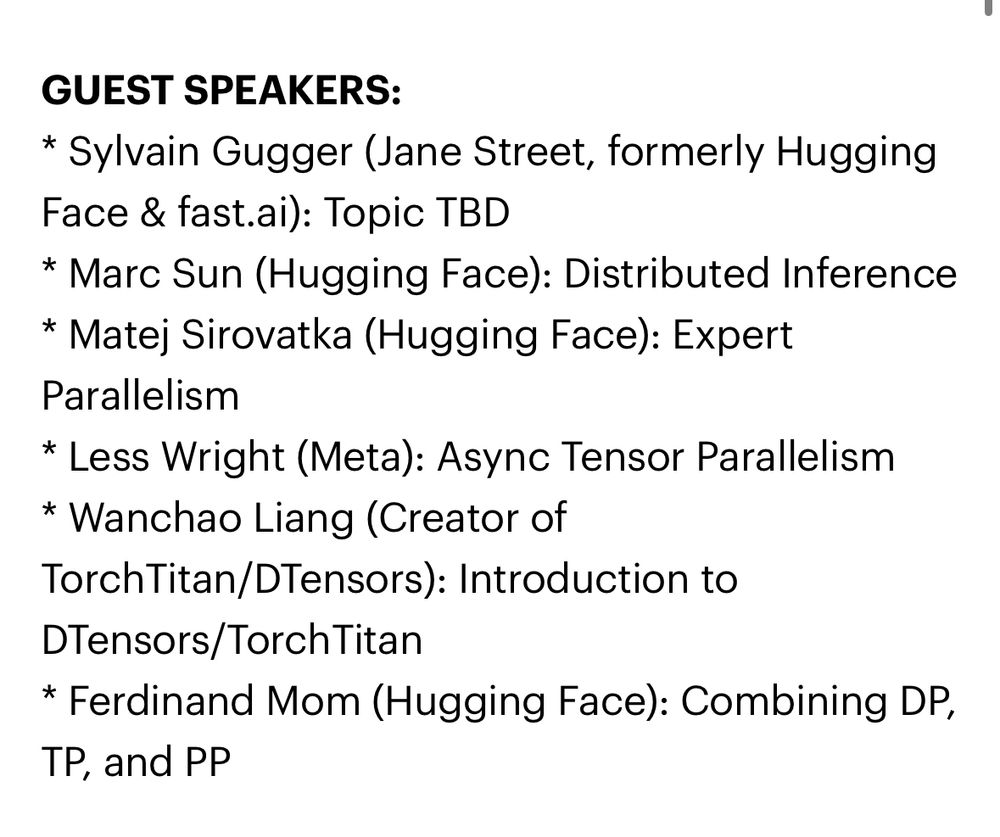

145kg squat

180kg deadlift

90kg bench

Going from 260lbs back squat to 115lbs front squat was *lovely*

github.com/johnousterho...

Who’s going to be the first to start a study group?

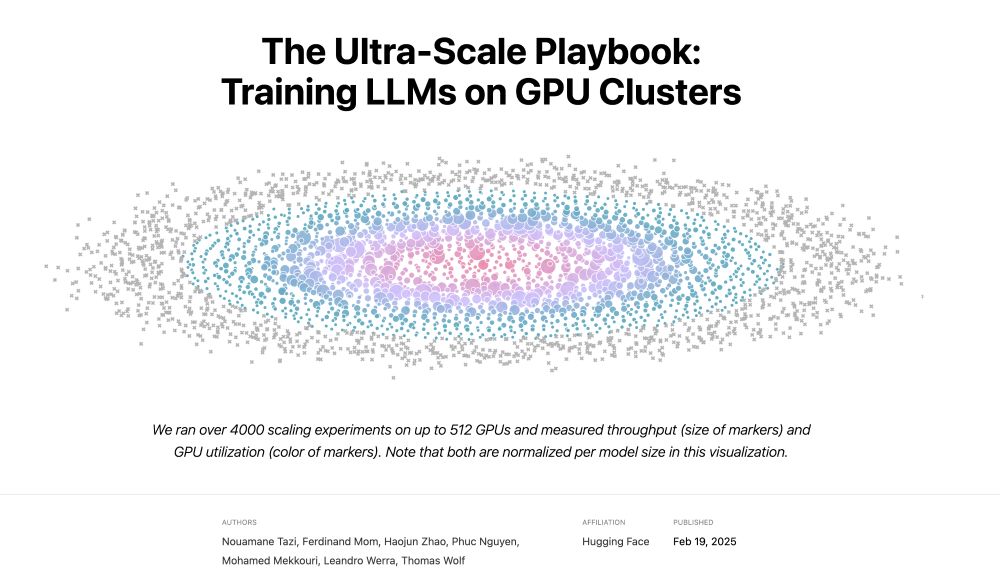

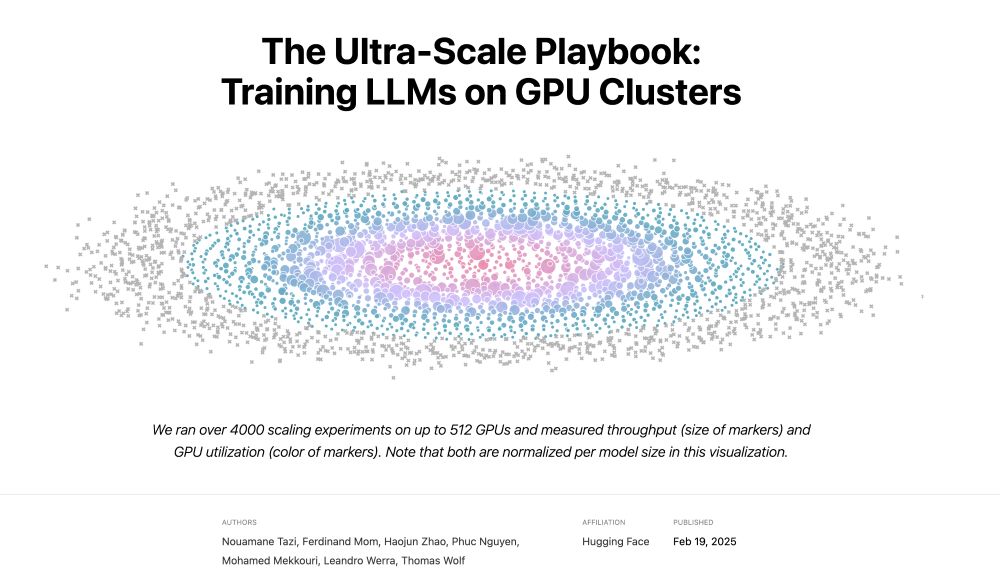

A book to learn all about 5D parallelism, ZeRO, CUDA kernels, and how/why to overlap compute & comms, with theory, motivation, interactive plots, and 4000+ experiments!

Eyeballing doing this for the new place

* Selling your house

* Buying a house (*after* putting your old house on the market)

* Lifting competition

All in the same week

Running the equivalent of this space locally via FastAPI and hooking it into the LLM commit message generator got the job done, and very fast (since it’s a T5 model under the hood)

huggingface.co/spaces/mamik...

Follow along: github.com/huggingface/...

simonwillison.net/2025/Jan/24/...

Verizon:

I suppose I’m forcibly done with work for the day, too bad I had to go to the next town over to say that 😅
