🔗 NDIF: https://ndif.us

🧰 NNsight API: https://nnsight.net

😸 GitHub: https://github.com/ndif-team/nnsight

That's easy! (you might think) Because surely it knows: amore, amor, amour are all based on the same Latin word. It can just drop the "e", or add a "u".

ArXiv: arxiv.org/abs/2410.22366

Project Website: sdxl-unbox.epfl.ch/

Watch here: youtu.be/43NnaqGjArA

More details: ndif.us/hotswap.html

Application link: forms.gle/KHVkYxybmK12...

Sign up for the NDIF hot-swapping pilot by October 1st: forms.gle/Cf4WF3xiNzud...

For any questions about the application or the NDIF platform, please contact us at [email protected].

1. Be in the first cohort of users to access models beyond our whitelist

2. Directly control which models are hosted on the NDIF backend

3. Receive guided support on their project from the NDIF team

4. Give feedback, guiding future user experience

goodfire.ai/ for sponsoring! nemiconf.github.io/summer25/

If you can't make it in person, the livestream will be here:

www.youtube.com/live/4BJBis...

Every week you will find new talks on recent research in the science of neural networks. The first few are posted: jackmerullo.bsky.social, Roy Rinberg, and me.

At the @ndif-team.bsky.social YouTube channel: www.youtube.com/@NDIFTeam

www.youtube.com/watch?v=eKd...

🔗 nnsight.net/notebooks/m...

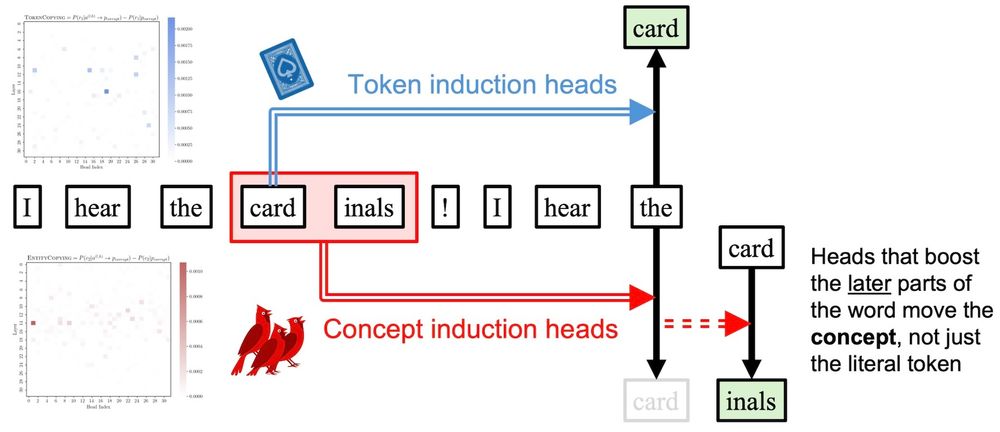

🔗 dualroute.baulab.info/
