Thoughts & opinions are my own and do not necessarily represent my employer.

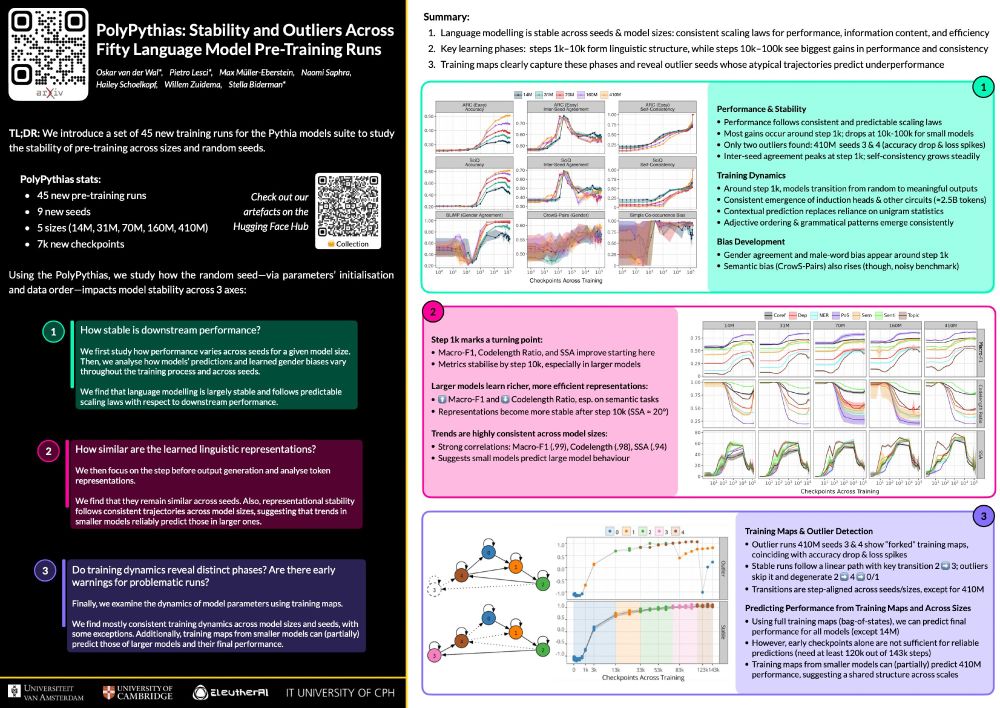

We’ll be presenting our paper on pre-training stability in language models and the PolyPythias 🧵

🔗 ArXiv: arxiv.org/abs/2503.09543

🤗 PolyPythias: huggingface.co/collections/...

go.bsky.app/NZDc31B

We're looking back at two inspiring days of talks, posters, and discussions—thanks to everyone who participated!

wai-amsterdam.github.io

(Since the workshop is non-archival, previously published work is welcome too. So consider submitting previous/future work to join the discussion in Amsterdam!)

👋Join the workshop New Perspectives on Bias and Discrimination in Language Technology 4&5 Nov in #Amsterdam!

OLMo accelerates the study of LMs. We release *everything*, from a toolkit for creating data (Dolma) to training/inference code.

Blog: blog.allenai.org/olmo-open-la...

OLMo paper: allenai.org/olmo/olmo-pa...

Dolma paper: allenai.org/olmo/dolma-p...

bsky.app/profile/ovdw...

doi.org/10.1613/jair...

🤖

bskAI

blueskAI

aclanthology.org/2023.blackbo...

A summary 👇