https://web.mit.edu/phillipi/

Ballroom B, Full day

Amazing lineup of speakers: Ali Farhadi, @alisongopnik.bsky.social, Philipp Krähenbühl, @phillipisola.bsky.social

Today I want to share two new works on this topic:

Eliciting higher alignment: arxiv.org/abs/2510.02425

Unpaired learning of unified reps: arxiv.org/abs/2510.08492

1/9

chatgpt.com/share/689364...

* Better than last year

* About the same

* Worse than last year

Share your thoughts in the thread!

visionbook.mit.edu

We are working on adding some interactive components like search and (beta) integration with LLMs.

Hope this is useful, and feel free to submit GitHub issues to help us improve the text!

cvpr.thecvf.com/Conferences/...

Some of these words are consistently remembered better than others. Why is that?

In our paper, just published in J. Exp. Psychol., we provide a simple Bayesian account and show that it explains >80% of variance in word memorability: tinyurl.com/yf3md5aj

How about this?

Reasoning is planning in knowledge space.

Planning = find a sequence of actions that achieves a goal.

Reasoning = find a sequence of inferences that answers a query.

Then it's no surprise that RL can amortize both.
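
A toy sketch of the analogy (an illustration, not from the post): one generic breadth-first search serves both cases. For planning, the successors are actions on world states; for reasoning, the successors are rule applications on sets of known facts. The function `search`, the arithmetic domain, and the rules `r1`/`r2` are all hypothetical. The sketch shows only the shared search structure; it does not show the RL part, where a learned policy would amortize the search.

```python
from collections import deque
from typing import Callable, Hashable, Iterable, Optional


def search(
    start: Hashable,
    successors: Callable[[Hashable], Iterable[tuple[str, Hashable]]],
    is_goal: Callable[[Hashable], bool],
) -> Optional[list[str]]:
    """Breadth-first search: return the shortest list of step labels from start to a goal state."""
    frontier = deque([start])
    parent = {start: None}  # state -> (previous state, step label); None marks the start
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            steps = []
            while parent[state] is not None:  # walk back to the start
                state, step = parent[state]
                steps.append(step)
            return steps[::-1]
        for step, nxt in successors(state):
            if nxt not in parent:  # skip states already discovered
                parent[nxt] = (state, step)
                frontier.append(nxt)
    return None  # goal unreachable


# Planning (hypothetical toy domain): states are numbers, actions are arithmetic moves.
def arithmetic_moves(x: int):
    for name, y in (("+1", x + 1), ("*2", x * 2)):
        if y <= 20:  # bound the state space so the search is finite
            yield name, y


# Reasoning (hypothetical rules): states are sets of known facts, steps apply Horn rules.
RULES = [
    ("r1", frozenset({"human(socrates)"}), "mortal(socrates)"),
    ("r2", frozenset({"mortal(socrates)"}), "will_die(socrates)"),
]


def inferences(facts: frozenset):
    for name, premises, conclusion in RULES:
        if premises <= facts and conclusion not in facts:
            yield name, facts | {conclusion}


plan = search(1, arithmetic_moves, lambda x: x == 10)
proof = search(frozenset({"human(socrates)"}), inferences,
               lambda facts: "will_die(socrates)" in facts)
print(plan)   # ['+1', '*2', '+1', '*2']
print(proof)  # ['r1', 'r2']
```

The only thing that changes between the two uses is what counts as a state and a step; the search procedure is identical.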

So why did chip stocks crash?

Is it because people assume that AI demand will saturate at a certain level of intelligence? Or is there some other explanation?

Now we live in an era when it is becoming meaningful to search for "extraterrestrial life" not just in our universe but in simulated universes as well.

This project provides new tools toward that dream:

Blog: sakana.ai/asal/

We propose a new method called Automated Search for Artificial Life (ASAL) which uses foundation models to automate the discovery of the most interesting and open-ended artificial lifeforms!

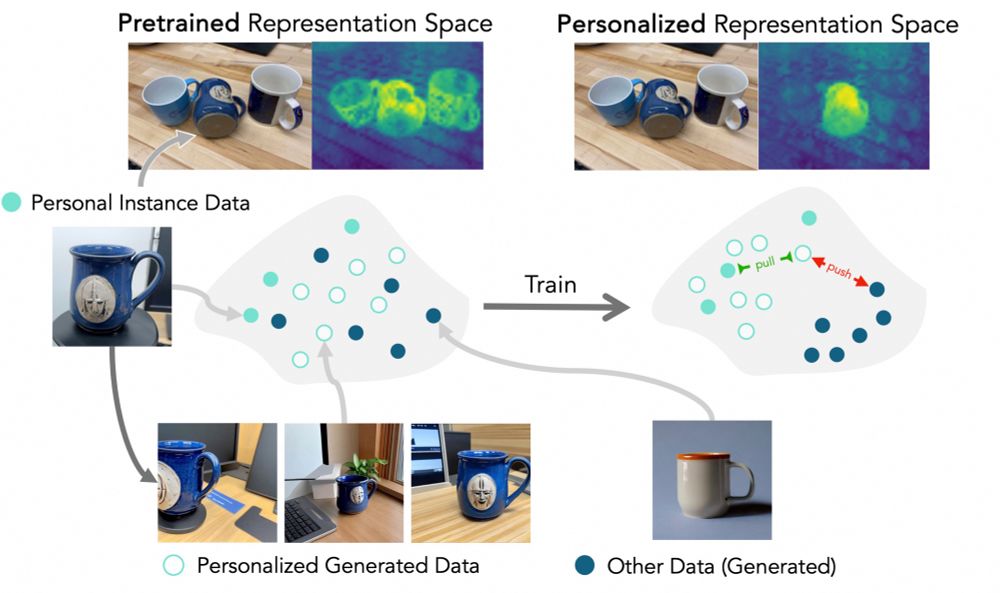

In our new paper, we show you can adapt general-purpose vision models to these tasks from just three photos!

📝: arxiv.org/abs/2412.16156

💻: github.com/ssundaram21/...

(1/n)

They are all about the following question: how to characterize the geometry of deep learning problems, and in particular how to measure *distance*?

Each paper/talk gives a rather different answer, detailed below:

A few items I wasn't sure where to put. You could break it down differently. What did I get wrong?

A big dream in AI is to create world models of sufficient quality that you can train agents within them.

Classic simulators lack visual diversity and realism. GenAI lacks physical accuracy. But combining the two can work pretty well!

Paper: arxiv.org/abs/2411.00083

Hope we can get a critical mass like there was on science Twitter!
