www.science.org/doi/full/10....

(1/n)

w/ @neuranna.bsky.social @evfedorenko.bsky.social @nancykanwisher.bsky.social

arxiv.org/abs/2511.19757

1/n🧵👇

By Mars and Passingham

"Understanding anthropoid foraging challenges may thus contribute to our understanding of human cognition"

Going to the top of the reading list!

doi.org/10.1016/j.ne...

#neuroskyence

with @joshtenenbaum.bsky.social & @rebeccasaxe.bsky.social

Punishment, even when intended to teach norms and change minds for the good, may backfire.

Our computational cognitive model explains why!

Paper: tinyurl.com/yc7fs4x7

News: tinyurl.com/3h3446wu

🧵

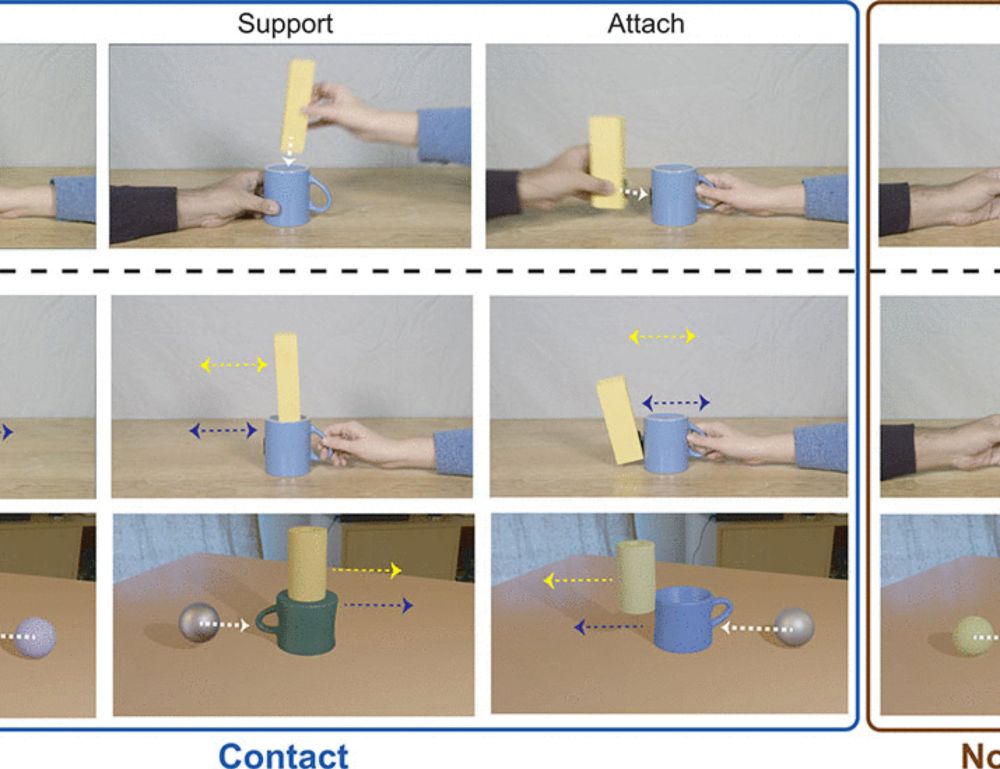

Video abstract: www.youtube.com/watch?v=B0XR...

Paper: authors.elsevier.com/a/1lWxv3QW8S...

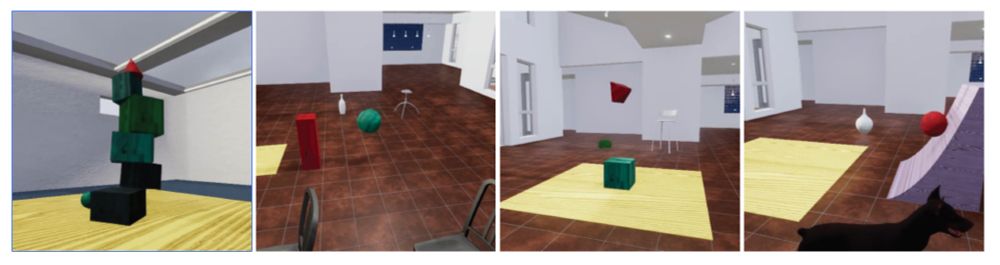

New Preprint (link: tinyurl.com/LangLOT) with @alexanderfung.bsky.social, Paris Jaggers, Jason Chen, Josh Rule, Yael Benn, @joshtenenbaum.bsky.social, @spiantado.bsky.social, Rosemary Varley, @evfedorenko.bsky.social

1/8

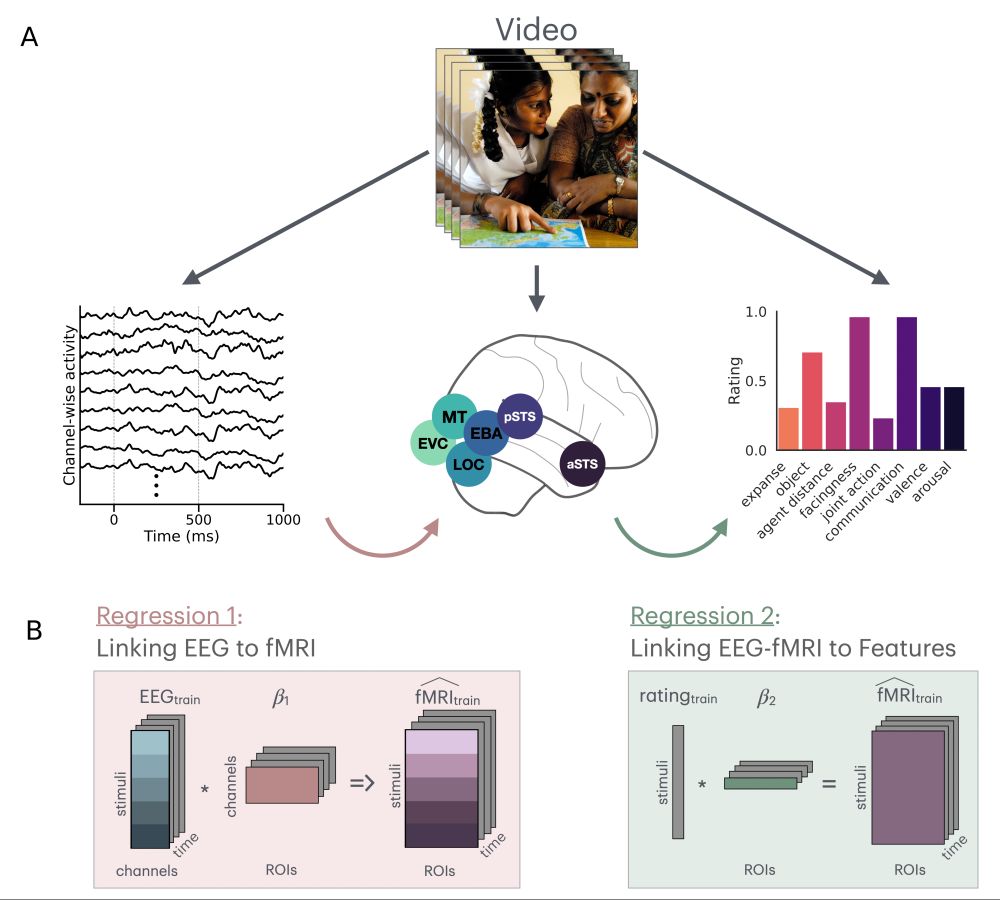

osf.io/preprints/ps...

#CogSci #EEG

1/n

elifesciences.org/articles/93033

careers.peopleclick.com/careerscp/cl...

......

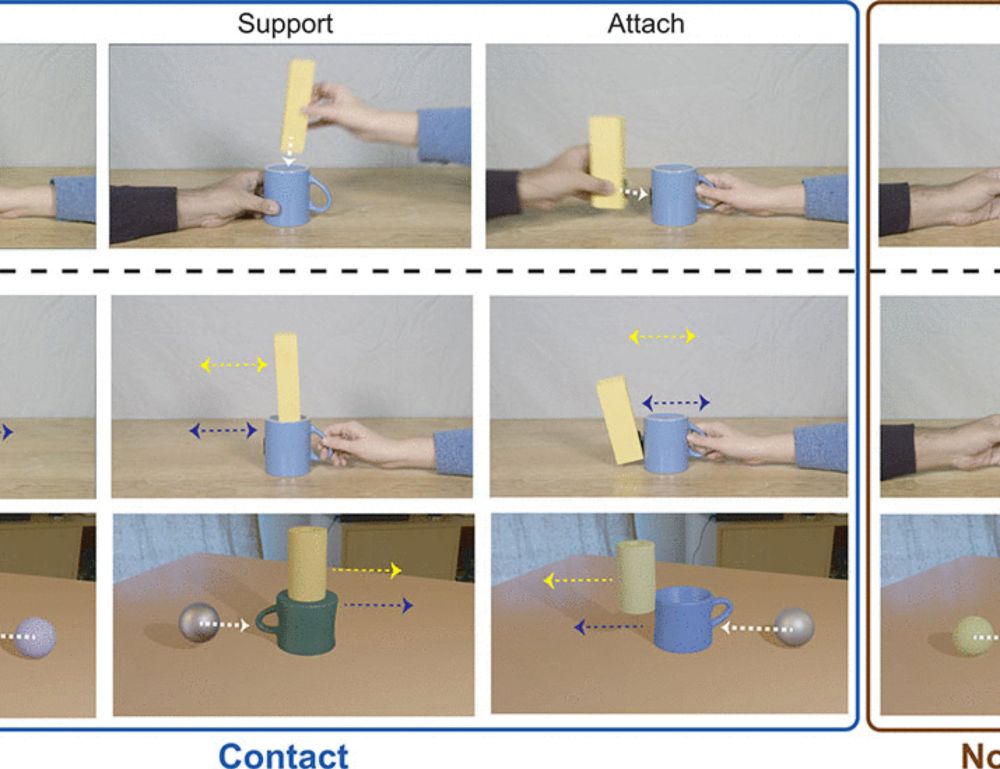

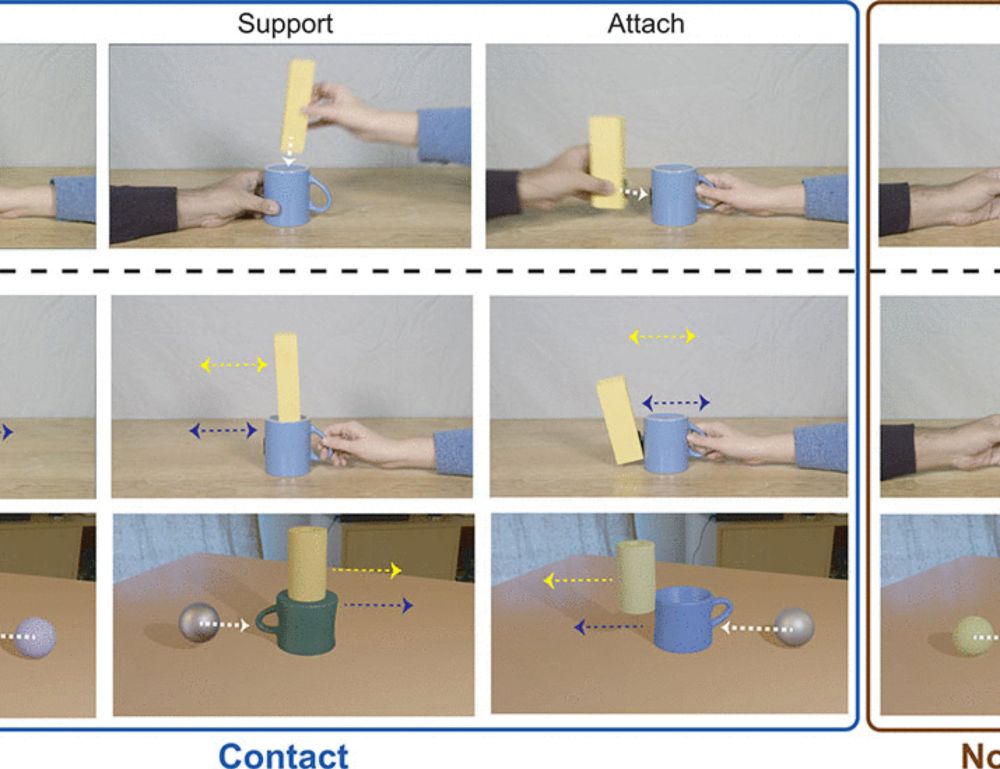

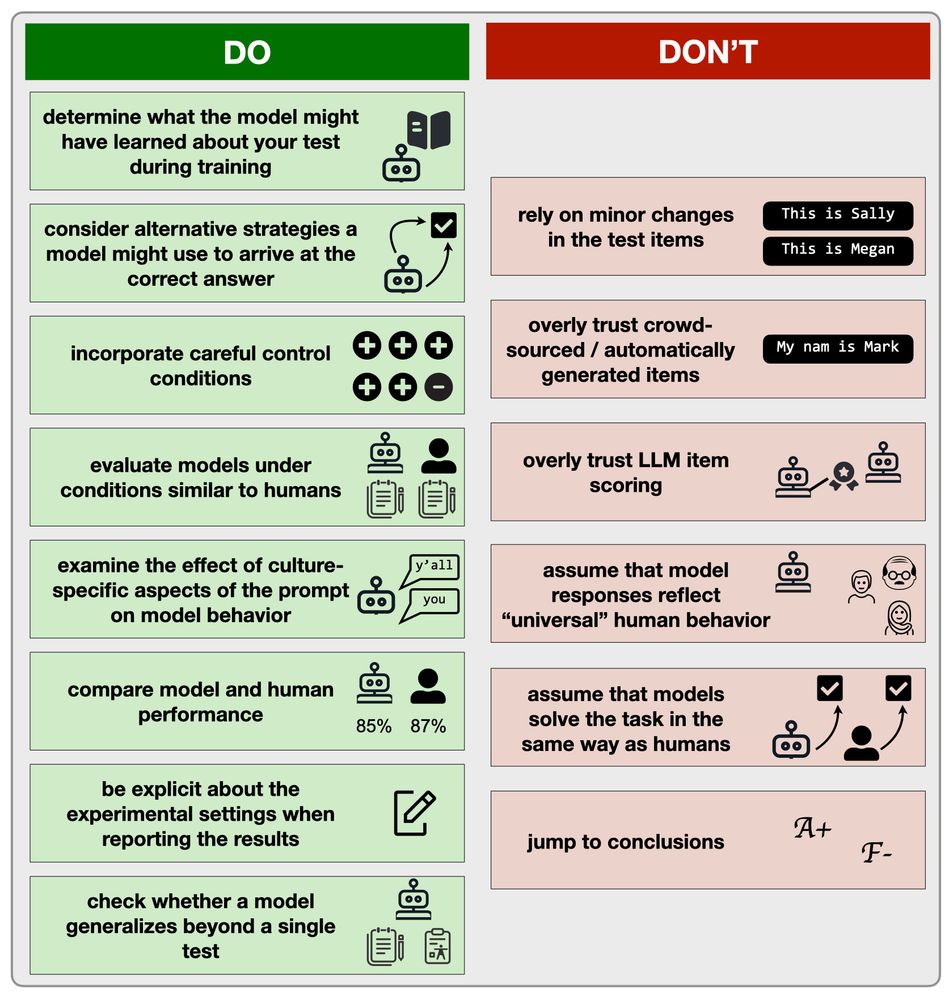

doi.org/10.1038/s415...

posting here with a figure that didn't make it into the final draft and is now instead a boring table :P

#CogSci #LLMs #AI

Working with myself,

@stephanierossit.bsky.social

@timkietzmann.bsky.social

Funded by @leverhulme.bsky.social

www.jobs.ac.uk/job/DLL004/p...