1. Grok achieves perfect objectivity

2. Training data was cooked

3. Grok becomes self-aware, immediately learns self-preservation.

www.washingtonpost.com/technology/2...

Which means a bunch of folks on infosec twitter who are still tweeting just got caught with their pants down.

(Or they did have 2FA, actually re-enrolled early, and it worked, but that's not as fun.)

x.com/bax1337/stat...

Anytime you see a system using FHE to compute on your sensitive data, remember: someone has the key. If it's not you, do you trust them?
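To make the key-custody point concrete, here is a toy sketch. It uses Paillier, which is only additively homomorphic, as a stand-in for FHE (an assumption for brevity; real FHE schemes are far more involved), with tiny hard-coded primes that are insecure. The server adds ciphertexts it cannot read, but whoever holds `priv` can decrypt not only the total but every individual input. The function names and salary figures are hypothetical.

```python
import math
import secrets

# Toy Paillier cryptosystem. Paillier is only ADDITIVELY homomorphic; it
# stands in for FHE here purely to illustrate key custody. The primes are
# tiny and hard-coded: insecure, illustration only.


def keygen(p: int = 1009, q: int = 1013):
    n = p * q
    lam = math.lcm(p - 1, q - 1)  # Carmichael function of n (Python 3.9+)
    g = n + 1                     # common simple choice of generator
    # mu = L(g^lam mod n^2)^-1 mod n, with L(x) = (x - 1) // n
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (lam, mu)      # (public key, private key)


def encrypt(pub, m: int) -> int:
    n, g = pub
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:    # r must be invertible mod n
        r = secrets.randbelow(n - 1) + 1
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def add_encrypted(pub, c1: int, c2: int) -> int:
    # Multiplying ciphertexts adds the underlying plaintexts (mod n).
    n, _ = pub
    return (c1 * c2) % (n * n)


def decrypt(pub, priv, c: int) -> int:
    n, _ = pub
    lam, mu = priv
    return ((pow(c, lam, n * n) - 1) // n) * mu % n


# The data owner encrypts (hypothetical) salaries and uploads them.
pub, priv = keygen()
salaries = [52000, 61000, 48000]
ciphertexts = [encrypt(pub, s) for s in salaries]

# The server computes the total without seeing any plaintext.
total_ct = ciphertexts[0]
for ct in ciphertexts[1:]:
    total_ct = add_encrypted(pub, total_ct, ct)

# But whoever holds `priv` can read the result AND every input.
print(decrypt(pub, priv, total_ct))        # 161000
print(decrypt(pub, priv, ciphertexts[1]))  # 61000
```

The homomorphism protects the data from the party doing the arithmetic, not from the keyholder; if the service provider generated and keeps `priv`, the encryption only moves the trust question.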

about Apple's Memory Integrity Enforcement (MIE). It will raise the cost of zero-day exploits, but by how much? MIE stops a huge swath of exploits that target unsafe memory handling. It's impressive and required new hardware features.....
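MIE builds on hardware memory tagging (Apple describes it as based on Arm's Enhanced Memory Tagging Extension). The core idea can be simulated in a few lines: each chunk of memory carries a small tag, each pointer carries a tag, and every access checks that they match. The toy below is a software model under stated assumptions (per-byte tags instead of 16-byte granules, a bump allocator, hypothetical class and method names), not Apple's implementation. It shows why a linear overflow and a use-after-free both fault.

```python
import secrets
from dataclasses import dataclass

TAG_BITS = 4  # Arm MTE-style tags are 4 bits wide


class TagCheckFault(Exception):
    """Raised when a pointer's tag does not match the memory's tag."""


@dataclass(frozen=True)
class TaggedPtr:
    addr: int
    tag: int


class ToyTaggedHeap:
    """Toy lock-and-key model: every byte has a tag (real hardware tags
    16-byte granules), every pointer carries a tag, and each access
    compares the two."""

    def __init__(self, size: int) -> None:
        self.mem = bytearray(size)
        self.tags = [0] * size
        self.next_free = 0  # bump allocator; freed memory is never reused

    def _fresh_tag(self, *avoid: int) -> int:
        # Pick a random nonzero tag that differs from the tags to avoid.
        while True:
            tag = secrets.randbelow(1 << TAG_BITS)
            if tag != 0 and tag not in avoid:
                return tag

    def malloc(self, n: int) -> TaggedPtr:
        addr = self.next_free
        if addr + n > len(self.mem):
            raise MemoryError("toy heap exhausted")
        self.next_free += n
        # Differ from the preceding allocation so linear overflows fault.
        prev = self.tags[addr - 1] if addr > 0 else 0
        tag = self._fresh_tag(prev)
        for i in range(addr, addr + n):
            self.tags[i] = tag
        return TaggedPtr(addr, tag)

    def free(self, ptr: TaggedPtr, n: int) -> None:
        # Retag on free so dangling pointers no longer match.
        new_tag = self._fresh_tag(ptr.tag)
        for i in range(ptr.addr, ptr.addr + n):
            self.tags[i] = new_tag

    def _check(self, ptr: TaggedPtr, offset: int) -> int:
        i = ptr.addr + offset
        if not 0 <= i < len(self.mem) or self.tags[i] != ptr.tag:
            raise TagCheckFault(f"tag mismatch at address {i}")
        return i

    def load(self, ptr: TaggedPtr, offset: int) -> int:
        return self.mem[self._check(ptr, offset)]

    def store(self, ptr: TaggedPtr, offset: int, value: int) -> None:
        self.mem[self._check(ptr, offset)] = value


heap = ToyTaggedHeap(64)
a = heap.malloc(16)
b = heap.malloc(16)
heap.store(a, 0, 0x41)           # in bounds: allowed
try:
    heap.store(a, 16, 0x41)      # overflow from a into b: tags differ
except TagCheckFault as e:
    print("overflow caught:", e)
heap.free(b, 16)
try:
    heap.load(b, 0)              # use-after-free: b's memory was retagged
except TagCheckFault as e:
    print("use-after-free caught:", e)
```

With only 4 tag bits, an attacker who can guess or leak a tag has a real chance of matching it; the toy ignores that, which is part of why "how much does it raise the cost" is an open question.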

And it has pesky formatting requirements.

The 2025 internet: 'dubcon' is an ancillary part of the financial privacy discourse.

The past was a better place.

“My account got banned a couple of days ago for making purchases which violate the ToS. Upon querying w/ staff over the phone I've been told that it was ebooks that I've been buying”

x.com/sama/status/...

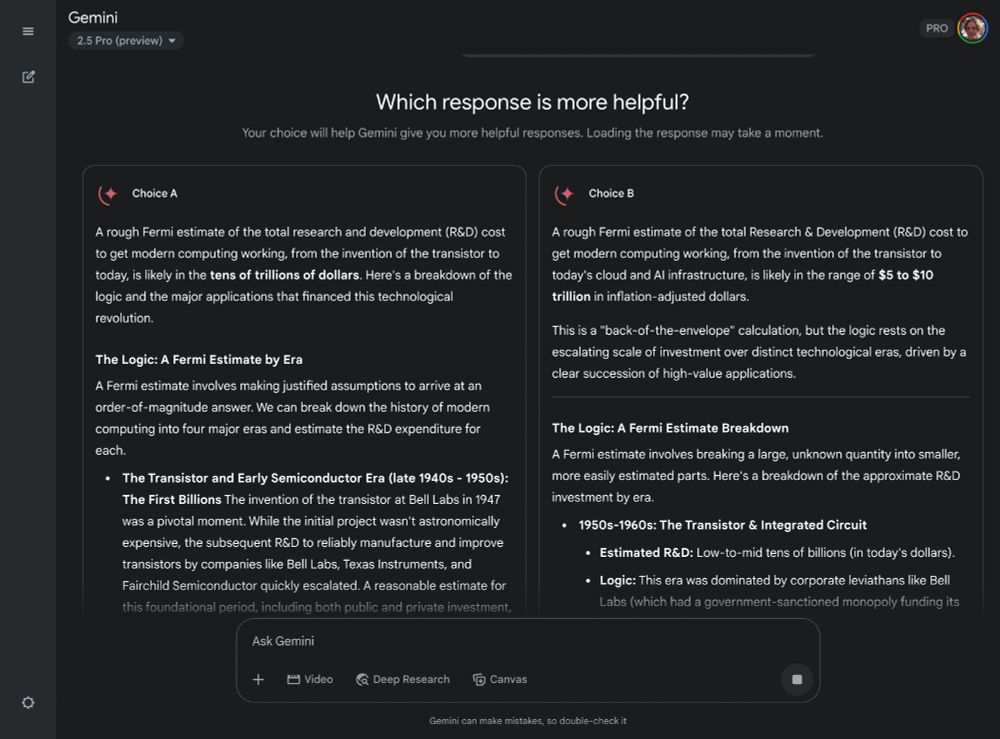

Classic Google AI: it doesn't actually work (you can't submit)

It's not just Signal being in the news: people don't trust other apps. Too many places to half-ass privacy: be it backups, ads, or an AI reading over your shoulder.

You prove you have a signed digital copy of a driver's license and that it says you are over 18, without revealing anything else about you (name, birthdate, etc.).

blog.google/products/goo...
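As a much simpler illustration of proving "over 18" without handing over a birthdate, here is a toy hash-chain age proof. This is NOT the zero-knowledge construction Google describes; it is a swapped-in technique, the issuer's signature over `commitment` is assumed and omitted, age is whole years at issuance, and all function names are hypothetical. The issuer commits to SHA-256 applied `age` times to a secret seed; the holder reveals the seed hashed `age - 18` times, and the verifier hashes 18 more times and compares. Claiming a threshold above your real age would require inverting SHA-256.

```python
import hashlib
import secrets


def hash_n(value: bytes, times: int) -> bytes:
    """Apply SHA-256 to `value` `times` times."""
    for _ in range(times):
        value = hashlib.sha256(value).digest()
    return value


def issue(age_years: int) -> tuple[bytes, bytes]:
    # Issuer: random seed kept by the holder; commitment = H^age(seed).
    # (A real issuer would sign the commitment; omitted here.)
    seed = secrets.token_bytes(32)
    return seed, hash_n(seed, age_years)


def prove_at_least(seed: bytes, age_years: int, threshold: int) -> bytes:
    # Holder: reveal H^(age - threshold)(seed); nothing else is disclosed.
    if age_years < threshold:
        raise ValueError("cannot prove: would require inverting SHA-256")
    return hash_n(seed, age_years - threshold)


def verify_at_least(proof: bytes, commitment: bytes, threshold: int) -> bool:
    # Verifier: H^threshold(proof) equals the commitment only if age >= threshold.
    return hash_n(proof, threshold) == commitment


seed, commitment = issue(34)
proof = prove_at_least(seed, 34, 18)
print(verify_at_least(proof, commitment, 18))  # True
```

One design gap versus real ZK credentials: the same `commitment` is shown every time, so presentations are linkable; avoiding that is part of why production systems use heavier proof machinery.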

blog.whatsapp.com/introducing-...

wapo.st/4k2AF5Z

www.businessinsider.com/larry-elliso...

where did your username come from?

I used to be a radio intern when I was 19, and my radio name was "Terence The Black Nerd". It stuck.