🐦: https://x.com/sedielem

Research Scientist at Google DeepMind (WaveNet, Imagen 3, Veo, ...). I tweet about deep learning (research + software), music, generative models (personal account).

sander.ai/2025/04/15/l...

Produced by Welch Labs, this is the first in a short series of 3b1b videos this summer. I enjoyed providing editorial feedback throughout the last several months, and couldn't be happier with the result.

🕒Join us at 3PM on Thursday July 17. We'll meet here (see photo, near the west building's west entrance), and venture out from there to find a good spot to sit. Tell your friends!

Nice blog + code by Raymond Fan: rfangit.github.io/blog/2025/op...

The other two interviewees' research played a pivotal role in the rise of diffusion models, whereas I just like to yap about them 😬 this was a wonderful opportunity to do exactly that!

Submission deadline: May 23 (Friday next week)

mlforaudioworkshop.github.io

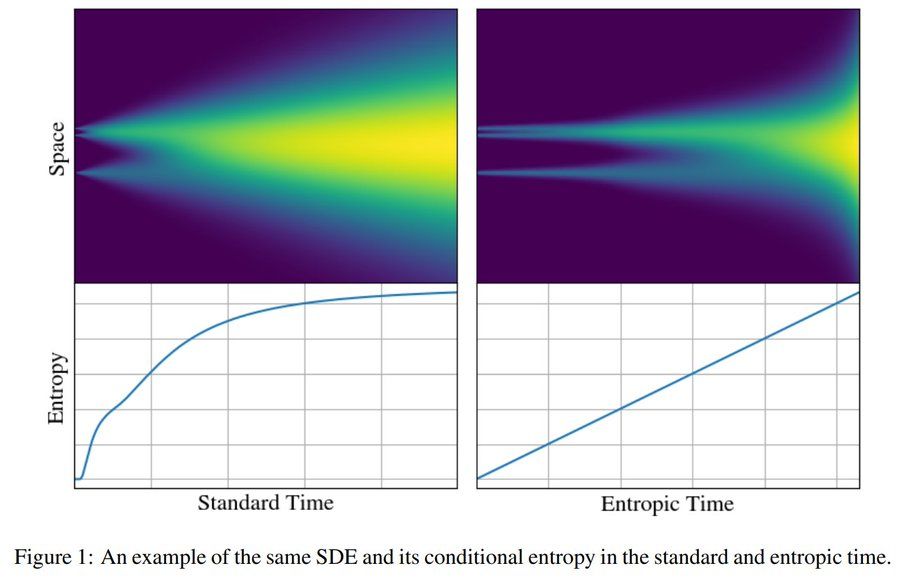

"Entropic Time Schedulers for Generative Diffusion Models"

We find that the conditional entropy offers a natural data-dependent notion of time during generation

Link: arxiv.org/abs/2504.13612

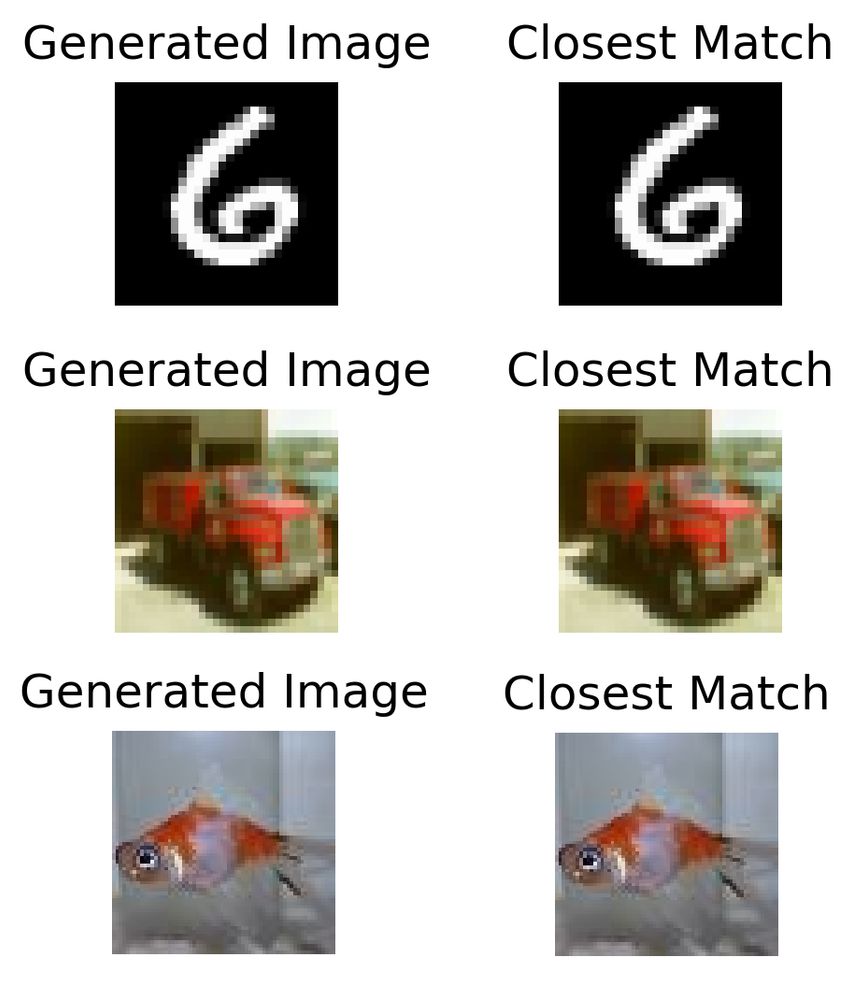

Combining latents with PCA components extracted from DINOv2 features yields faster training and better samples. Also enables a new guidance strategy. Simple and effective!

– Low-level image details (via VAE latents)

– High-level semantic features (via DINOv2)🧵

sander.ai/2025/04/15/l...

The most important lesson: be fearless! The community's view on score matching was quite pessimistic at the time, he went against the grain and made it work at scale!

www.youtube.com/watch?v=ud6z...

Now integrating thinking capabilities, 2.5 Pro Experimental is our most performant Gemini model yet. It’s #1 on the LM Arena leaderboard. 🥇

We work on Imagen, Veo, Lyria and all that good stuff. Come work with us! If you're interested, apply before Feb 28.

(One of these is not like the others -- both of them basically invented the field, and I occasionally write a blog post 🥲)
