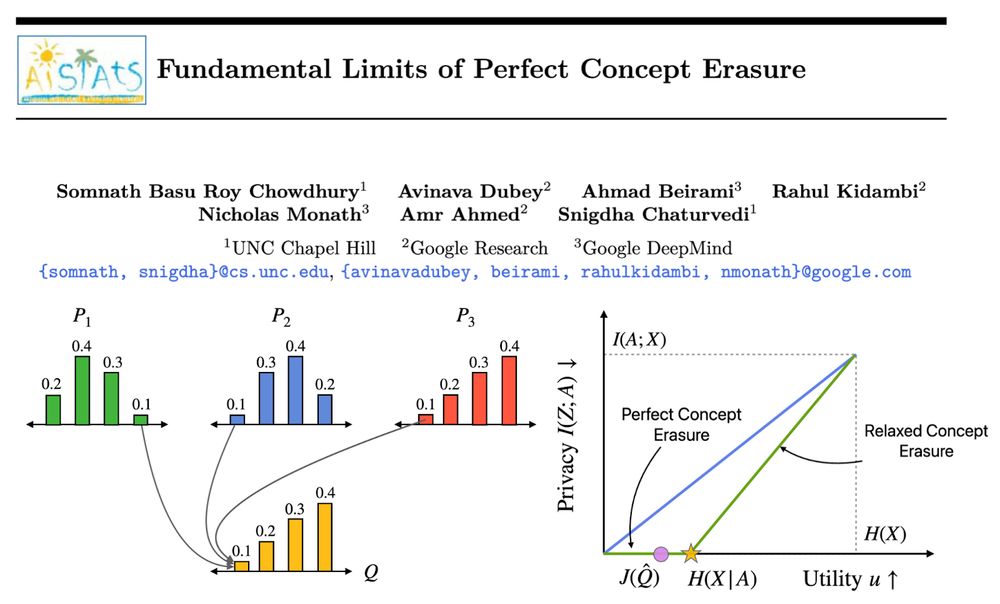

Our method, Perfect Erasure Functions (PEF), erases concepts perfectly from LLM representations. We analytically derive PEF w/o parameter estimation. PEFs achieve a Pareto-optimal erasure-utility tradeoff backed w/ theoretical guarantees. #AISTATS2025 🧵

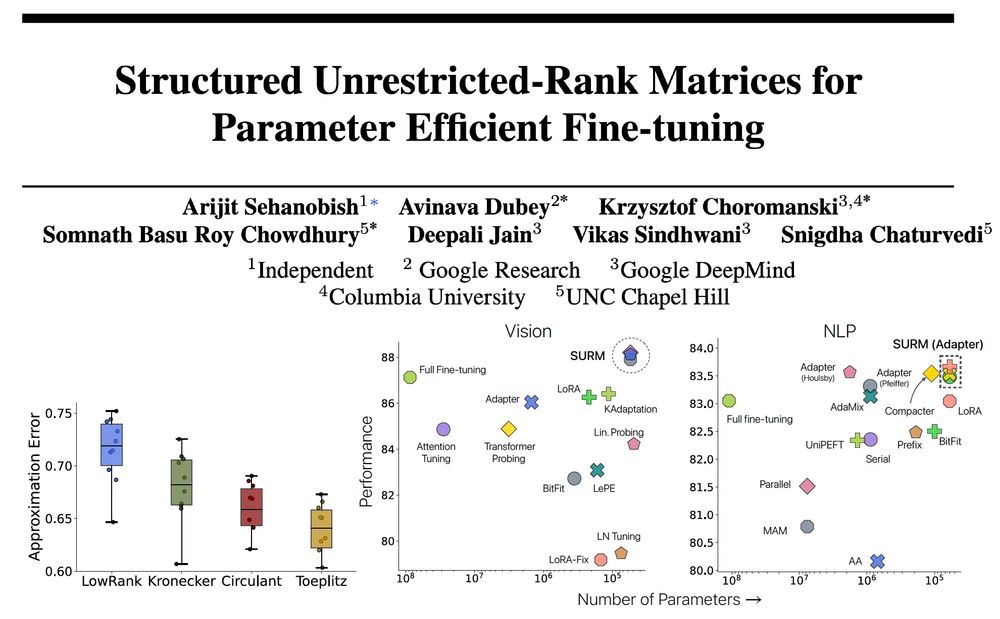

(1/3) 𝐒𝐭𝐫𝐮𝐜𝐭𝐮𝐫𝐞𝐝 𝐔𝐧𝐫𝐞𝐬𝐭𝐫𝐢𝐜𝐭𝐞𝐝-𝐑𝐚𝐧𝐤 𝐌𝐚𝐭𝐫𝐢𝐜𝐞𝐬 𝐟𝐨𝐫 𝐏𝐄𝐅𝐓

A new PEFT method replacing low-rank matrices (LoRA) with more expressive structured matrices

arxiv.org/abs/2406.17740
