Here is the report:

aapor.org/wp-content/u...

And here is the Executive Summary: aapor.org/wp-content/u...

Come to @upenn.edu for a great postdoctoral fellowship opportunity at @perryworldhouse.bsky.social.

Pass it on.

perryworldhouse.upenn.edu/opportunitie...

Our paper, now on FirstView at @politicalanalysis.bsky.social, tackles this question using browsing data from three U.S. samples (Facebook, YouGov, and Lucid):

Survey Professionalism: New Evidence from Web Browsing Data - https://cup.org/3KWgqtg

- Bernhard Clemm von Hohenberg, @tiagoventura.bsky.social, Jonathan Nagler, @ericka.bric.digital & Magdalena Wojcieszak

#FirstView

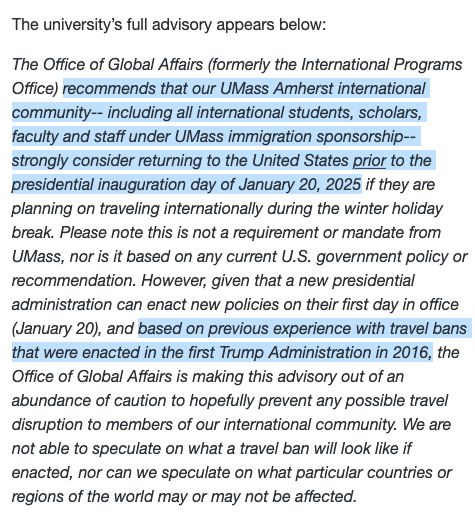

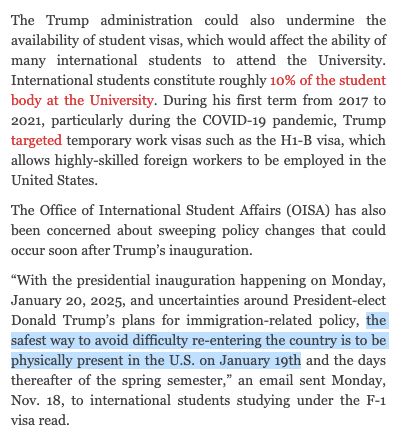

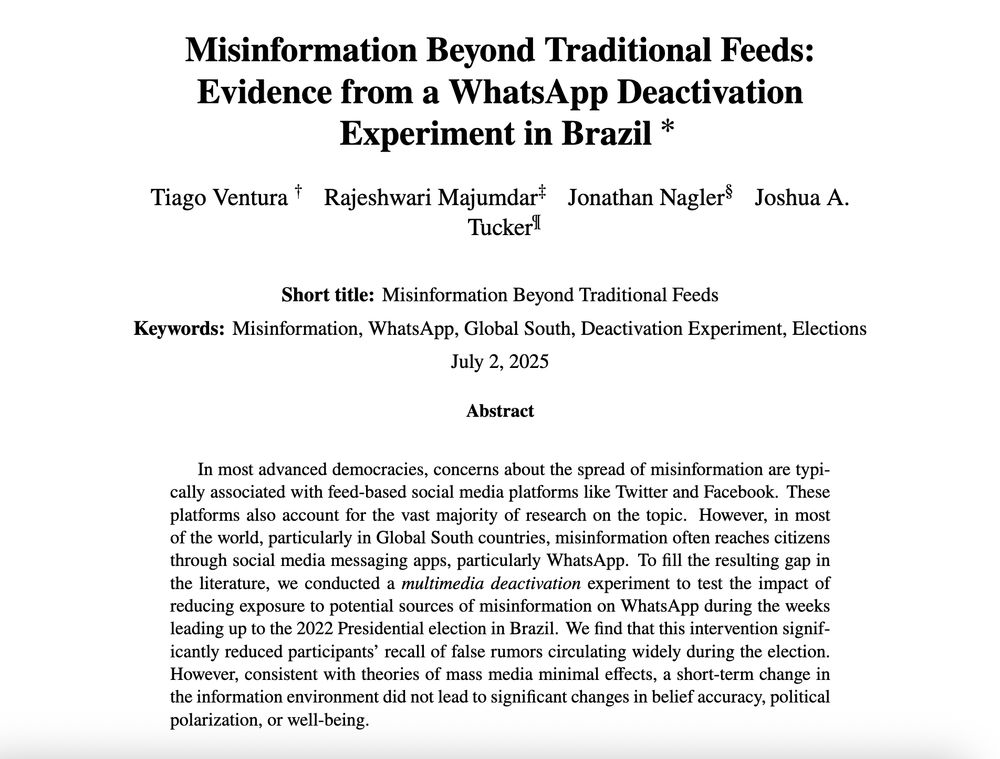

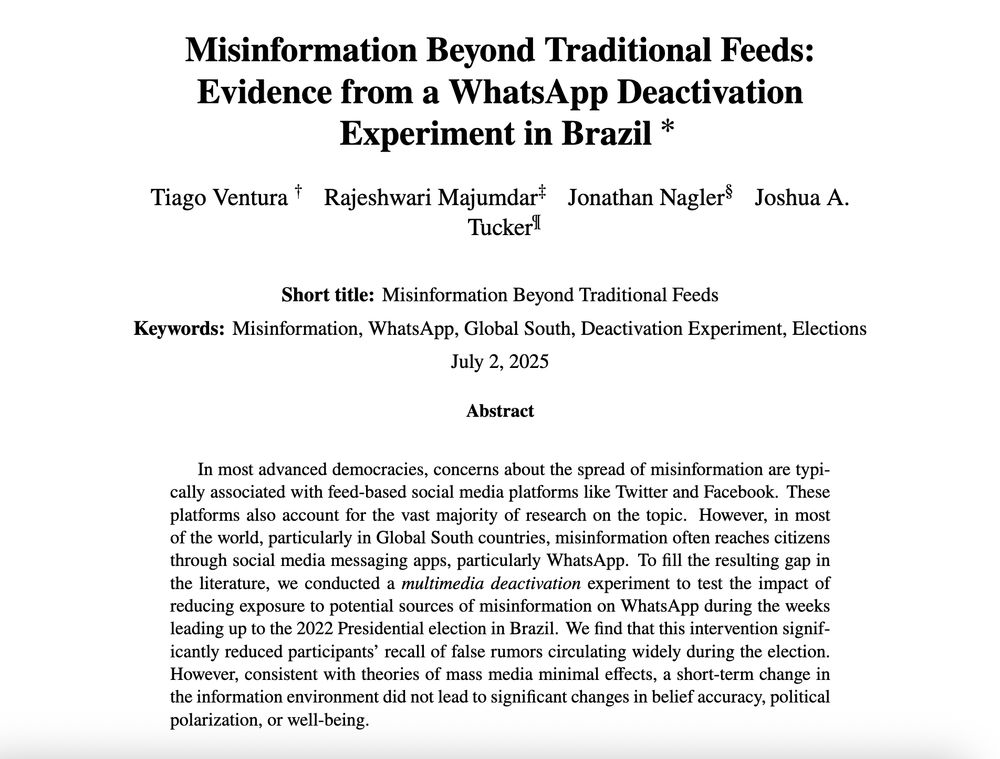

New in @The_JOP, we ran a WhatsApp deactivation experiment during Brazil’s 2022 election to explore how the app facilitates the spread of misinformation and affects voters’ attitudes.

www.journals.uchicago.edu/doi/abs/10.1...

journals.sagepub.com/doi/abs/10.1...

🚨 Fact-checking increases perceptions of fact-checkers’ reputations, even for counter-attitudinal corrections to voters’ political views.

Thanks @respol.bsky.social for publishing and sharing!

& @ecalvo68.bsky.social examine how exposure to counter- and pro-attitudinal fact-checking messages affects voters' perceptions of the fact-checker: fact-checking increases the fact-checker's reputation but also creates perceptions of ideological bias.

Do polarizing political tweets make us less trustworthy?

Not quite. But they do make us trust others less.

Our article shows how social media erodes trust, even when we stay principled.

🧵1/

We start reviewing applications on MAY 1st, and it pays over $58,000. Please share with anyone who might be interested!

Please apply here: apply.interfolio.com/166620

See more details below:

Thread below.

Apply here: politicalscience.uwo.ca/graduate/pro...

Or contact Grad Chair: [email protected]

Much has been shown about fact-checking's effects on the ability to discern true from false. But does it affect the fact-checker's perceived reputation and ideological leaning?

Check below: journals.sagepub.com/doi/10.1177/...

But I always read @caseynewton.bsky.social, and I think he misses a key point about market power and the purpose of tech criticism.

TL;DR: we shouldn't grade the tech barons on a curve.

open.substack.com/pub/davekarp...

blog.ssrn.com/2024/12/03/m...

@jatucker.bsky.social