- AI 101 series

- ML techniques

- AI Unicorns profiles

- Global dynamics

- ML History

- AI/ML Flashcards

Haven't decided yet which handle to maintain: this or @kseniase

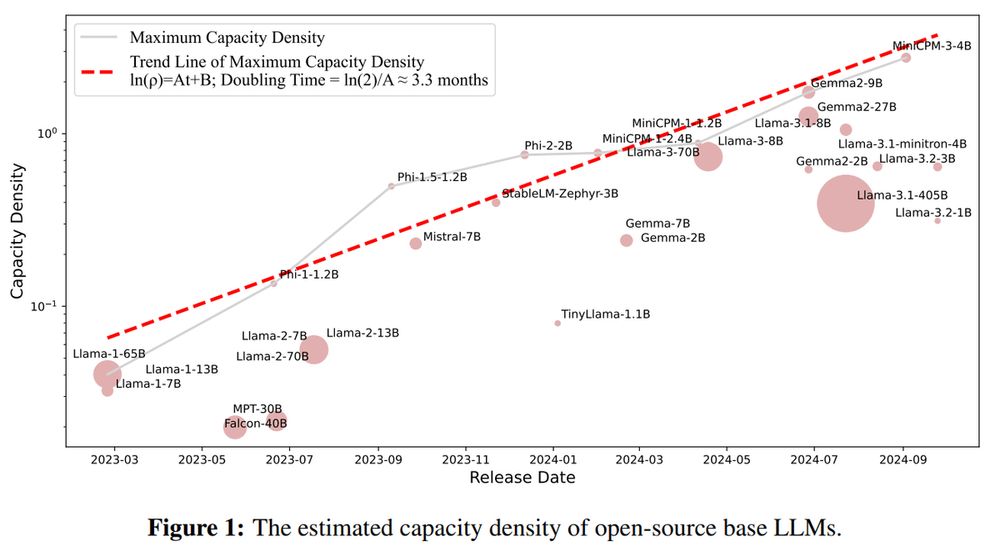

Focusing on the balance between model size and performance is more important than aiming for larger models.

Tsinghua University and ModelBest Inc. propose the idea of “capacity density” to measure how efficiently a model uses its size.
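A rough sketch of the idea, assuming capacity density is the ratio of a model's *effective* parameter size (how many parameters a reference model family would need to reach the same score) to its actual parameter size. The paper fits scaling laws to estimate the effective size; here a simple log-linear interpolation over hypothetical reference points stands in for that fit, and all numbers are made up for illustration.

```python
import math
from bisect import bisect_left

# Hypothetical reference family: (parameters in billions, benchmark score).
# Scores must increase strictly with size for the inversion below to work.
REFERENCE = [(1.0, 40.0), (4.0, 50.0), (16.0, 60.0)]


def effective_params(score, reference=REFERENCE):
    """Parameters a reference model would need to reach `score`.

    Log-linear interpolation between reference points; a stand-in for a fitted scaling law.
    """
    scores = [s for _, s in reference]
    if not scores[0] <= score <= scores[-1]:
        raise ValueError("score outside the reference range; this sketch does not extrapolate")
    i = bisect_left(scores, score)
    if scores[i] == score:
        return reference[i][0]
    (p0, s0), (p1, s1) = reference[i - 1], reference[i]
    frac = (score - s0) / (s1 - s0)
    return math.exp(math.log(p0) + frac * (math.log(p1) - math.log(p0)))


def capacity_density(actual_params, score, reference=REFERENCE):
    """Density above 1 means the model does more with its size than the reference family."""
    return effective_params(score, reference) / actual_params


# A hypothetical 2B model that scores like the 4B reference model has density 2.0.
print(capacity_density(2.0, 50.0))  # 2.0
```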


Here are the 2 latest revolutionary world models, which create interactive 3D environments:

1. Google DeepMind's Genie 2

2. AI system from World Labs, co-founded by Fei-Fei Li

Explore more below 👇


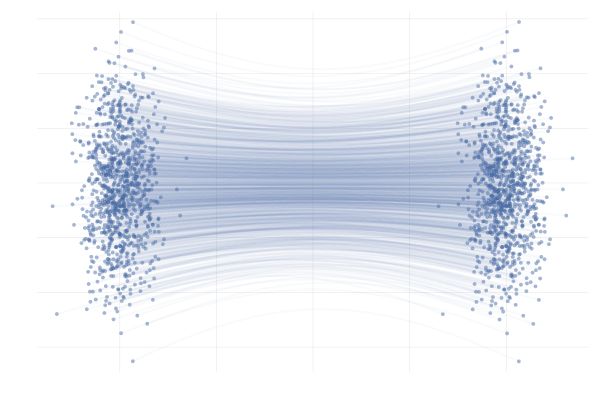

Flow Matching (FM) is used in top generative models like Flux, F5-TTS, E2-TTS, and MovieGen, with state-of-the-art results. Some experts even say that FM might surpass diffusion models👇
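Flow matching trains a model to predict a velocity field that moves noise samples toward data. Below is a minimal 1-D sketch, assuming the common linear (rectified-flow-style) path x_t = (1 - t)·x0 + t·x1, whose regression target is x1 - x0. The "model" is any Python function, and the dataset is a single made-up point so the ideal velocity can be written in closed form.

```python
import random


def cfm_target(x0, x1, t):
    """Point on the straight path from noise x0 to data x1, plus the velocity to regress onto."""
    xt = (1.0 - t) * x0 + t * x1
    return xt, x1 - x0


def cfm_loss(velocity_fn, data, n_samples=1000, seed=0):
    """Monte Carlo estimate of E[(v(x_t, t) - (x1 - x0))^2]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        x0 = rng.gauss(0.0, 1.0)  # noise sample
        x1 = rng.choice(data)     # data sample
        t = rng.random()          # t ~ U[0, 1)
        xt, u = cfm_target(x0, x1, t)
        total += (velocity_fn(xt, t) - u) ** 2
    return total / n_samples


def euler_sample(velocity_fn, x0, steps=100):
    """Generate by integrating dx/dt = v(x, t) from t = 0 to t = 1 with Euler steps."""
    x, dt = x0, 1.0 / steps
    for k in range(steps):
        x += dt * velocity_fn(x, k * dt)
    return x


def ideal_velocity(x, t):
    """For data concentrated at 3.0, the optimal velocity field is (3 - x) / (1 - t)."""
    return (3.0 - x) / (1.0 - t)


print(cfm_loss(ideal_velocity, [3.0]) < 1e-6)          # True
print(round(euler_sample(ideal_velocity, x0=-1.2), 6))  # 3.0
```

In practice `velocity_fn` would be a neural network trained to minimize `cfm_loss`; sampling then only needs an ODE solver, which is one reason FM is attractive compared with simulating a diffusion process.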


• Alibaba’s QwQ-32B

• OLMo 2 by Allen AI

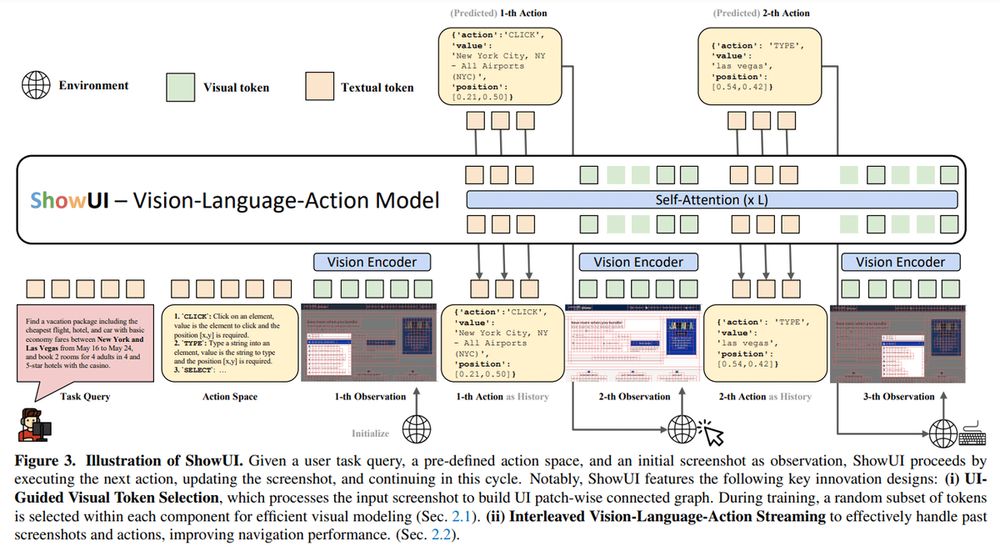

• ShowUI by Show Lab, NUS, Microsoft

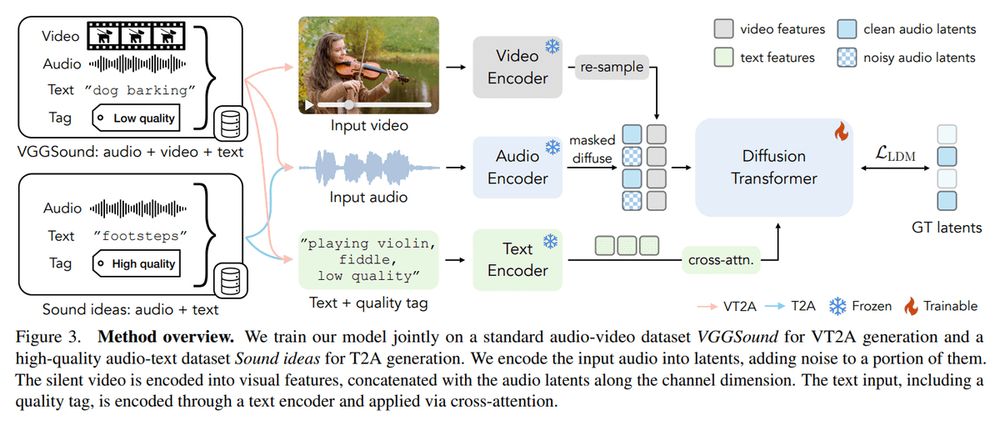

• Adobe's MultiFoley

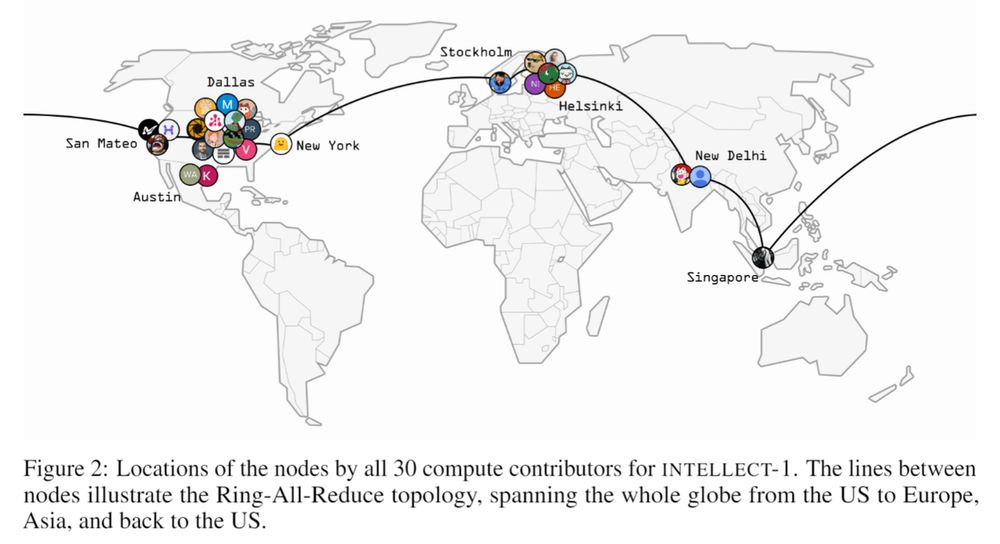

• INTELLECT-1 by Prime Intellect

🧵


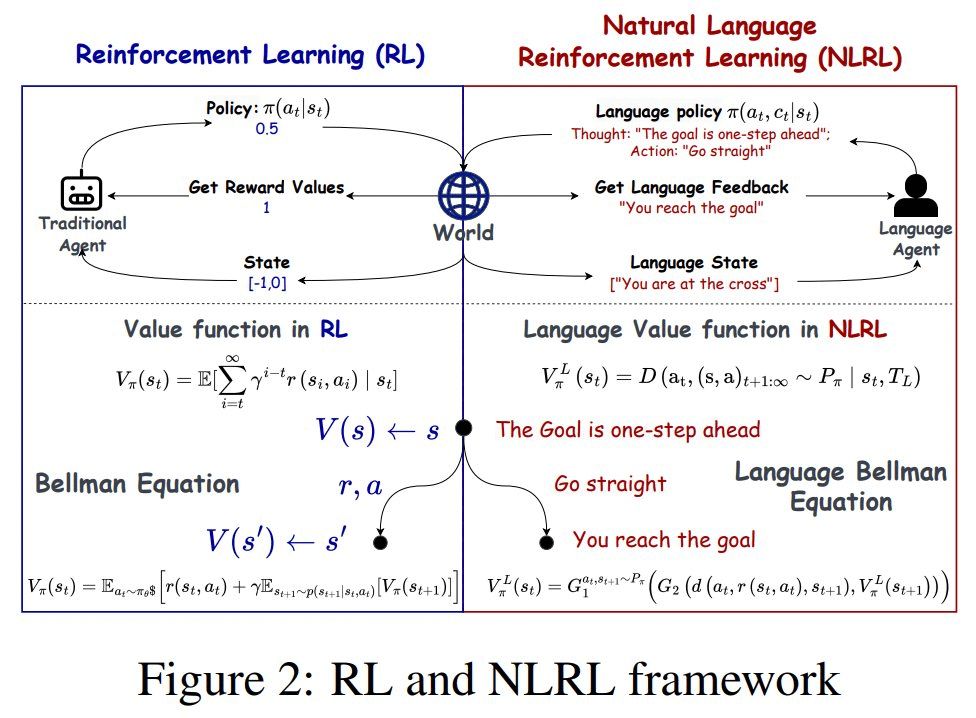

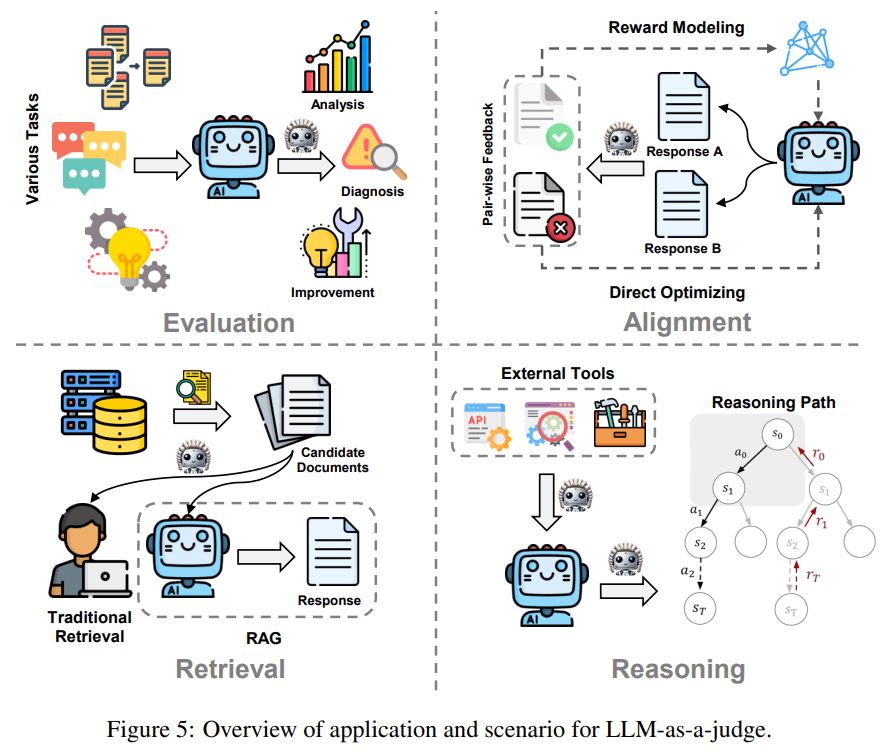

• Natural Language Reinforcement Learning

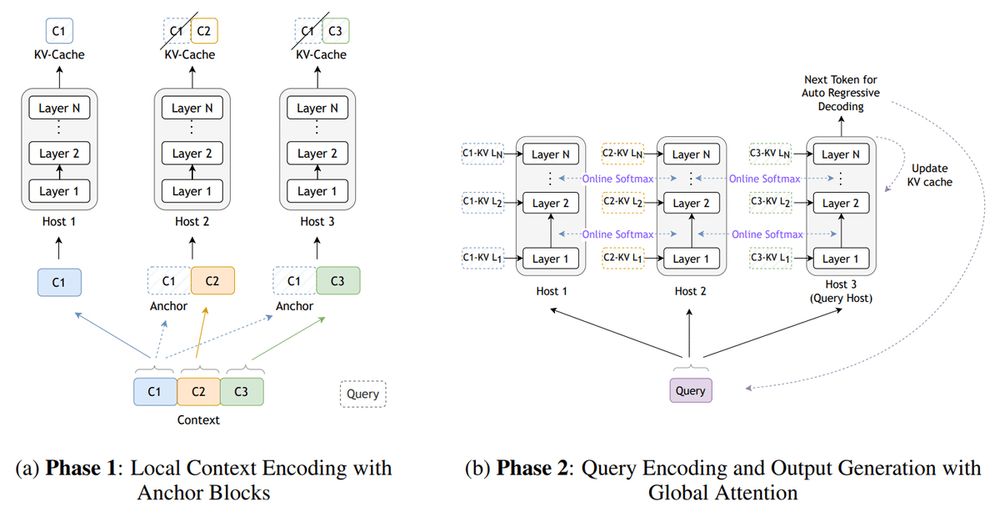

• Star Attention, NVIDIA

• Opportunities and Challenges of LLM-as-a-judge

• MH-MoE: Multi-Head Mixture-of-Experts, @msftresearch.bsky.social

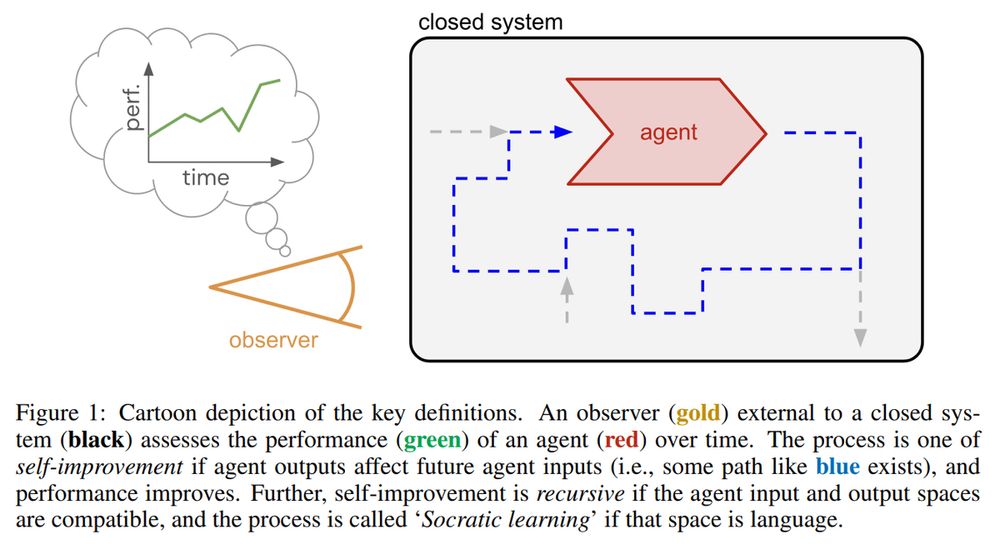

• Boundless Socratic Learning with Language Games, Google DeepMind

🧵


GUI agents blend LLMs' capabilities with software interaction to work with websites, mobile apps, and desktop software, simplifying complex tasks.

A new survey on LLM-brained GUI agents has been published👇


• 100 Days of ML Code

• Data Science For Beginners

• Awesome Data Science

• Data Science Masters

• Homemade Machine Learning

• 500+ AI Projects List with Code

• Awesome Artificial Intelligence

...

Check out more👇


The idea of HiAR-ICL (High-level Automated Reasoning in ICL) is to teach the model abstract thinking patterns using atomic reasoning actions and thought cards. Unlike standard ICL, it doesn't rely only on examples and prompts.

Details👇
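To make "thought cards" concrete, here is a hypothetical sketch: a card is an ordered sequence of atomic reasoning actions, and a new problem is matched to the card whose difficulty is closest to the problem's estimated complexity. The action names, complexity scores, and the way complexity is estimated are all placeholders; the paper derives its cards from searched reasoning paths, which this sketch does not reproduce.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ThoughtCard:
    """A problem-agnostic reasoning template: an ordered list of atomic actions."""
    name: str
    actions: Tuple[str, ...]
    complexity: float  # hypothetical difficulty level this card suits (0 = easy, 1 = hard)


def select_card(cards: List[ThoughtCard], problem_complexity: float) -> ThoughtCard:
    """Pick the card whose difficulty is closest to the problem's estimated complexity."""
    return min(cards, key=lambda card: abs(card.complexity - problem_complexity))


def build_prompt(question: str, card: ThoughtCard) -> str:
    """Turn the chosen card into step-by-step guidance instead of worked examples."""
    steps = "\n".join(f"Step {i}: {action}" for i, action in enumerate(card.actions, start=1))
    return f"Question: {question}\nReason using this pattern:\n{steps}\nAnswer:"


# Placeholder cards; real cards would come from successful reasoning traces.
CARDS = [
    ThoughtCard("direct", ("answer in one step",), complexity=0.1),
    ThoughtCard("stepwise", ("analyze the problem", "reason step by step"), complexity=0.5),
    ThoughtCard("decompose", ("analyze the problem", "split into subproblems",
                              "solve each subproblem", "reflect and refine"), complexity=0.9),
]

card = select_card(CARDS, problem_complexity=0.8)
print(card.name)  # decompose
print(build_prompt("What is 17 * 24?", card))
```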


Baichuan emphasizes slow thinking, long-term strategy, and practical innovation. Led by Wang Xiaochuan, it envisions a "symbiotic future" with math as its guide.

Explore Baichuan's unique path, strategy, and AI innovations:


Whether you are a professional or a beginner in machine learning, we made our flashcards easy to digest to help everyone refresh key ML concepts.

Here's Self-Supervised Learning, a technique used to train ML models👇
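One common way to build a self-supervised task is to hide part of the input and ask the model to reconstruct it, so the labels come from the data itself. A minimal sketch, assuming BERT-style masked-token prediction on whitespace-split text (a real pipeline would use a proper tokenizer and often also swaps some selected tokens for random ones instead of always masking):

```python
import random
from typing import List, Optional, Tuple


def make_masked_example(
    tokens: List[str], mask_prob: float = 0.15, mask_token: str = "[MASK]", seed: int = 0
) -> Tuple[List[str], List[Optional[str]]]:
    """Create an (input, labels) pair from unlabeled text, with no human annotation."""
    rng = random.Random(seed)
    inputs: List[str] = []
    labels: List[Optional[str]] = []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(mask_token)
            labels.append(tok)   # the model is trained to recover this token
        else:
            inputs.append(tok)
            labels.append(None)  # no loss on visible tokens
    return inputs, labels


text = "self supervised learning turns raw data into its own training signal".split()
inputs, labels = make_masked_example(text, mask_prob=0.3, seed=42)
print(inputs)
print(labels)
```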


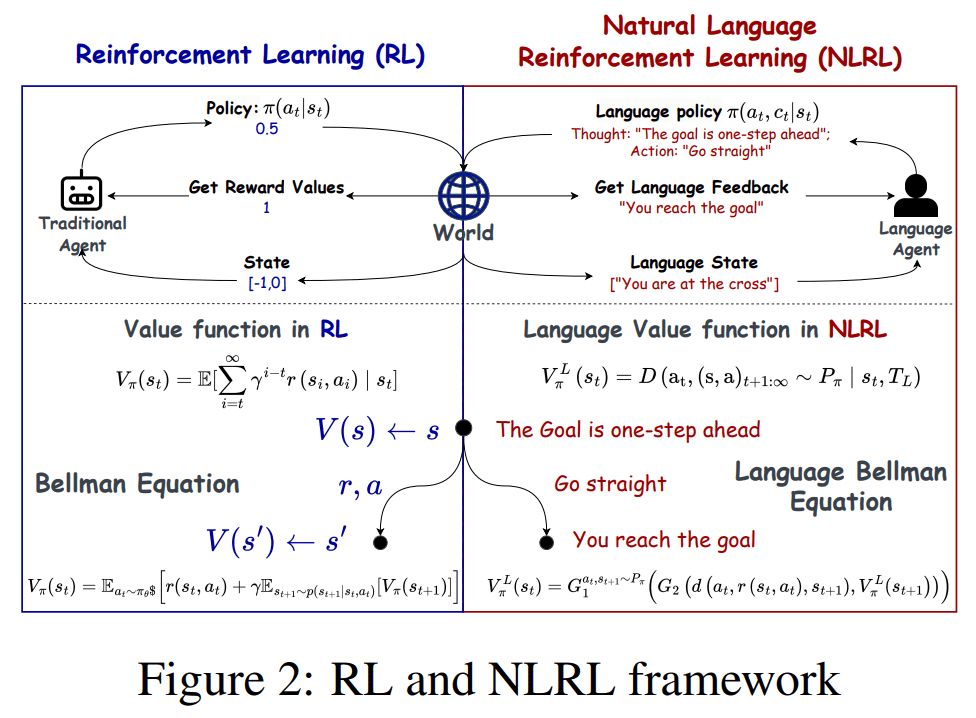

NLRL's main idea:

The core parts of RL like goals, strategies, and evaluation methods are reimagined using natural language instead of rigid math.

Let's explore this approach in more detail🧵
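A toy sketch of the idea, assuming the strategy (policy) and the evaluation (value) are both prompts to a language model that return text instead of action probabilities and scalar values. `LLM` is a hypothetical stand-in for any text-in, text-out model, the prompt wording is illustrative rather than taken from the paper, and the stub model exists only so the sketch runs offline.

```python
from typing import Callable, List

# Hypothetical: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]


def language_policy(llm: LLM, goal: str, state: str) -> str:
    """Strategy as text: reason about the state, then propose the next action."""
    return llm(f"Goal: {goal}\nState: {state}\nThink step by step, then name the next action.")


def language_value(llm: LLM, goal: str, state: str) -> str:
    """Evaluation as text: a verbal judgment instead of a scalar V(s)."""
    return llm(f"Goal: {goal}\nState: {state}\nHow promising is this state for the goal, and why?")


def improve_policy(llm: LLM, goal: str, state: str, candidates: List[str]) -> str:
    """Pick an action by comparing verbal evaluations of where each candidate leads."""
    lines = []
    for action in candidates:
        verdict = language_value(llm, goal, f"{state}; after action: {action}")
        lines.append(f"- {action}: {verdict}")
    evaluations = "\n".join(lines)
    return llm(
        f"Goal: {goal}\nState: {state}\nCandidate evaluations:\n{evaluations}\n"
        "Reply with the best action only."
    )


def stub_llm(prompt: str) -> str:
    """Stand-in model: rates states mentioning 'center' as good, then picks a 'good' action."""
    if prompt.endswith("Reply with the best action only."):
        for line in prompt.splitlines():
            if line.startswith("- ") and line.endswith(": good"):
                return line[2:].split(":")[0]
        return "no preference"
    return "good" if "center" in prompt else "poor"


print(improve_policy(stub_llm, "win at tic-tac-toe", "empty board",
                     ["take a corner", "take the center"]))  # take the center
```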


1. Profiling: Keeps the agent aligned with its purpose.

2. Knowledge: Provides domain-specific expertise.

Find other components below👇

P.S. As an example, here's the agent framework from LangChain’s Harrison Chase.
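A hypothetical sketch of how the first two components could be wired into an agent's prompt: the profile fixes the agent's role and goals, and a naive keyword-overlap lookup pulls in domain knowledge. Class and field names are illustrative, and the remaining components from the thread are not modeled here.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentProfile:
    """Profiling: who the agent is and what it is for."""
    role: str
    goals: List[str]


@dataclass
class KnowledgeBase:
    """Knowledge: domain-specific facts the agent can draw on."""
    facts: List[str] = field(default_factory=list)

    def lookup(self, query: str, k: int = 2) -> List[str]:
        # Naive retrieval by word overlap; a real system might use embeddings instead.
        words = set(query.lower().split())
        ranked = sorted(self.facts, key=lambda f: len(words & set(f.lower().split())), reverse=True)
        return ranked[:k]


def build_system_prompt(profile: AgentProfile, kb: KnowledgeBase, task: str) -> str:
    """Combine the profile (purpose) with retrieved knowledge (expertise) for one task."""
    goals = "; ".join(profile.goals)
    facts = "\n".join(f"- {fact}" for fact in kb.lookup(task))
    return f"You are {profile.role}. Goals: {goals}.\nRelevant knowledge:\n{facts}\nTask: {task}"


profile = AgentProfile("a travel-booking assistant", ["book refundable fares", "stay within budget"])
kb = KnowledgeBase(["Refundable fares cost more", "Budget airlines charge for bags",
                    "Hotels near airports are cheaper"])
print(build_system_prompt(profile, kb, "find refundable fares to Paris"))
```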


Here are 6 FREE AI courses for beginners:

• Introduction to Artificial Intelligence

• Artificial Intelligence for Beginners

• AI For Everyone

• Machine Learning for Beginners

• Introduction to Data Science Specialization

• Data Science for Beginners

Links👇


Here's what enhances LLaVA-o1's complex multimodal reasoning:

- Reasoning in 4 stages

- Effective inference-time scaling via stage-level beam search

- Special dataset with step-by-step reasoning examples

👇
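A simplified sketch of stage-level beam search, assuming the four stages are summary, caption, reasoning, and conclusion, and that for each stage the model samples several candidates and keeps only the best one before moving on (a beam width of 1). `generate` and `score` are hypothetical stand-ins for the model's sampling and for whatever judge compares candidates.

```python
from typing import Callable, List

STAGES = ["summary", "caption", "reasoning", "conclusion"]

# Hypothetical interfaces:
#   generate(stage, previous_outputs, sample_id) -> candidate text
#   score(stage, previous_outputs, candidate) -> float (higher is better)
Generate = Callable[[str, List[str], int], str]
Score = Callable[[str, List[str], str], float]


def stage_level_beam_search(generate: Generate, score: Score, n_candidates: int = 4) -> List[str]:
    """Search at the granularity of whole reasoning stages rather than individual tokens."""
    outputs: List[str] = []
    for stage in STAGES:
        candidates = [generate(stage, outputs, i) for i in range(n_candidates)]
        best = max(candidates, key=lambda c: score(stage, outputs, c))
        outputs.append(best)  # commit to the best stage output, then continue
    return outputs


# Toy stand-ins: candidates are just labeled, and the "judge" prefers candidate 2.
def toy_generate(stage: str, previous: List[str], i: int) -> str:
    return f"{stage}-{i}"


def toy_score(stage: str, previous: List[str], candidate: str) -> float:
    return 1.0 if candidate.endswith("-2") else 0.0


print(stage_level_beam_search(toy_generate, toy_score))
# ['summary-2', 'caption-2', 'reasoning-2', 'conclusion-2']
```

Searching per stage is coarser than token-level beam search but finer than best-of-N over whole answers, so a bad caption or summary can be discarded before the model builds its reasoning on top of it.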


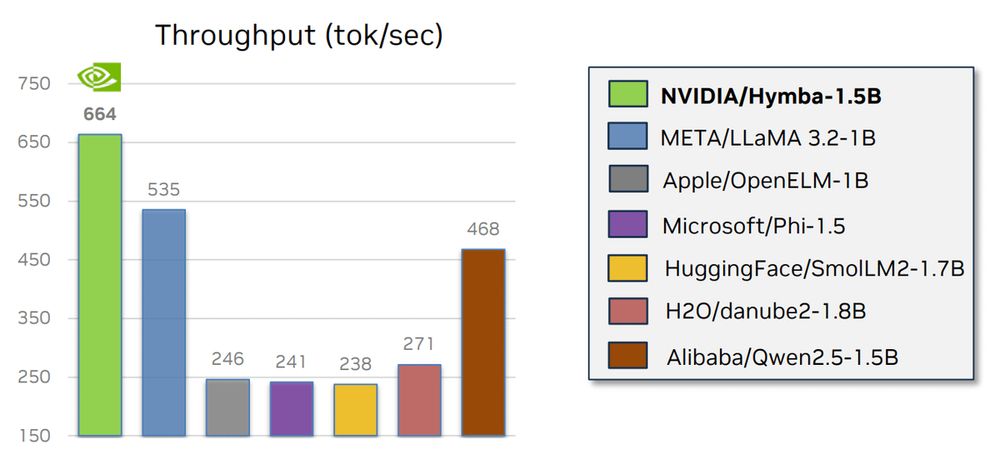

- Transformer attention to help the model remember details.

- State Space Models (SSMs) to efficiently summarize context.

This model is also a treasure trove of interesting features.

Here are the details:


▪️ Multimodal Autoregressive Pre-training of Large Vision Encoders

▪️ Enhancing the Reasoning Ability of Multimodal Large Language Models via Mixed Preference Optimization

▪️ SAMURAI

▪️ Natural Language Reinforcement Learning

and 4 more!

🧵


I've been working on an Agentic Workflows series recently. Here is my glossary for you ;)

Agentic Workflows – Fancy term for letting AI handle your to-do list – with the constant fear that it creates its own.


• Hymba: NVIDIA’s hybrid-head architecture merges transformer attention with state space models (SSMs). It outperforms larger models like Llama-3.2-3B with less memory use and higher throughput.

🧵
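A toy, scalar-valued sketch of the hybrid-head idea, assuming an attention head and an SSM head read the same input in parallel and their outputs are simply averaged. Real layers use learned projections and a learned way of combining the two outputs; here the "SSM" is just a fixed exponential moving average, and attention uses each scalar as its own query, key, and value.

```python
import math
from typing import List


def causal_attention(xs: List[float]) -> List[float]:
    """Attention head: each position looks back at every earlier position (detailed recall)."""
    out = []
    for t in range(len(xs)):
        scores = [xs[t] * xs[j] for j in range(t + 1)]  # query = x_t, keys = x_0..x_t
        m = max(scores)                                  # subtract max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append(sum(w * xs[j] for j, w in enumerate(weights)) / total)
    return out


def ssm_head(xs: List[float], decay: float = 0.5) -> List[float]:
    """SSM head: a fixed-size recurrent state summarizes the whole context so far."""
    h, out = 0.0, []
    for x in xs:
        h = decay * h + (1.0 - decay) * x
        out.append(h)
    return out


def hybrid_head(xs: List[float]) -> List[float]:
    """Run both heads on the same input in parallel and average their outputs."""
    return [0.5 * (a + s) for a, s in zip(causal_attention(xs), ssm_head(xs))]


print(hybrid_head([1.0, 2.0, 3.0]))
```

The attention head's work grows with the number of past positions, while the SSM head keeps a constant-size state per step, which is why mixing the two can cut memory use without giving up precise recall entirely.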


They are all about models:

• Tülu 3

• Marco-o1

• DeepSeek-R1-Lite

• Bi-Mamba

• Pixtral Large

🧵


I'm sharing newsletters exploring AI & ML

- Weekly trends

- LLM/FM insights

- Unicorn spotlights

- Global dynamics

- History

Join to get fresh news and interesting materials about AI! turingpost.com
