The administration's new AI Executive Order, aiming to suppress state-level AI regulation, risks undermining the innovation it seeks to advance.

nationalinterest.org/blog/techlan...

Check out this new op-ed by @stephbatalis.bsky.social and myself for the 80th anniversary of @thebulletin.org!

thebulletin.org/premium/2025...

Identifying assumptions can help policymakers make informed, flexible decisions about AI under uncertainty.

cset.georgetown.edu/publication/...

One interesting aspect is its statement that the federal government should withhold AI-related funding from states with "burdensome AI regulations."

This could be cause for concern.

CSET's policy.ai author @alexfriedland.bsky.social broke down the key takeaways and the most interesting and important recommendations across all 30 sections.

Check it out: cset.georgetown.edu/article/trum...

One key reason is that states play a critical role in building AI governance infrastructure.

Check out this new op-ed by @jessicaji.bsky.social, myself, and @minanrn.bsky.social on this topic!

thehill.com/opinion/tech...

This article is fascinating: it points out that while much of the AMR prevention discussion focuses on overuse of antimicrobials, underuse can also be a major issue.

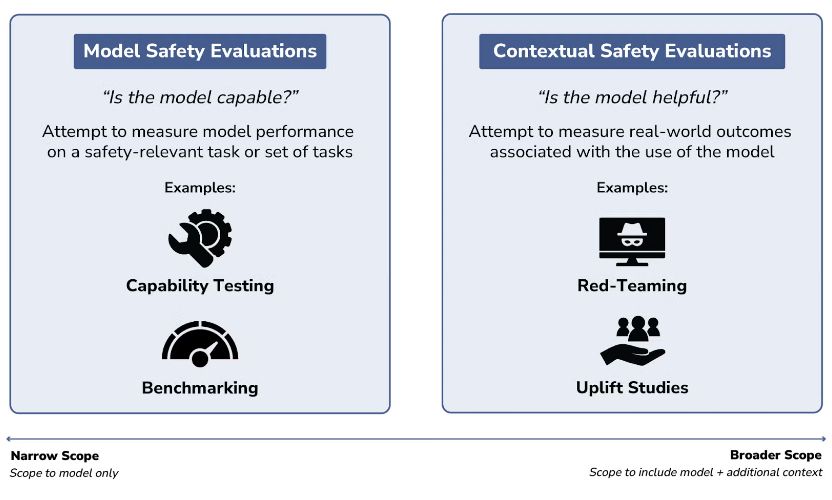

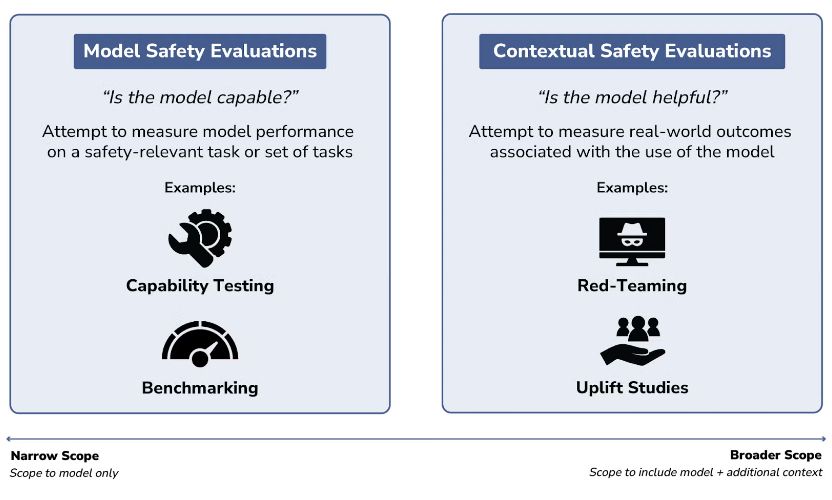

In our recent blog post, we explore the different questions we can ask about safety, how we can start to measure them, and what it means for AIxBio. Check it out! ⬇️

Effectively evaluating AI models is more crucial than ever. But how do AI evaluations actually work?

In their new explainer, @jessicaji.bsky.social, @vikramvenkatram.bsky.social & @stephbatalis.bsky.social break down the different fundamental types of AI safety evaluations.

There's no perfect method, but safety evaluations are the best tool we have.

That said, different evals answer different questions about a model!

America's biodefense strategy uses robust health infrastructure to deter bad actors. Right now, we're tearing down our own defenses so adversaries don't have to.

“Dismantling critical preparedness offices, cutting infrastructure and funding, and allowing misinformation to derail the response are not just bad for healthcare—they’re dangerous national security signals.”

www.defenseone.com/ideas/2025/0...

For the National Interest, @jack-corrigan.bsky.social and I discuss a potential change that could benefit public access to medical drugs.

nationalinterest.org/blog/techlan...

If you were in their shoes, where would you draw the line?

Check it out here!

thebulletin.org/2025/04/how-...

How? Here are three recommendations.

"How to stop bioterrorists from buying dangerous DNA," by @stephbatalis.bsky.social and @vikramvenkatram.bsky.social. ⬇️

Our experts informed debates on AI governance, military applications, and workforce trends, testified before Congress, and introduced impactful data tools like AGORA and PARAT.

Learn more in our Annual Report: cset.georgetown.edu/publication/...

Re: AI x bio policy, the piece mentions a few of our key findings. I'd like to discuss them here:

We publish evidence-based, data-driven, and analytically rigorous work on a wide range of tech policy topics!

Follow us here: bsky.app/starter-pack...

It breaks down how AI specifically affects biorisk and discusses the policy tools available at each stage to reduce that risk.

Definitely worth a read!

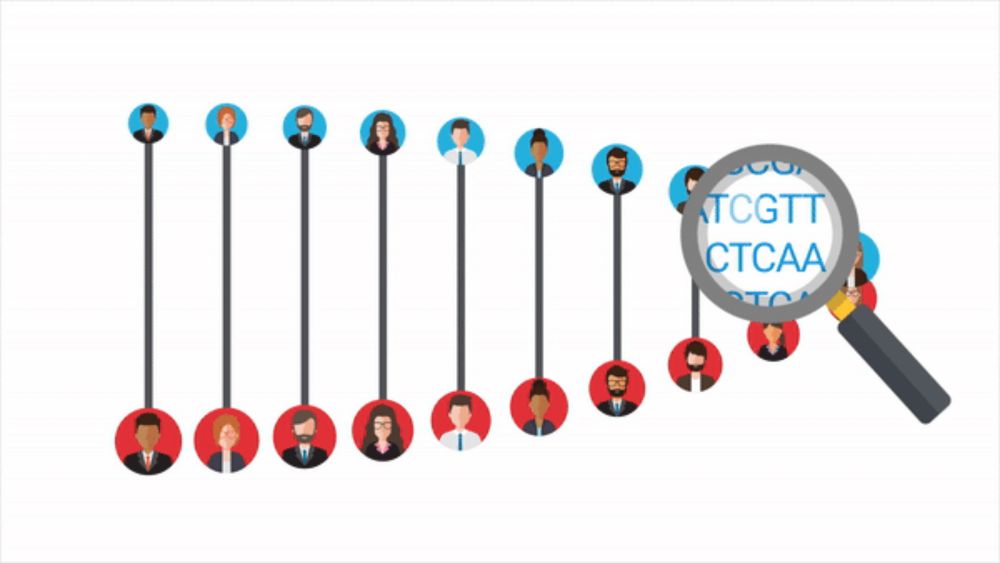

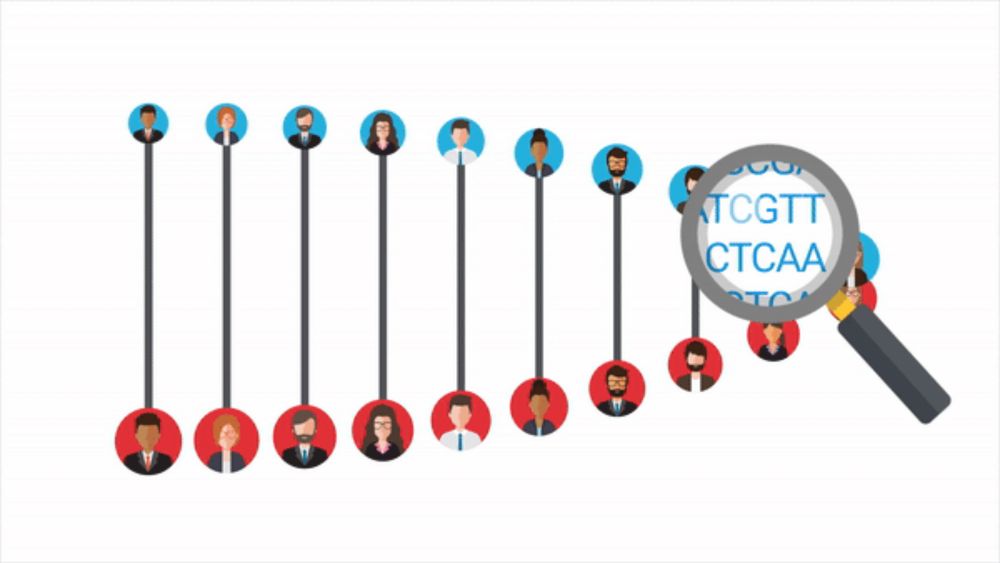

A new report from @stephbatalis.bsky.social breaks these steps down into phases, from planning and design through execution. Understanding these pathways can help us implement safeguards and promote innovation.

More: cset.georgetown.edu/publication/...

Check out the new response @stephbatalis.bsky.social and I submitted to a NIST/AISI RFI on chem-bio models!

cset.georgetown.edu/publication/...