www.biorxiv.org/content/10.6...

academic.oup.com/schizophreni...

#PsychSciSky #VisionScience #neuroskyence

More information to follow!

visualneuroscience.auckland.ac.nz/epc-apcv-2026/

This was a fun project : )

Turns out that the main task used for many decades to measure people's capacity to visualize isn't really fit for that purpose!

authors.elsevier.com/a/1lS9S_NzVj...

Our evidence suggests that links between Autism and Aphantasia have been overstated because of circular logic (the most popular measure of Autistic traits includes questions about imagery).

authors.elsevier.com/c/1kYhW3lcz4...

We think imagery binocular rivalry priming is an unreliable subjective measure of imagery.

authors.elsevier.com/sd/article/S...

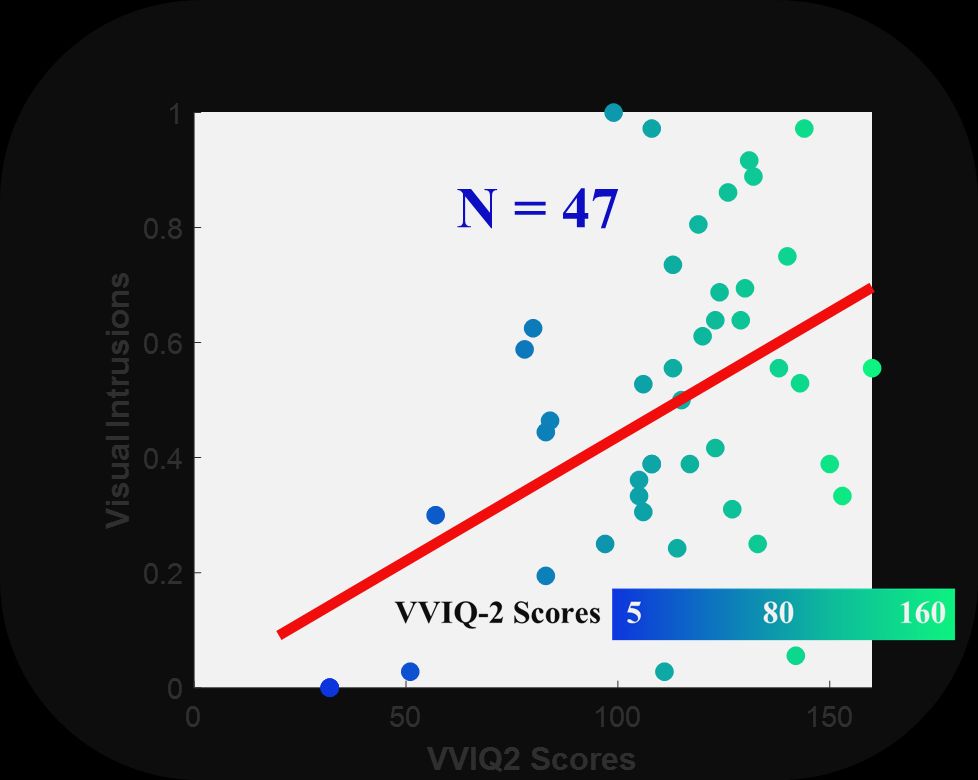

People with vivid visual imaginations are less able to suppress involuntary visualisations.

doi.org/10.1016/j.co...

#Aphantasia #Imagery

Aphants, and people with low-vividness imagery, may be resistant to intrusive visualisations!

#Aphantasia #Imagery

@sampendu.bsky.social

theconversation.com/the-pink-ele...

"Mapping object space dimensions: new insights from temporal dynamics"

🔗 doi.org/10.1101/2024...

1/ Summary: 🧵👇

🔍 Title: Flexible Use of Facial Features Supports Face Identity Processing

Our study reveals that the key to accurate face recognition lies in the flexibility of using different facial features.

#Research #FaceRecognition #Psychology #Forensics #Security #AcademicResearch

epc.psy.unsw.edu.au/index.html

www.sciencedirect.com/science/arti...

www.sciencedirect.com/science/arti...

www.sciencedirect.com/science/arti...

#Aphantasia #Imagery

www.biorxiv.org/content/10.1...
