Thousands are turning to AI chatbots for emotional connection – finding comfort, sharing secrets, and even falling in love. But as AI companionship grows, the line between real and artificial relationships blurs.

I’m a CS Postdoc at Stanford in the Stanford HCI group.

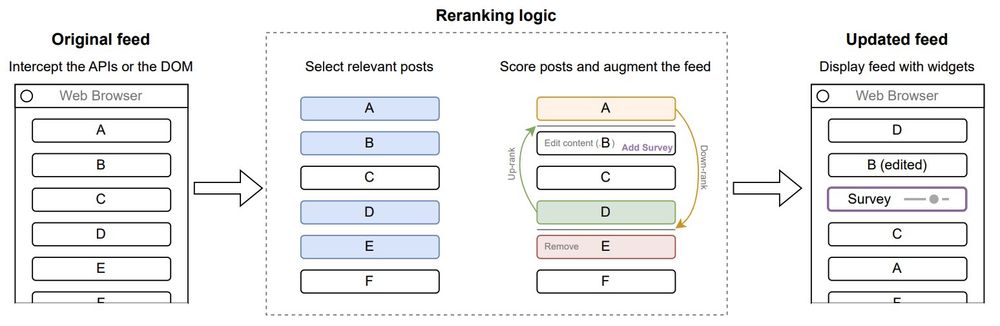

I develop ways to improve the online information ecosystem by designing better social media feeds & improving Wikipedia. I work on AI, Social Computing, and HCI.

piccardi.me 🧵

It works! #cscw2024 paper by @popowski.bsky.social
