Adam Brandt

@adambrandt.bsky.social

740 followers

200 following

40 posts

Senior Lecturer in Applied Linguistics at Newcastle University (UK). Uses #EMCA to research social interaction, and particularly how people communicate with (and through) technologies, such as #conversationalAI.


Reposted by Adam Brandt

Adam Brandt

@adambrandt.bsky.social

· May 29

Adam Brandt

@adambrandt.bsky.social

· May 28

Reposted by Adam Brandt

DOTE and DOTEbase

@dote.bsky.social

· May 23

Reposted by Adam Brandt

Hypervisible

@hypervisible.blacksky.app

· Mar 27

Bill Gates: Within 10 years, AI will replace many doctors and teachers—humans won't be needed ‘for most things'

Over the next decade, advances in artificial intelligence will mean that humans will no longer be needed "for most things" in the world, says Bill Gates.

www.nbcchicago.com

Adam Brandt

@adambrandt.bsky.social

· Mar 14

Education Committee announces session on higher education funding - Committees - UK Parliament

Education Committee Chair Helen Hayes MP has today announced a deep dive evidence session examining funding issues in the higher education sector.

committees.parliament.uk

Adam Brandt

@adambrandt.bsky.social

· Mar 7

Adam Brandt

@adambrandt.bsky.social

· Feb 27

Adam Brandt

@adambrandt.bsky.social

· Feb 23

Reposted by Adam Brandt

Adam Brandt

@adambrandt.bsky.social

· Feb 22

Adam Brandt

@adambrandt.bsky.social

· Feb 10

Reposted by Adam Brandt

Adam Brandt

@adambrandt.bsky.social

· Jan 29

Adam Brandt

@adambrandt.bsky.social

· Jan 29

Adam Brandt

@adambrandt.bsky.social

· Jan 29

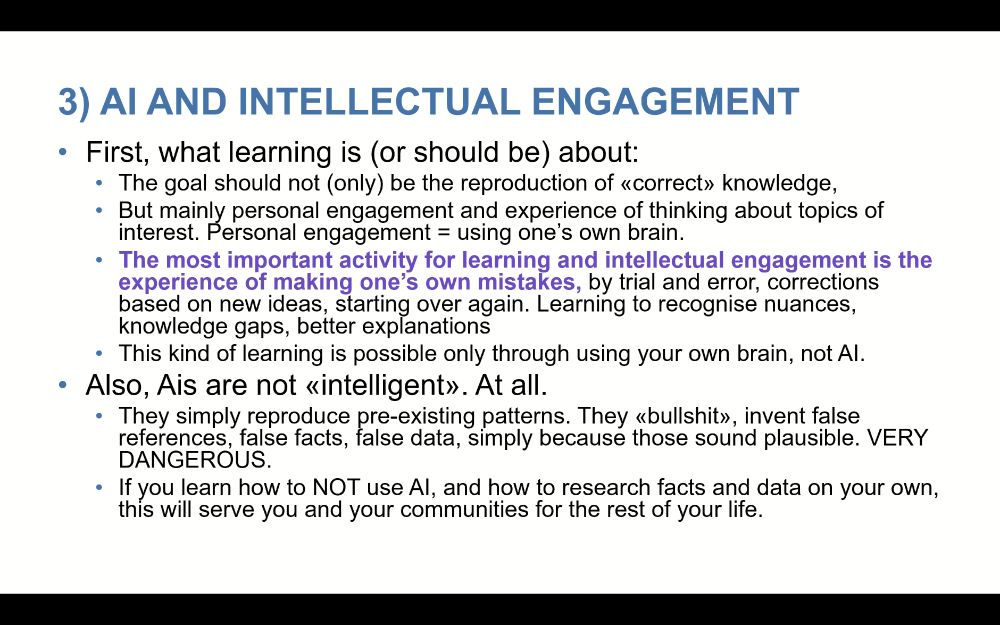

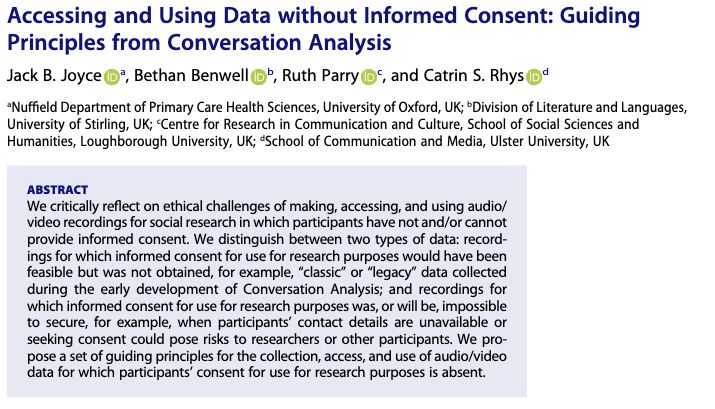

![Preamble

All research at our institution, from ideation and execution to analysis and reporting, is bound by the Netherlands Code of Conduct for Research Integrity. This code specifies five core values that organise and inform research conduct: Honesty, Scrupulousness, Transparency, Independence and Responsibility.

One way to summarise the guidelines in this document is to say they are about taking these core values seriously. When it comes to using Generative AI in or for research, the question is if and how this can be done honestly, scrupulously, transparently, independently, and responsibly.

A key ethical challenge is that most current Generative AI undermines these values by design [3–5; details below]. Input data is legally questionable; output reproduces biases and erases authorship; fine-tuning involves exploitation; access is gated; versioning is opaque; and use taxes the environment.

While most of these issues apply across societal spheres, there is something especially pernicious about text generators in academia, where writing is not merely an output format but a means of thinking, crediting, arguing, and structuring thoughts. Hollowing out these skills carries foundational risks.

A common argument for Generative AI is a promise of higher productivity [5]. Yet productivity does not equal insight, and when kept unchecked it may hinder innovation and creativity [6, 7]. We do not need more papers, faster; we rather need more thoughtful, deep work, also known as slow science [8–10].

For these reasons, the first principle when it comes to Generative AI is to not use it unless you can do so honestly, scrupulously, transparently, independently and responsibly. The ubiquity of tools like ChatGPT is no reason to skimp on standards of research integrity; if anything, it requires more vigilance.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:tfyqeo3tprov5lejatxoi6lb/bafkreidtloh77ocmijnfeddey4fmme43s2mhahdpmr7otrrmvpzjca5kn4@jpeg)