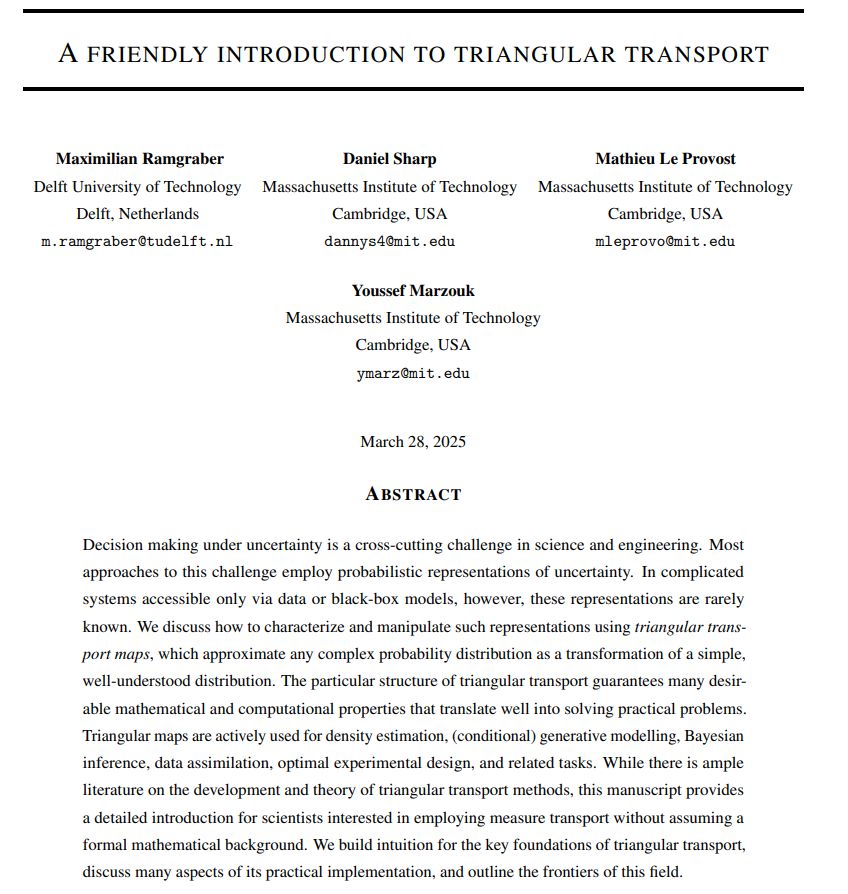

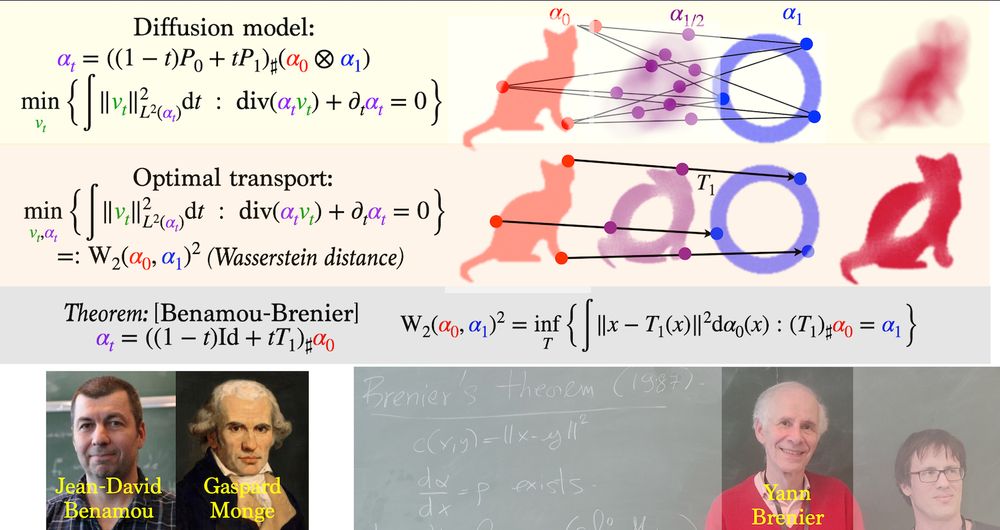

It might not be the easiest intro to diffusion models, but this monograph is an amazing deep dive into the math behind them and all the nuances.

But how do we discover such temporal structure?

Hierarchical RL provides a natural formalism, yet many questions remain open.

Here's our overview of the field🧵

In a new preprint with Zahra Kadkhodaie and @eerosim.bsky.social, we develop a novel energy-based model in order to answer these questions: 🧵

cvpr.thecvf.com/Conferences/...

📄 arxiv.org/abs/2503.07565

🌍 lumalabs.ai/news/inducti...

www.lesswrong.com/posts/oKAFFv...

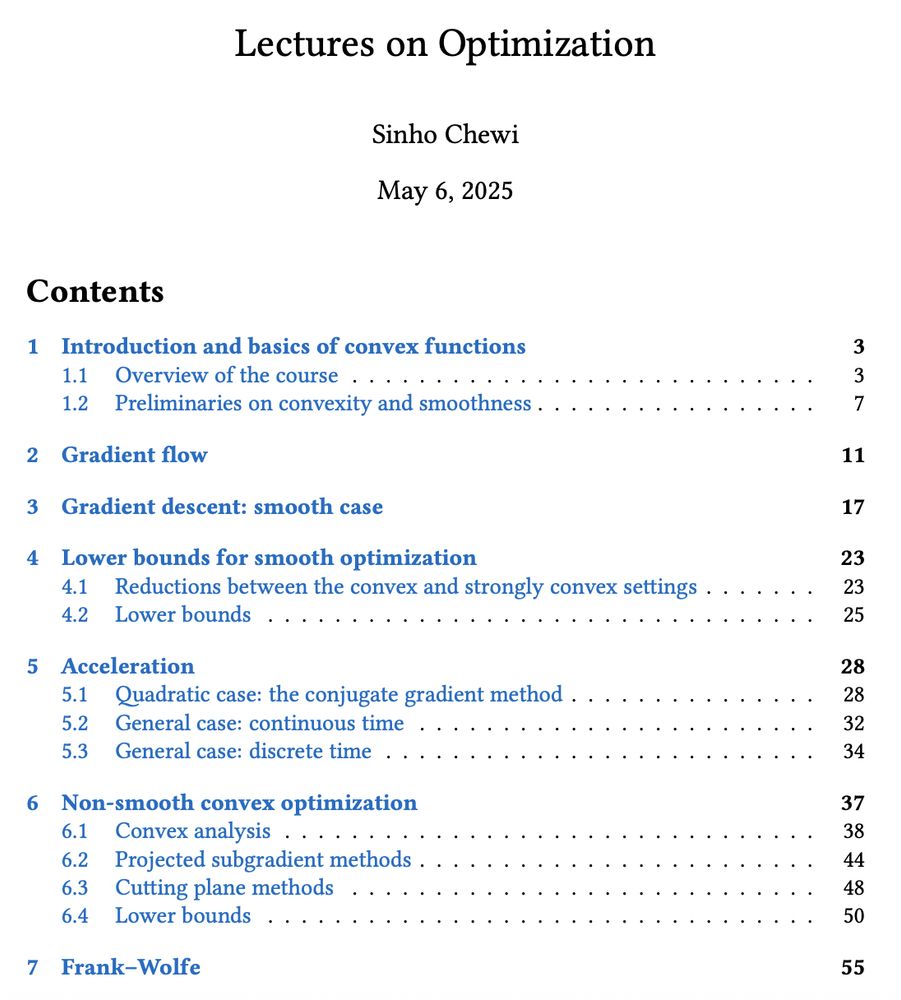

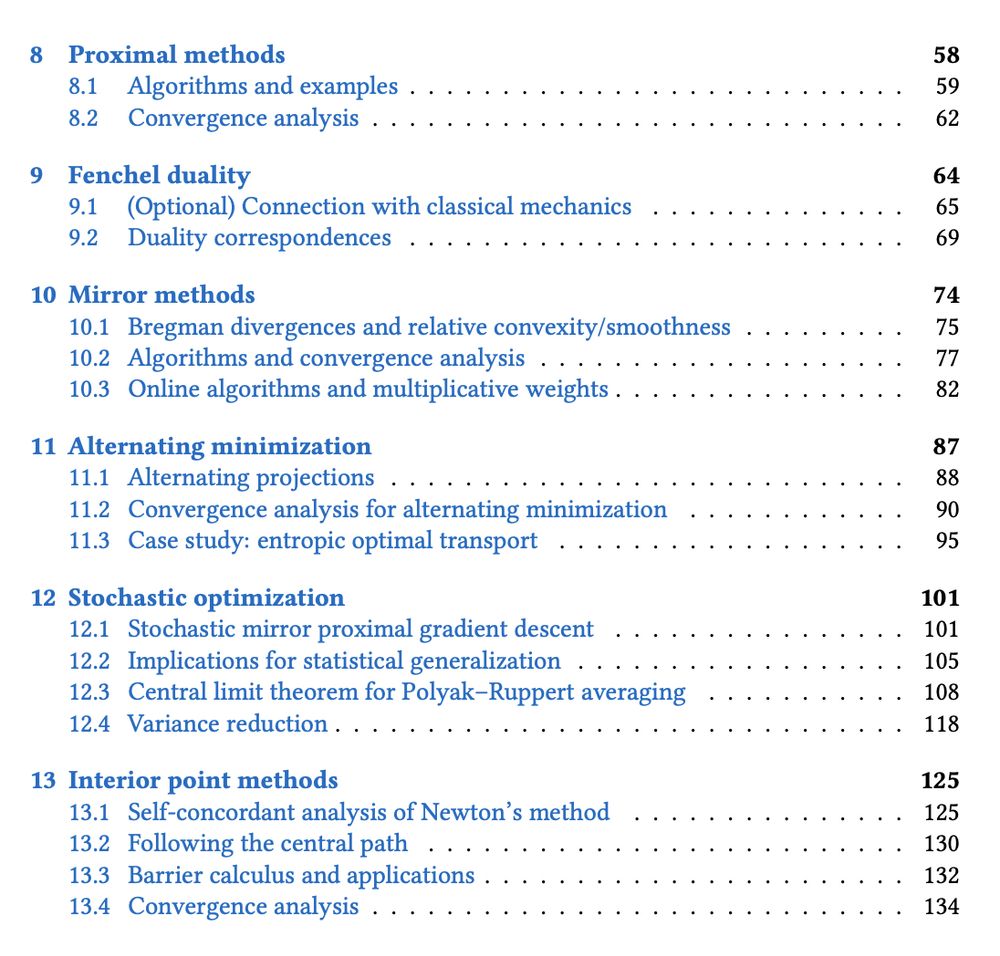

This week, with the agreement of the publisher, I uploaded the published version on arXiv.

Fewer typos, more references, and additional sections, including PAC-Bayes Bernstein.

arxiv.org/abs/2110.11216

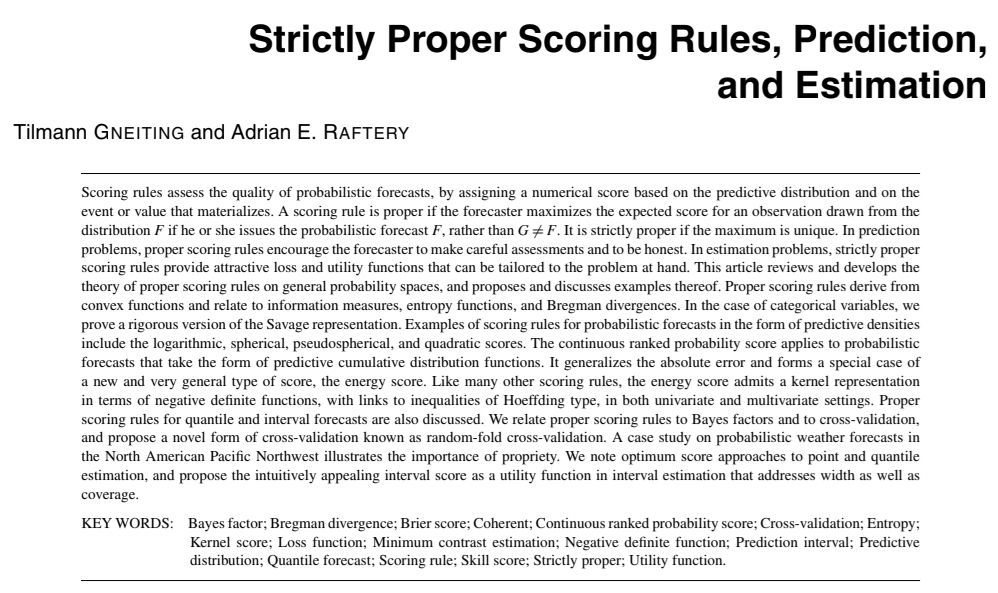

I assume I should advertise for it after the holidays, but in case you are still online today:

arxiv.org/abs/2412.18539

which reminded me of @ardemp.bsky.social's rule #1 on how to science: Don’t be too busy

Being too busy (with noise) = less time to read papers, less time to think and to connect the dots, less time for creative work!

This manuscript gives a big-picture, up-to-date overview of the field of (deep) reinforcement learning and sequential decision making, covering value-based RL, policy-gradient methods, model-based methods, and various other topics.

arxiv.org/abs/2412.05265

🧵👇

“Working with LLMs doesn’t feel the same. It’s like fitting pieces into a pre-defined puzzle instead of building the puzzle itself.”

www.reddit.com/r/MachineLea...

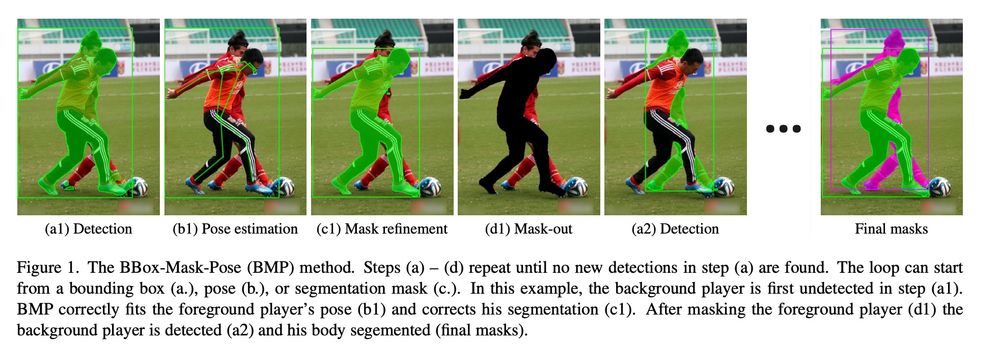

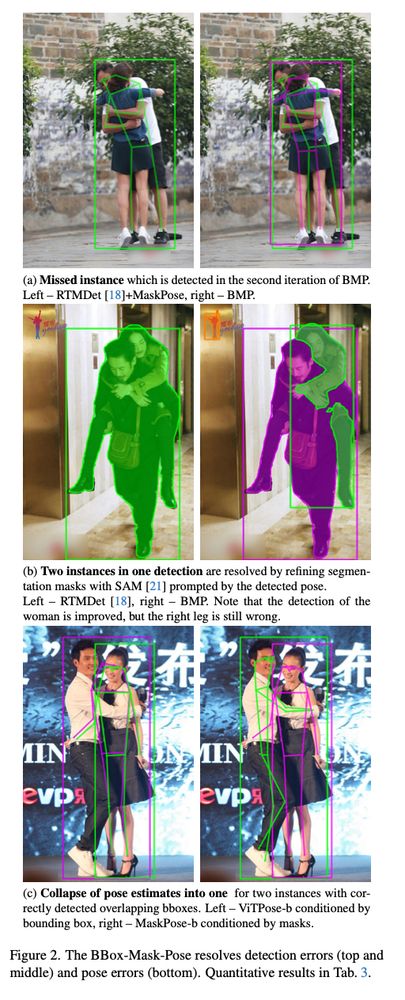

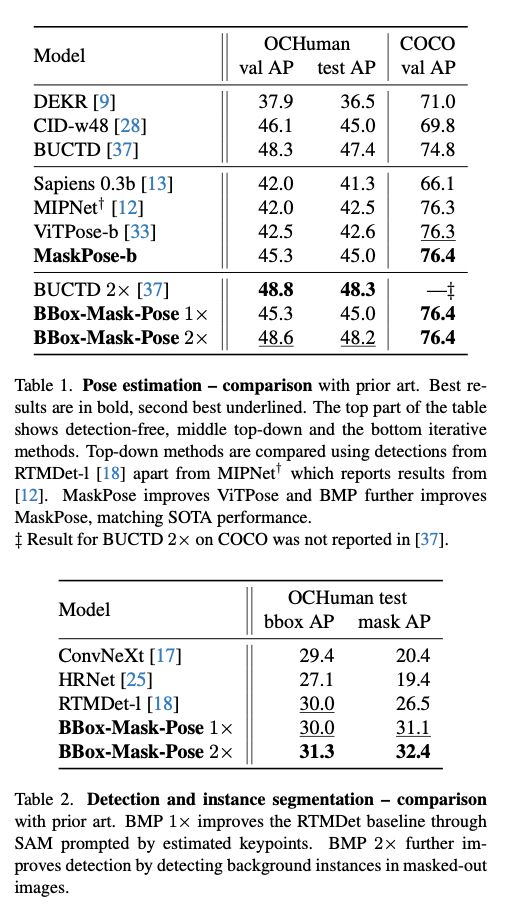

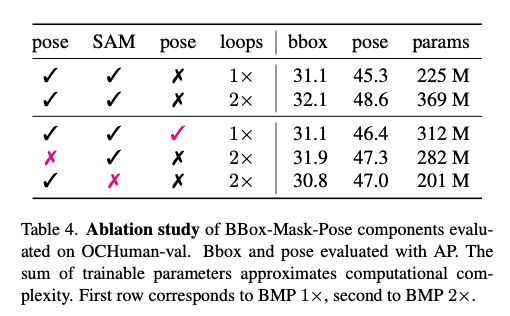

Miroslav Purkrabek, Jiri Matas

tl;dr: detect bbox -> mask -> estimate human pose -> mask them and repeat. SAM-enabled method :)

arxiv.org/abs/2412.01562

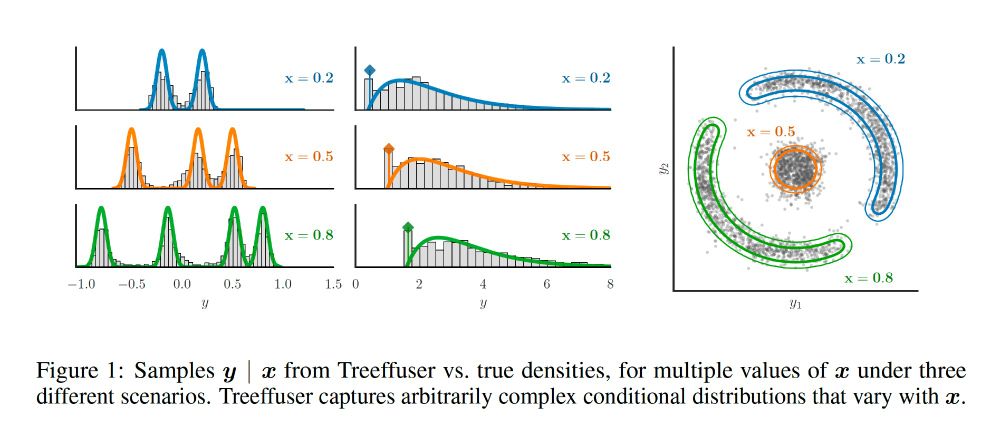

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)

📖 arxiv.org/abs/2402.19460 🧵1/10

However, it's criminally undocumented. I tried using it outside Google to fine-tune PaliGemma and SigLIP on GPUs, and wrote a tutorial: lb.eyer.be/a/bv_tuto.html
